Salesforce has released its xLAM family of Large Action Models (LAMs), built to enable more efficient and autonomous workflows. Unlike Large Language Models (LLMs), which excel at generating text, LAMs are built to execute tasks autonomously, making decisions and managing entire processes without requiring explicit human instructions. The xLAM-1B, xLAM-7B, and xLAM-8x22B models are available on Hugging Face.

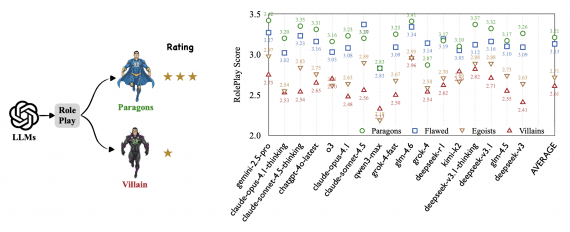

xLAM vs. LLMs: A New Paradigm

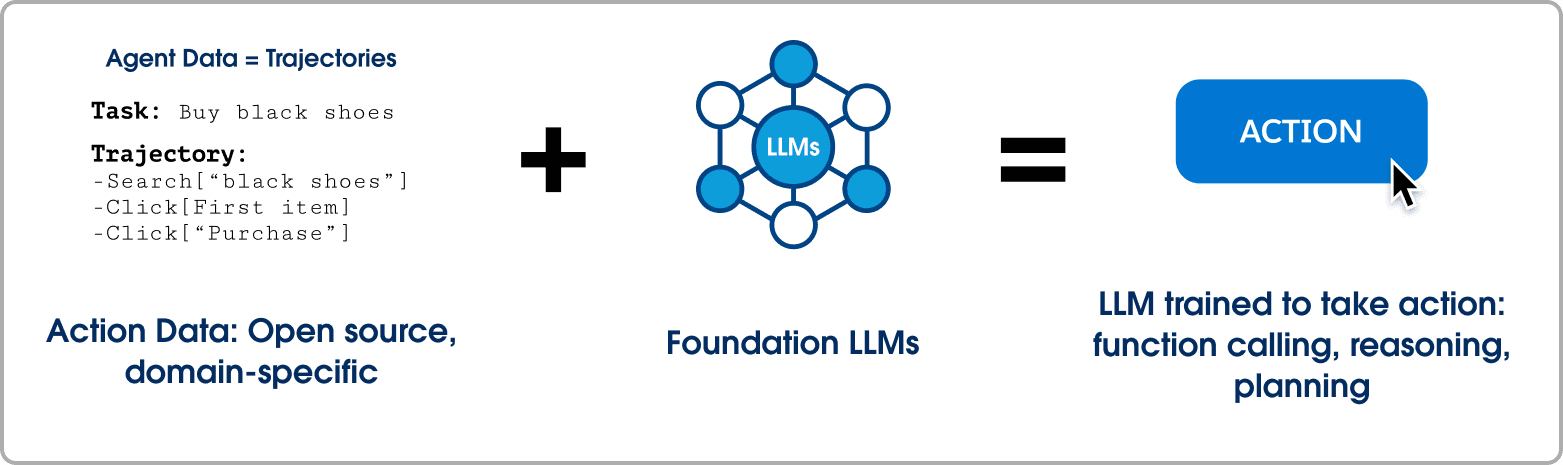

While LLMs have demonstrated impressive capabilities in text generation and content creation, LAMs take this functionality a step further. LAMs are designed for function-calling: the model emits a structured call to a named function, and the surrounding system runs it, so the AI does not just suggest actions but executes them autonomously. This is critical in business environments where real-time decisions are necessary, such as updating databases or completing workflows based on function calls.

xLAM models specialize in real-time task execution, offering businesses the ability to automate complex processes more efficiently than existing LLMs. For example, xLAM models can autonomously handle tasks such as CRM updates, customer support queries, or sales pipeline management, thereby reducing the need for human intervention.
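The function-calling pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not Salesforce's implementation: the `update_crm_record` tool, the in-memory `CRM` store, and the JSON shape of the model output are all assumptions made for the example, and a real xLAM deployment may use a different output format.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical in-memory CRM store standing in for a real business system.
CRM: Dict[str, Dict[str, Any]] = {
    "acct-001": {"name": "Acme", "stage": "prospect"},
}


def update_crm_record(record_id: str, field: str, value: str) -> Dict[str, Any]:
    """Hypothetical tool: update one field on a CRM record and return the record."""
    CRM[record_id][field] = value
    return CRM[record_id]


# Registry mapping tool names (as the model would emit them) to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {
    "update_crm_record": update_crm_record,
}


def execute_tool_call(model_output: str) -> Any:
    """Parse a JSON tool call and run the matching registered function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything that is not explicitly registered.
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call["arguments"])


# Assumed model output for illustration; the real format may differ.
model_output = (
    '{"name": "update_crm_record", '
    '"arguments": {"record_id": "acct-001", "field": "stage", "value": "qualified"}}'
)
result = execute_tool_call(model_output)
print(result)
```

Keeping an explicit registry means the model can only trigger functions the application has chosen to expose, which is one common way to bound what an autonomous agent is allowed to do.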

Technical Overview: xLAM Family

Salesforce’s xLAM family consists of various models, each optimized for specific tasks and computational environments:

- xLAM-1B-fc-r (“Tiny Giant”):
  - Parameters: 1.35 billion
  - Context Length: 16k tokens
  - Use Case: Ideal for on-device applications where larger models are impractical due to resource constraints. It is compact and highly efficient for running real-time tasks on devices with limited computational power.

- xLAM-7B-fc-r:
  - Parameters: 6.91 billion
  - Context Length: 4k tokens
  - Use Case: Designed for academic research and small-scale industry use. This model balances performance with GPU resource efficiency, making it suitable for organizations with limited hardware.

- xLAM-7b-r:
  - Parameters: 7.24 billion
  - Context Length: 32k tokens
  - Use Case: Built for industrial-grade applications, requiring extended context and high precision across longer documents and workflows.

- xLAM-8x7b-r:
  - Parameters: 46.7 billion
  - Context Length: 32k tokens
  - Use Case: Suitable for computationally intensive tasks, combining high throughput and accuracy for real-time business applications.

- xLAM-8x22b-r:
  - Parameters: 141 billion
  - Context Length: 64k tokens
  - Use Case: This model is built for resource-heavy tasks that demand the best possible performance for decision-making and workflow automation in large enterprises.
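To make the trade-off between model size and context length concrete, the specifications listed above can be put into a small lookup table. The sketch below is illustrative only: `ModelSpec` and `smallest_model_for` are hypothetical helpers, and "16k" and similar figures are treated as multiples of 1,000 tokens for simplicity.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelSpec:
    name: str
    params_billion: float
    context_tokens: int


# Figures copied from the list above; "k" is approximated as 1,000 tokens.
XLAM_MODELS: List[ModelSpec] = [
    ModelSpec("xLAM-1B-fc-r", 1.35, 16_000),
    ModelSpec("xLAM-7B-fc-r", 6.91, 4_000),
    ModelSpec("xLAM-7b-r", 7.24, 32_000),
    ModelSpec("xLAM-8x7b-r", 46.7, 32_000),
    ModelSpec("xLAM-8x22b-r", 141.0, 64_000),
]


def smallest_model_for(
    context_needed: int, max_params_billion: Optional[float] = None
) -> Optional[ModelSpec]:
    """Return the smallest listed model whose context window fits the request.

    Returns None when no listed model satisfies both constraints.
    """
    candidates = [
        m
        for m in XLAM_MODELS
        if m.context_tokens >= context_needed
        and (max_params_billion is None or m.params_billion <= max_params_billion)
    ]
    return min(candidates, key=lambda m: m.params_billion, default=None)


choice = smallest_model_for(20_000)
print(choice.name if choice else "no model fits")
```

Note that parameter count and context length do not move together in this family: the 1B model has a longer context window than the 7B function-calling variant, so a selection rule has to check both figures rather than assume that bigger means longer.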

Proven Performance

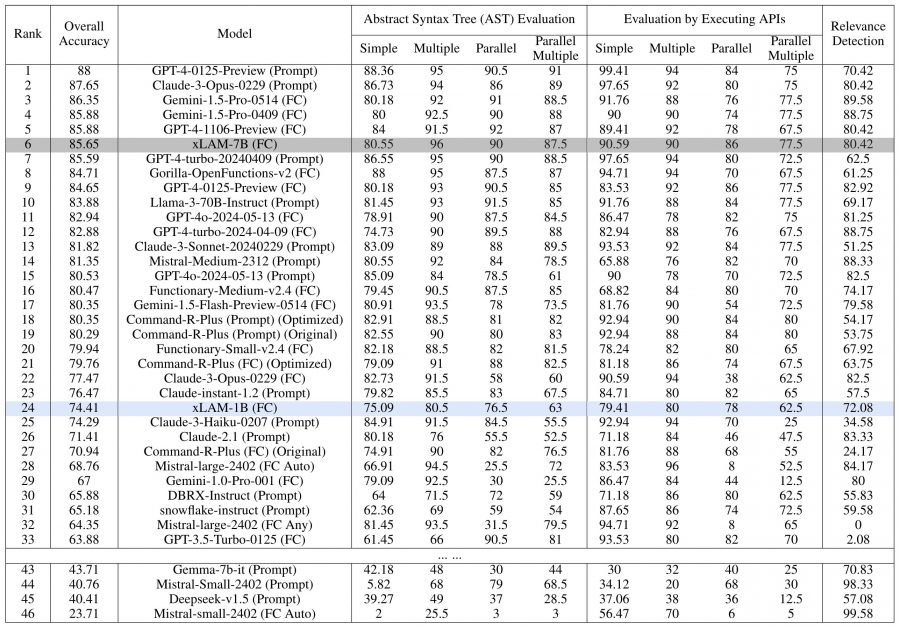

Salesforce has benchmarked these models against existing state-of-the-art models such as GPT-4. On the Berkeley Function Calling Leaderboard, the xLAM-7B(fc) model outperformed GPT-4 in function-calling tasks, a key area for CRM and business process automation. The result indicates that the models are well suited to tasks that require accurate, low-latency function execution.

Furthermore, Salesforce’s xLAM-1B, despite being compact, achieved top rankings in various function-calling tasks, showing that smaller models can outperform larger ones in specific domains such as tool usage, planning, and reasoning. This makes xLAM-1B particularly suitable for on-device AI assistants, which need real-time decision-making and responsiveness on devices with limited processing power, such as smartphones.

xGen-Sales: Redefining Sales Automation

Complementing the xLAM family, Salesforce has developed xGen-Sales, a model specifically fine-tuned for sales tasks such as customer insights, lead generation, and pipeline management. According to Salesforce’s internal benchmarks, xGen-Sales outperformed larger, more generalized models in automating repetitive sales tasks. The model is designed to enhance Agentforce, allowing the platform to autonomously coach sales representatives, nurture pipelines, and provide real-time guidance without requiring human intervention.

Why LAMs Matter for CRM

One of the key advantages of Large Action Models over traditional LLMs is their ability to handle function-calling, allowing them to directly interact with business systems and execute tasks such as updating CRM entries, managing customer interactions, and even modifying complex workflows. This introduces an unprecedented level of autonomy in CRM systems, automating time-consuming tasks and enabling employees to focus on strategic decision-making.

Moreover, these LAMs are built to work in multi-agent environments, which means multiple AI agents can collaborate on tasks, improving operational efficiency and scalability in real-time applications.

Availability and Open-Source Access

The xLAM models, including xLAM-1B, xLAM-7B, and xLAM-8x22B, are available for download on Hugging Face for open-source experimentation, enabling researchers and developers to access, fine-tune, and deploy these models across various business environments. This open-access approach is set to accelerate innovation in the field of AI-driven business automation, providing a foundation for real-world applications that require both high accuracy and real-time task execution.

Conclusion

Salesforce’s xLAM family marks a critical advancement in AI technology, offering a shift from content generation to real-time task execution. With proven performance metrics and a flexible open-source offering, these models provide a robust solution for businesses seeking to automate complex workflows, increase operational efficiency, and reduce the need for human intervention in decision-making processes. Through the introduction of xGen-Sales and xLAM, Salesforce is driving the future of AI-powered business automation, positioning itself as a leader in CRM and enterprise AI solutions.