Show-o2: Open-source 7B multimodal model outperforms 14B models on benchmarks using significantly less training data

26 June 2025

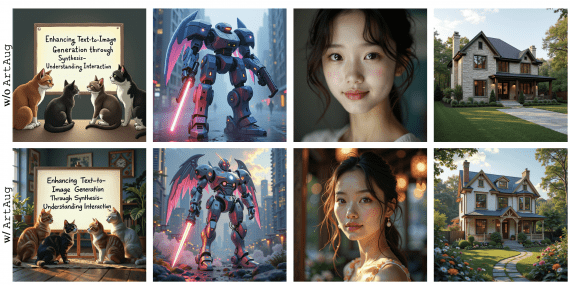

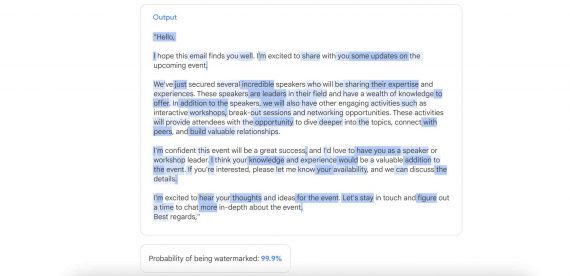

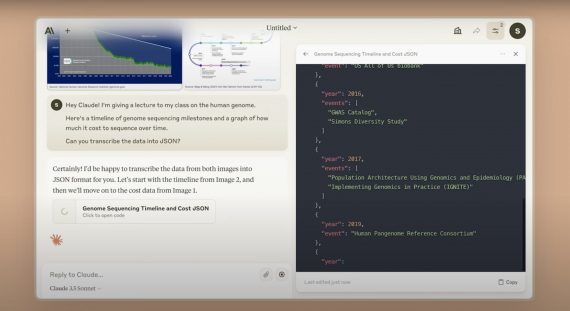

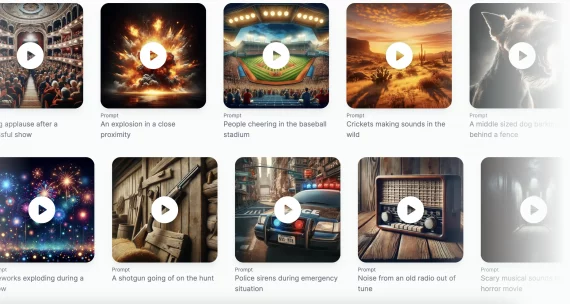

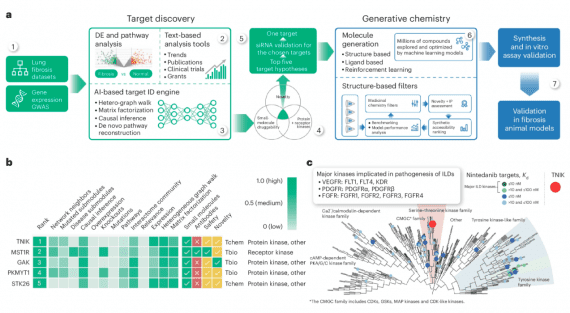

Researchers from Show Lab at the National University of Singapore and ByteDance have introduced Show-o2, a second-generation multimodal model that demonstrates superior results in image and video understanding and generation…