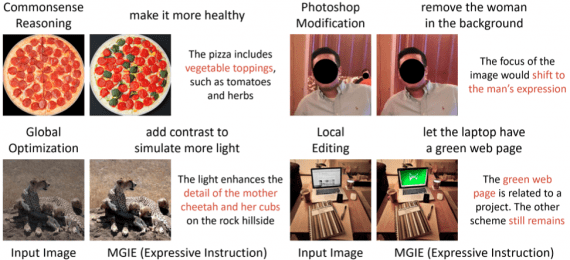

The image generation service Midjourney now lets users carry a character over into new images by including a link to an existing image of that character in the request. This makes the service usable for new types of content such as redraws, comics, and graphic novels.

Inconsistent output for the same query is a common drawback of existing diffusion-based text-to-image models and of language models. The ability to consistently recreate characters in new images is one of the most requested features of text-to-image models, as its absence significantly limits their practical usability.

Midjourney has addressed this issue by introducing a new tag, “--cref” (“character reference”), which users add to the end of their text queries in the Midjourney Discord, followed by the URL of an image. The model then attempts to transfer the character’s facial features, body type, and clothing from that image.

The tag works best with previously generated Midjourney images. In practice, the user first generates an image, or obtains the URL of a previously generated one, and then specifies that URL in requests for new images.

Users can control the “weight” with which the character is reproduced in the new image by adding the “--cw” (“character weight”) tag, followed by a number from 0 to 100. The lower the weight, the more the character will differ from the reference image, and vice versa. At a weight of 100, the model attempts to reproduce the facial shape, hairstyle, and clothing. At a weight of 0, it considers only the facial shape, which is useful for changing clothing or hairstyle.
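As a rough illustration, a prompt that combines a character reference with a weight could be assembled as in the sketch below. It assumes the double-hyphen parameter form (`--cref`, `--cw`) that Midjourney prompts use, and it accepts weights from 0 to 100 because the text describes behavior at both ends of that range. The function name `build_prompt` and the example URL are hypothetical and not part of any Midjourney API; the result is simply the text a user would paste into Discord.

```python
from typing import Optional


def build_prompt(text: str, cref_url: str, cw: Optional[int] = None) -> str:
    """Assemble a prompt string ending with a character reference.

    Hypothetical helper: it only formats text and does not call Midjourney.
    """
    # Assumed valid range for the character weight: 0 to 100 inclusive.
    if cw is not None and not 0 <= cw <= 100:
        raise ValueError("character weight must be between 0 and 100")

    # Parameters go at the end of the text query.
    parts = [text.strip(), "--cref", cref_url.strip()]
    if cw is not None:
        parts += ["--cw", str(cw)]
    return " ".join(parts)


# Placeholder URL; a real prompt would use the link to a generated image.
prompt = build_prompt(
    "a knight reading a map by candlelight",
    "https://example.com/character.png",
    cw=0,  # keep only the face, so clothing and hairstyle can change
)
print(prompt)
```

Leaving `cw` unset omits the weight tag altogether, so the service applies whatever weight it uses by default.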

To improve the accuracy of character transfer, several links to images of the same character can be supplied with “--cref”.
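A minimal sketch of how several reference images might be combined into one request follows. It assumes the links are listed after a single `--cref`, separated by spaces; that layout is an assumption to verify against Midjourney's own documentation. The helper name `cref_clause` and the URLs are hypothetical.

```python
from typing import Iterable


def cref_clause(urls: Iterable[str]) -> str:
    """Format one or more reference image URLs as a trailing prompt clause.

    Hypothetical helper; assumes space-separated URLs after one --cref.
    """
    # Drop empty entries and stray whitespace around each link.
    cleaned = [u.strip() for u in urls if u.strip()]
    if not cleaned:
        raise ValueError("at least one reference URL is required")
    return "--cref " + " ".join(cleaned)


# Placeholder URLs standing in for several images of the same character.
clause = cref_clause([
    "https://example.com/hero-front.png",
    "https://example.com/hero-side.png",
])
print("the hero crossing a rope bridge " + clause)
```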