ByteDance Unveils TA-TiTok Tokenizer, Achieving SOTA in Text-to-Image Generation Using Only Public Data

19 January 2025

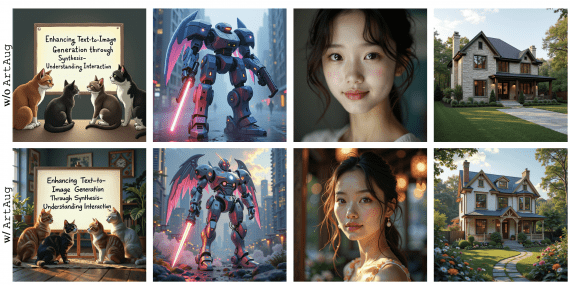

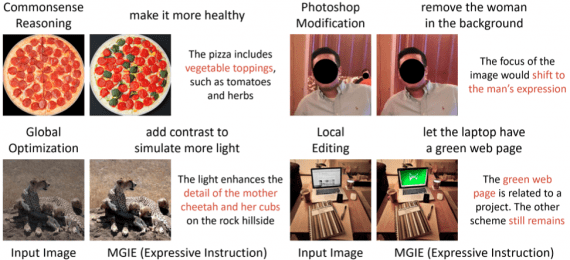

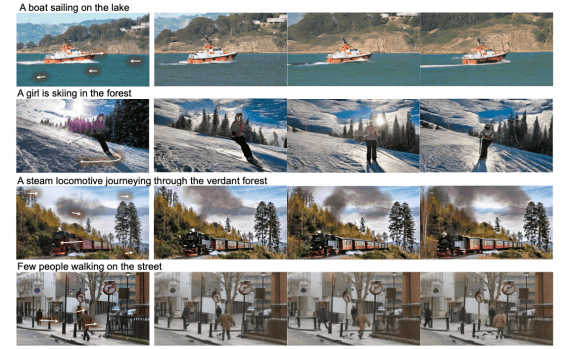

Researchers from ByteDance and POSTECH have introduced TA-TiTok (Text-Aware Transformer-based 1-Dimensional Tokenizer), a tokenizer designed to make text-to-image AI models more accessible and efficient. Their work demonstrates, through MaskGen models, how…