DeepMath-103K: Advancing AI Reasoning Through Challenge

21 April 2025

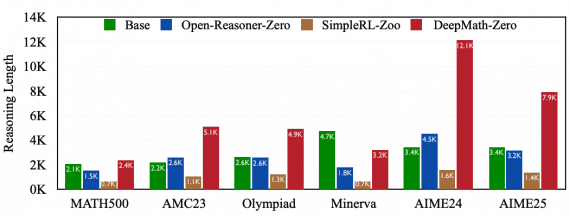

Mathematical reasoning is a crucial benchmark for artificial intelligence systems because it requires logical deduction, symbolic manipulation, and multi-step problem-solving. Recent breakthroughs in AI reasoning have been significantly driven by reinforcement…