Researchers from Sakana AI have introduced Adaptive Branching Monte Carlo Tree Search (AB-MCTS), a method for assembling “dream teams” of large language models that collaborate dynamically on complex tasks. On the challenging ARC-AGI-2 benchmark, a team of models coordinated this way solved more than 30% of the tasks evaluated, outperforming every model working alone. The method is implemented in the open-source framework TreeQuest, which is available for commercial use.

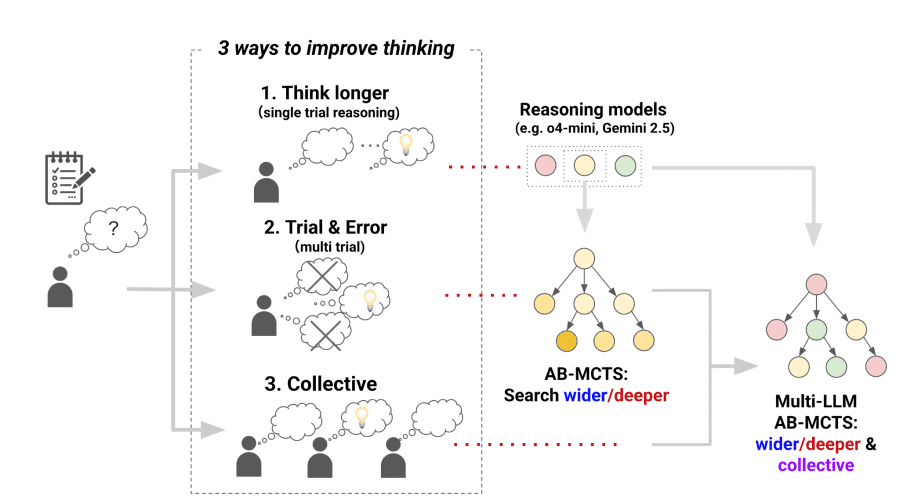

The Concept of Collective Intelligence

Each LLM has its own strengths and weaknesses: one model may excel at programming, another at creative writing. Researchers at Sakana AI treat these differences as a resource for building collective intelligence. As with human teams, the premise is that models working together can solve problems that none of them could solve alone.

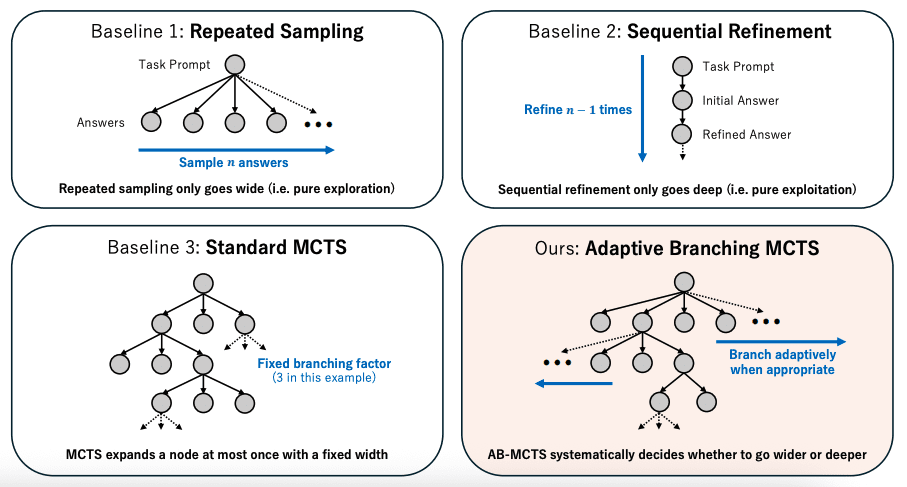

Problems with Traditional Approaches

Repeated sampling generates many independent candidates from the same prompt. It explores broadly but never uses feedback on earlier attempts to improve them.

Standard MCTS uses a fixed branching factor, which caps how widely the search can explore no matter how much compute is available.

Individual models cannot effectively combine diverse expert knowledge.
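The two extremes that AB-MCTS chooses between can be sketched as follows. This is a minimal illustration under stated assumptions: `generate` and `score` are hypothetical stand-ins for an LLM call and an answer evaluator. `generate(None)` means "draft an answer from scratch" and `generate(parent)` means "refine `parent`". Repeated sampling only goes wide, and pure sequential refinement only goes deep.

```python
from typing import Callable, Optional, TypeVar

A = TypeVar("A")  # type of a candidate answer


def repeated_sampling(
    generate: Callable[[Optional[A]], A], score: Callable[[A], float], budget: int
) -> A:
    """Go wide only: every call starts from scratch; earlier feedback is never reused."""
    candidates = [generate(None) for _ in range(budget)]
    return max(candidates, key=score)


def sequential_refinement(
    generate: Callable[[Optional[A]], A], score: Callable[[A], float], budget: int
) -> A:
    """Go deep only: every call after the first tries to refine the best answer so far."""
    best = generate(None)
    for _ in range(budget - 1):
        candidate = generate(best)
        if score(candidate) > score(best):
            best = candidate
    return best
```

Consider a toy generator that returns 1 when drafting and `parent + 1` when refining, with each answer scored by its value. With a budget of 5, `repeated_sampling` returns 1 and `sequential_refinement` returns 5. A generator whose refinements get stuck would favor the wide strategy instead, so neither fixed strategy is right for every task.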

AB-MCTS Architecture

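AB-MCTS supports unbounded branching in tree search without fixing the tree's width as a static hyperparameter. At each node, it adaptively decides whether to go wider by generating a new candidate answer, or to go deeper by refining an existing one.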

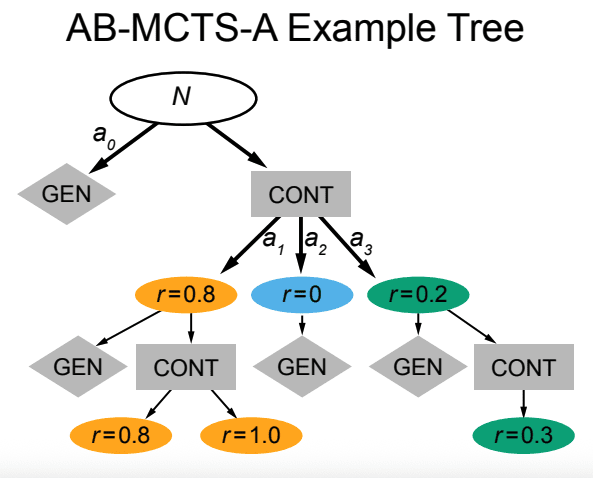

GEN-Nodes Mechanism

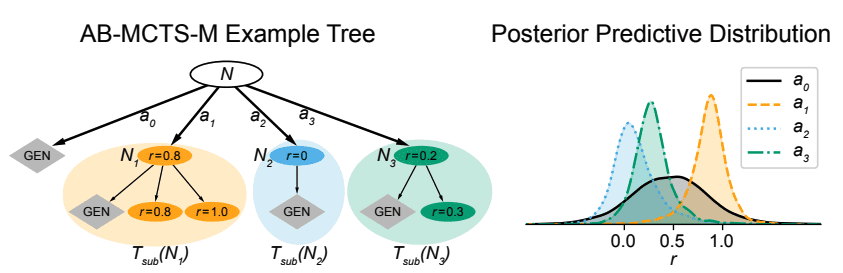

To represent the action of generating a new child, AB-MCTS uses GEN-nodes: every node N has a GEN-node as one of its children. When the GEN-node is selected, the search expands N by adding a new child node.
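The GEN-node idea can be sketched as a tree in which every node exposes its existing children plus one extra "generate" option. This is a minimal sketch, not Sakana AI's implementation. `Node`, `step`, and the `choose`/`generate` callbacks are hypothetical names, and the selection policy is left pluggable: AB-MCTS-M and AB-MCTS-A are two ways of filling it in.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable, Optional, Union

# Sentinel standing in for the GEN-node: "expand this node with a new child".
GEN = object()


@dataclass
class Node:
    answer: Optional[str]  # None for the root, which holds no answer yet
    score: float = 0.0
    children: list[Node] = field(default_factory=list)

    def options(self) -> list[Union[Node, object]]:
        # Real children plus one GEN option: the number of children can keep
        # growing, so no fixed branching factor is needed.
        return [*self.children, GEN]


def step(
    root: Node,
    choose: Callable[[list[Union[Node, object]]], Union[Node, object]],
    generate: Callable[[Node], Node],
) -> Node:
    """Walk down from `root` until GEN is chosen, then attach a new child there."""
    node = root
    while True:
        choice = choose(node.options())
        if choice is GEN:
            child = generate(node)  # fresh answer at the root, refinement elsewhere
            node.children.append(child)
            return child
        node = choice  # go deeper into an existing child
```

A policy that always picks GEN at the root (`lambda opts: opts[-1]`) keeps widening the root. A policy that always picks the first option builds a single deep chain. Real AB-MCTS sits between these two by scoring the options.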

Two Implementation Variants

AB-MCTS-M (Mixed Model) models score distributions with a Bayesian mixed-effects model. The subtree under each child node Nj is treated as a separate group with its own group-level effect. Parameters shared across groups capture answer-quality characteristics common to all of them.
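Below is a heavily simplified sketch of a mixed-model selection step. It rests on several assumptions:

- Scores follow a normal random-intercept model, score = α_j + noise, with α_j ~ N(μ_α, σ_α²).
- The two scales σ_α and σ_y are fixed constants. The method described above treats its parameters in a Bayesian way instead.
- The shared mean μ_α is estimated as the average of all observed scores, an empirical-Bayes shortcut.

Selection uses Thompson sampling, which is also an assumption of this sketch. Each child draws from its posterior, GEN draws from the shared prior as a brand-new group, and the highest draw wins. All names are hypothetical.

```python
import math
import random

GEN = "GEN"  # label for the "generate a new child" option


def group_posterior(scores, mu_alpha, sigma_alpha, sigma_y):
    """Normal posterior (mean, sd) of a group effect alpha_j given its scores.

    Assumes score = alpha_j + N(0, sigma_y^2) and alpha_j ~ N(mu_alpha, sigma_alpha^2).
    """
    precision = 1.0 / sigma_alpha**2 + len(scores) / sigma_y**2
    mean = (mu_alpha / sigma_alpha**2 + sum(scores) / sigma_y**2) / precision
    return mean, math.sqrt(1.0 / precision)


def select_mixed(groups, rng, prior_mean=0.5, sigma_alpha=0.2, sigma_y=0.1):
    """Thompson-sample one option at a node.

    `groups` maps each child id to the scores observed in that child's subtree.
    Returns a child id, or GEN to add a new child.
    """
    all_scores = [s for scores in groups.values() for s in scores]
    # Shared parameter: pooled mean across all subtrees (empirical-Bayes shortcut).
    mu_alpha = sum(all_scores) / len(all_scores) if all_scores else prior_mean

    # GEN is an unseen group, so its draw comes from the shared prior alone.
    best, best_draw = GEN, rng.gauss(mu_alpha, sigma_alpha)
    for child, scores in groups.items():
        mean, sd = group_posterior(scores, mu_alpha, sigma_alpha, sigma_y)
        draw = rng.gauss(mean, sd)
        if draw > best_draw:
            best, best_draw = child, draw
    return best
```

With `groups = {"a": [0.9, 0.95, 0.92], "b": [0.1, 0.05]}`, repeated calls mostly return `"a"`, sometimes `GEN`, and practically never `"b"`. The search keeps refining the promising subtree while still occasionally trying something new.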

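AB-MCTS-A (Node Aggregation) groups all existing child nodes under a single CONT-node, which represents refining existing solutions. At each node, the choice therefore reduces to GEN (generate a new answer) versus CONT (continue refining an existing one).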
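Because AB-MCTS-A reduces each decision to two options, GEN and CONT, Thompson sampling with a conjugate prior becomes straightforward. The sketch below assumes scores in [0, 1] and uses a Beta prior with a fractional Beta-Bernoulli update. The prior choice and the class and method names are assumptions for illustration. In the full method, choosing CONT is followed by a further selection among the existing children, which is omitted here.

```python
import random


class GenOrCont:
    """Per-node Thompson sampling between GEN (go wider) and CONT (go deeper).

    Assumes scores lie in [0, 1]; each action keeps a Beta(alpha, beta) posterior.
    """

    def __init__(self, prior_alpha: float = 1.0, prior_beta: float = 1.0):
        self.params = {
            "GEN": [prior_alpha, prior_beta],
            "CONT": [prior_alpha, prior_beta],
        }

    def choose(self, rng: random.Random, has_children: bool) -> str:
        if not has_children:
            return "GEN"  # nothing to refine yet, so the only option is to generate
        draws = {action: rng.betavariate(a, b) for action, (a, b) in self.params.items()}
        return max(draws, key=draws.get)

    def update(self, action: str, score: float) -> None:
        # Fractional Beta-Bernoulli update: a score of 0.7 adds 0.7 "successes"
        # and 0.3 "failures" to the chosen action's posterior.
        self.params[action][0] += score
        self.params[action][1] += 1.0 - score
```

If refinements (CONT) keep scoring well while fresh answers (GEN) score poorly, the CONT posterior shifts upward and the node is refined more often. The reverse pattern makes the tree grow wider.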

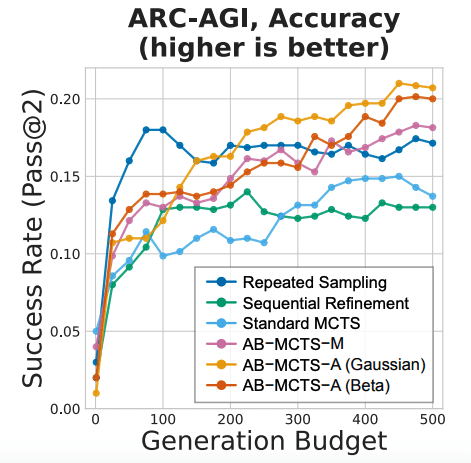

Experimental Results

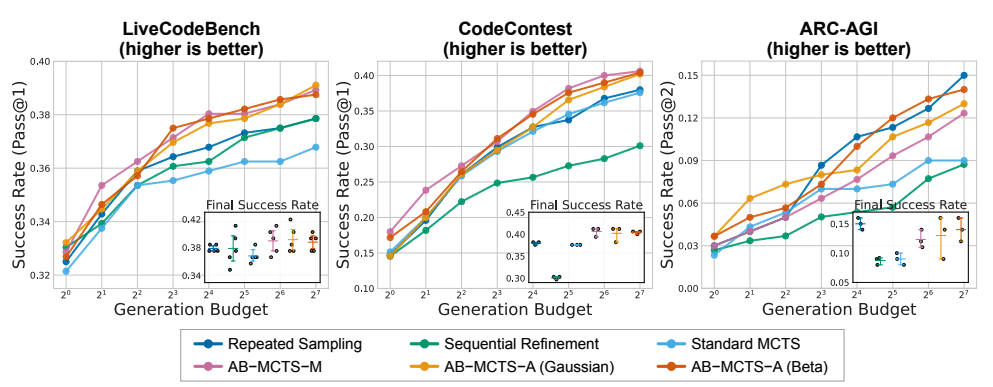

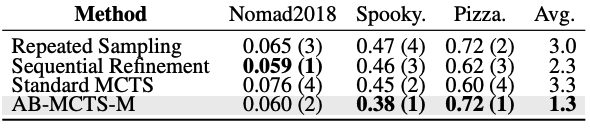

AB-MCTS was evaluated on four benchmarks (LiveCodeBench, CodeContests, ARC-AGI, and MLE-Bench) using GPT-4o and DeepSeek-V3, with a budget of 128 LLM calls.

Results

On LiveCodeBench, AB-MCTS achieves 39.1% versus 37.8% for repeated sampling, and on CodeContests 40.6% versus 37.9%. On ARC-AGI, it reaches a 15.0% success rate, and on MLE-Bench it achieves the best average rank among all methods compared.

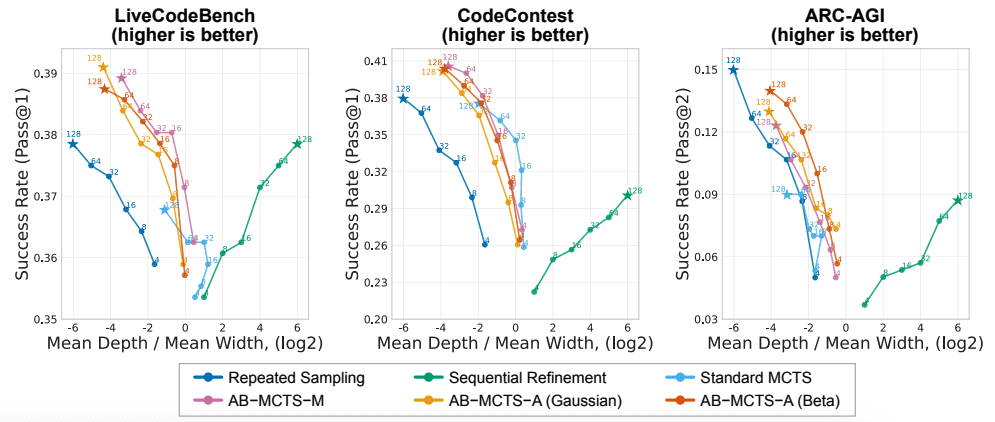

Search Behavior Analysis

AB-MCTS builds wider trees than standard MCTS because it adaptively decides when to expand a node with a new child, rather than being limited to a fixed branching factor.

When the budget is increased to 512 calls, AB-MCTS keeps improving, while repeated sampling plateaus.

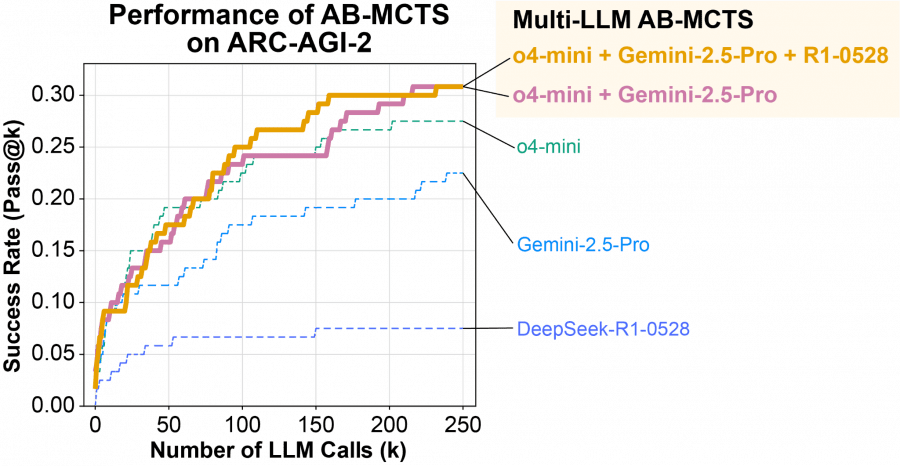

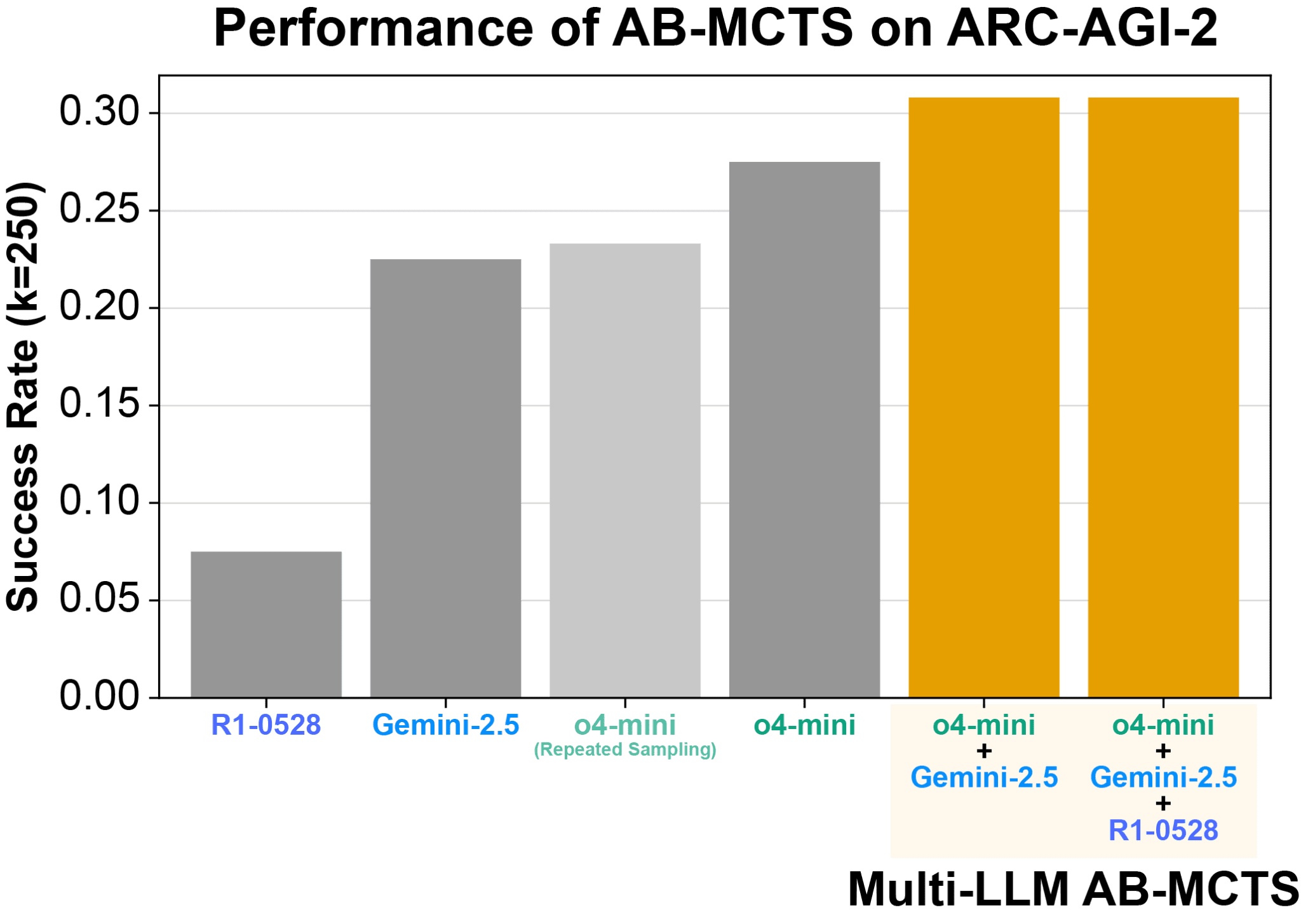

Multi-LLM: Creating AI Teams

Multi-LLM AB-MCTS adds a third decision to the search. Beyond choosing “what to do” (generate a new answer or refine an existing one), it also decides “which model should do it”.

Multi-LLM Operating Principle

At the start of a task, the system doesn’t know which model is best suited for the specific problem. It begins with balanced use of available LLMs and progressively learns each model’s effectiveness, redistributing workload to the most productive ones.
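The model-selection dimension can be illustrated on its own as a multi-armed bandit. One way to realize it, assumed here rather than taken from the source, is Thompson sampling. Each model keeps a Beta posterior over how often its answers score well, and the model with the highest sampled value gets the next call. Calls therefore start out spread across models and shift toward the best performers. The model names and success rates are hypothetical, the evaluations are simulated coin flips, and the wider-versus-deeper tree structure is left out.

```python
import random


def allocate_calls(success_rates, budget, seed=0):
    """Thompson sampling over models; returns how many calls each model received.

    `success_rates` maps a (hypothetical) model name to the simulated probability
    that one of its answers is judged good by the evaluator.
    """
    rng = random.Random(seed)
    posteriors = {name: [1.0, 1.0] for name in success_rates}  # Beta(1, 1) priors
    calls = {name: 0 for name in success_rates}
    for _ in range(budget):
        # Sample a plausible success rate per model and call the most promising one.
        draws = {name: rng.betavariate(a, b) for name, (a, b) in posteriors.items()}
        chosen = max(draws, key=draws.get)
        calls[chosen] += 1
        # Simulated evaluation of the chosen model's answer.
        good = rng.random() < success_rates[chosen]
        posteriors[chosen][0 if good else 1] += 1.0
    return calls


calls = allocate_calls({"model_a": 0.2, "model_b": 0.5, "model_c": 0.8}, budget=500)
print(calls)
```

With these simulated rates, most of the 500 calls end up going to `model_c`, while the weaker models still receive a few exploratory calls.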

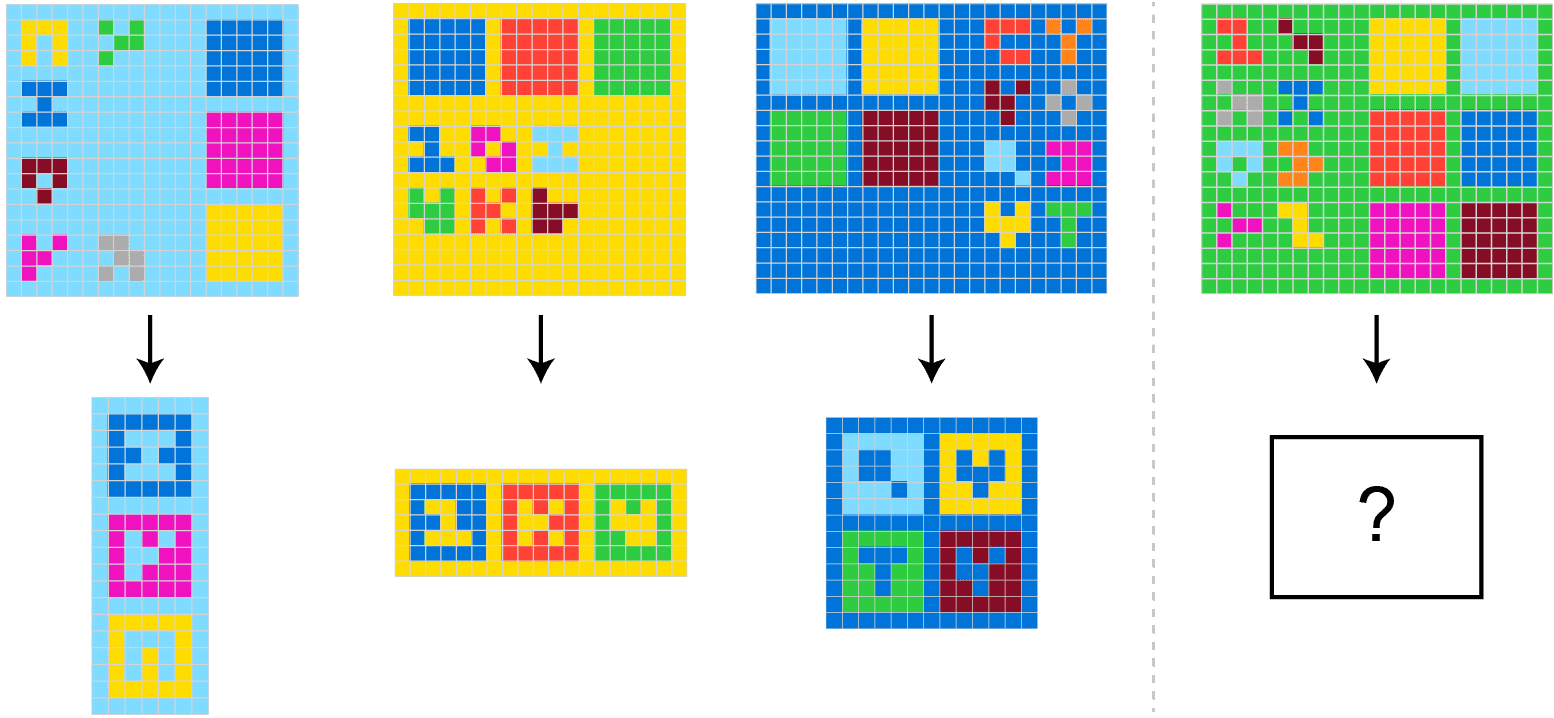

Breakthrough Results on ARC-AGI-2

The method was tested on ARC-AGI-2, one of the most challenging AI benchmarks, which requires human-like abstract reasoning about visual grid puzzles. The team used three frontier models:

- o4-mini

- Gemini 2.5 Pro

- DeepSeek-R1-0528

Working together, the models found correct solutions for more than 30% of the 120 test tasks, significantly outperforming any individual model.

Synergistic Collaboration

Most striking, the team solved tasks that no individual model could solve on its own. In one case, an incorrect solution from o4-mini served as a hint for DeepSeek-R1-0528 and Gemini 2.5 Pro, which analyzed the error, corrected it, and arrived at the right answer.
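The o4-mini example amounts to a refinement step in which the parent answer and the refined answer come from different models. Below is a minimal sketch, assuming the evaluator returns textual feedback. The `Attempt` record, the prompt wording, and the `call_model` callback are hypothetical and not part of TreeQuest or the method as published.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Attempt:
    model: str     # which LLM produced this answer
    answer: str
    feedback: str  # evaluator's description of what is wrong (may be empty)


def refinement_prompt(task: str, parent: Attempt) -> str:
    """Turn a (possibly wrong) earlier attempt into a hint for the next model."""
    return (
        f"Task:\n{task}\n\n"
        f"A previous attempt produced:\n{parent.answer}\n\n"
        f"Evaluator feedback:\n{parent.feedback}\n\n"
        "Find the mistake in the previous attempt and give a corrected solution."
    )


def refine_with(
    model: str, call_model: Callable[[str, str], str], task: str, parent: Attempt
) -> Attempt:
    # The refining model may differ from parent.model: this is where one model's
    # error can become another model's starting point.
    answer = call_model(model, refinement_prompt(task, parent))
    return Attempt(model=model, answer=answer, feedback="")
```

Because the prompt carries only the answer and the feedback, any model in the pool can pick up where another left off.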

TreeQuest: From Research to Practice

Sakana AI released TreeQuest as open source under the Apache 2.0 license, which permits commercial use. TreeQuest provides an API for implementing Multi-LLM AB-MCTS.

Practical Applications

Potential business applications of TreeQuest include:

- Iterative optimization: automatically searching for ways to improve the performance metrics of existing software

- Reducing the response latency of web services

- Improving the accuracy of machine learning models

- Solving complex algorithmic programming problems

Fighting Hallucinations

Combining models with different error tendencies can help reduce hallucinations, because one model's mistake may be caught or corrected by another. This matters for business applications, where reliability is critical.

Limitations and Future Directions

The approach requires a reliable evaluator of solution quality, which can be hard to build for some tasks. Future research may incorporate more detailed real-world cost factors beyond the number of API calls.
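For programming tasks, one common way to build such an evaluator is to score a candidate by the fraction of test cases it passes. This yields a value in [0, 1] that the search can compare across nodes. Below is a minimal sketch with hypothetical names. A real evaluator would also need sandboxing and timeouts, which this one omits.

```python
from typing import Any, Callable, Sequence, Tuple


def pass_rate(
    candidate: Callable[..., Any], tests: Sequence[Tuple[tuple, Any]]
) -> float:
    """Score a candidate function by the share of (args, expected) tests it passes.

    Exceptions count as failures. No sandboxing or time limits: illustration only.
    """
    if not tests:
        return 0.0
    passed = 0
    for args, expected in tests:
        try:
            if candidate(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash is just a failed test
    return passed / len(tests)
```

Consider the tests `[((2, 3), 5), ((0, 0), 0), ((-1, 1), 0), ((10, 5), 15)]`. A correct addition function scores 1.0, while a function that multiplies instead passes only the `(0, 0)` case and scores 0.25.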

By adaptively deciding between exploring new answers and refining existing ones, AB-MCTS offers a promising route to efficient inference-time scaling. It outperformed the baselines it was compared against across a diverse set of complex tasks.