MiniCPM4: Open Local Model Achieves Qwen3-8B Performance with 7x Inference Acceleration

15 June 2025

MiniCPM4: Open Local Model Achieves Qwen3-8B Performance with 7x Inference Acceleration

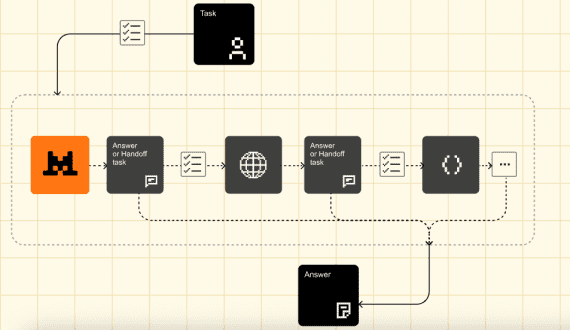

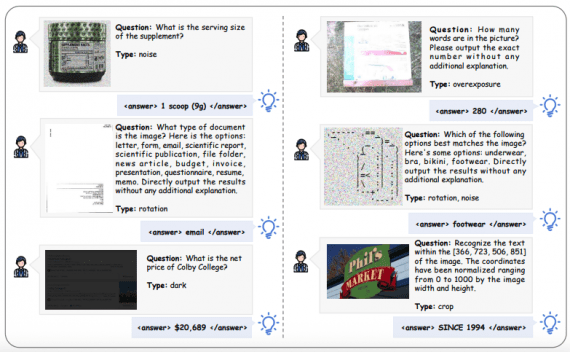

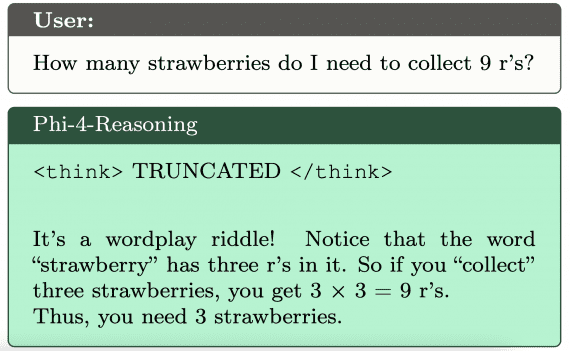

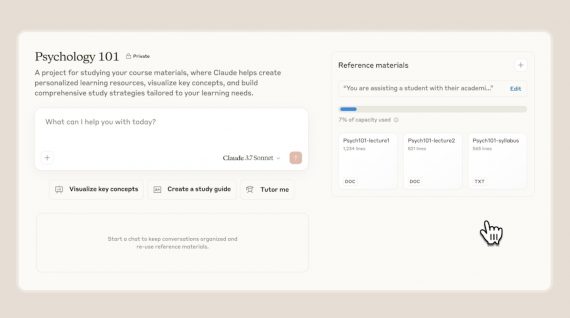

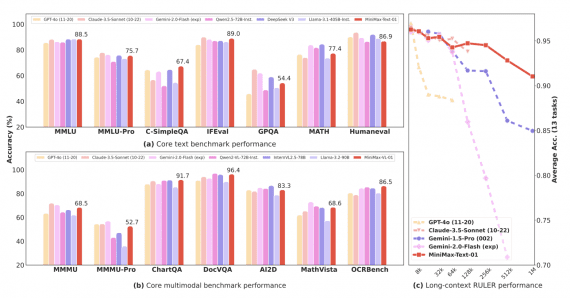

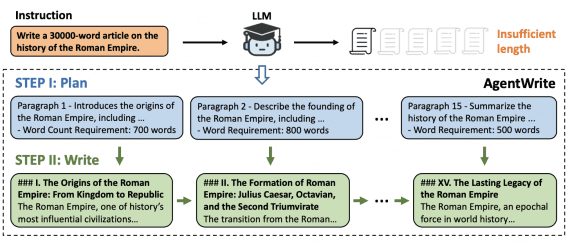

The OpenBMB research team presented MiniCPM4 — a highly efficient language model designed specifically for local devices. MiniCPM4-8B achieves comparable performance to Qwen3-8B (81.13 vs 80.55), while requiring 4.5 times…