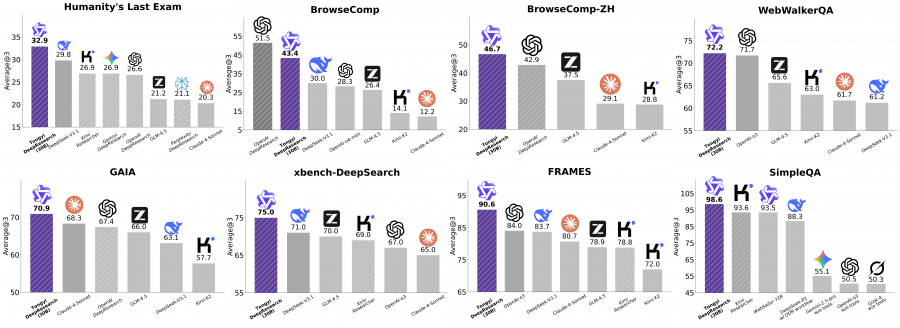

Researchers from Tongyi Lab (Alibaba Group) introduced WebWeaver, an open dual-agent framework for deep research that mimics the human research process. The framework consists of a planner, which iteratively alternates between searching the web for sources and refining the outline of the final report, and a content generator, which writes the report section by section, retrieving only the materials relevant to each one. WebWeaver achieves state-of-the-art performance on three benchmarks (DeepResearch Bench, DeepConsult, and DeepResearchGym), surpassing both proprietary systems (OpenAI DeepResearch, Gemini Deep Research) and open-source solutions. The model Tongyi-DeepResearch-30B-A3B, a version of Qwen3-30b-a3b-Instruct fine-tuned with WebWeaver, is available together with its weights on GitHub and HuggingFace under an open license.

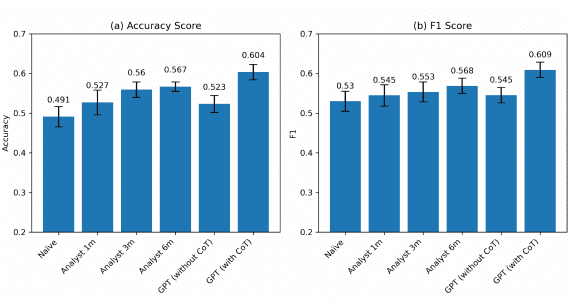

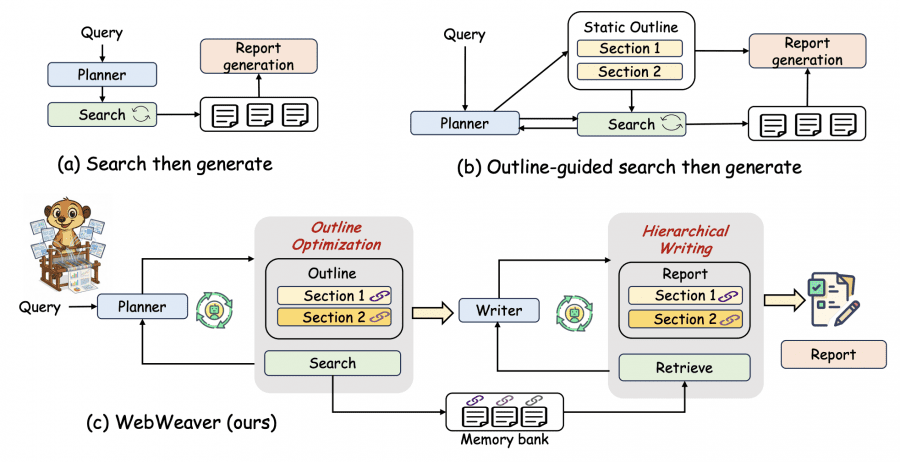

Existing approaches to open deep research fall into two camps, each with a critical limitation. The first is the “search-then-generate” approach, where the agent gathers all information before writing the report; with no outline to guide the writing, the resulting reports are of low quality. The second generates a static plan in advance and then performs targeted search for each section. However, a fixed outline relies solely on the LLM’s internal knowledge and hinders exploration in areas where the model lacks sufficient background.

Another challenge is feeding all collected materials into a single context for final generation. This leads to well-known long-context issues: mid-context information loss, hallucinations, and reduced accuracy. WebWeaver addresses these problems with a human-centered approach using dynamic structural optimization and hierarchical generation.

WebWeaver Architecture

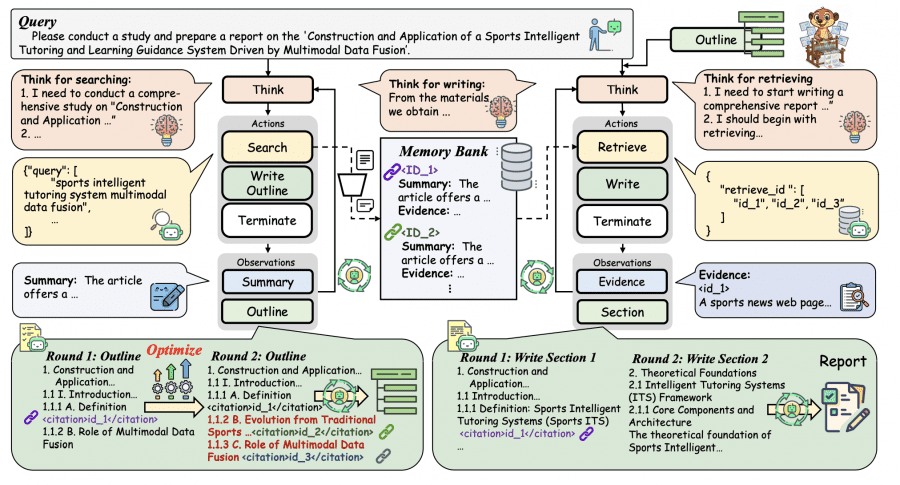

The framework consists of two specialized agents. The Planner works in a dynamic research cycle, iteratively alternating between data collection via web search and structure optimization. The outcome of this phase is not just a source collection but a comprehensive structure with explicit citations linking each section to the memory bank of sources.

When collecting data, the planner applies two-stage filtering: first, the language model selects relevant URLs based on titles and snippets; then, for each selected page, it extracts a short summary (for the planner's context) and detailed evidence (for the memory bank). Structural optimization is continuous: the planner expands sections, adds subsections, and restructures the plan as new information arrives.
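The planner cycle described above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: the names `FAKE_WEB`, `search`, `is_relevant`, `extract`, `research_step`, and `optimize_outline` are all hypothetical, the "web" is a hard-coded list, and simple keyword matching stands in for the LLM calls that the framework would use at both filtering stages and for outline restructuring.

```python
from dataclasses import dataclass, field


@dataclass
class Evidence:
    url: str
    summary: str  # short text that goes into the planner's context
    detail: str   # full supporting text that goes into the memory bank


@dataclass
class PlannerState:
    outline: dict[str, list[str]] = field(default_factory=dict)    # section -> citation ids
    memory_bank: dict[str, Evidence] = field(default_factory=dict)  # citation id -> evidence
    context: list[str] = field(default_factory=list)                # summaries only


# Toy stand-in for a search engine: (url, title, snippet, page text).
FAKE_WEB = [
    ("https://example.org/a", "Solar cost trends", "Module prices fell", "Detailed cost data ..."),
    ("https://example.org/b", "Cat pictures", "Cute cats", "Nothing relevant here."),
    ("https://example.org/c", "Solar grid integration", "Storage needs", "Detailed grid data ..."),
]


def search(query):
    # Assumption: a real system would call a search API here.
    return FAKE_WEB


def is_relevant(query, title, snippet):
    # Stage 1: judge relevance from title and snippet only (keyword match as an LLM stand-in).
    text = (title + " " + snippet).lower()
    return any(word in text for word in query.lower().split())


def extract(url, title, page_text):
    # Stage 2: produce a short summary plus detailed evidence (trivial stand-in for an LLM).
    return Evidence(url=url, summary=title, detail=page_text)


def research_step(state, query):
    """Run one search step and return the citation ids that were added."""
    new_ids = []
    for url, title, snippet, page_text in search(query):
        if not is_relevant(query, title, snippet):
            continue
        if any(ev.url == url for ev in state.memory_bank.values()):
            continue  # page already stored in the memory bank
        cid = f"src{len(state.memory_bank) + 1}"
        ev = extract(url, title, page_text)
        state.memory_bank[cid] = ev                  # detailed evidence
        state.context.append(f"[{cid}] {ev.summary}")  # only the summary reaches the planner
        new_ids.append(cid)
    return new_ids


def optimize_outline(state, new_ids):
    # Stand-in for LLM-driven restructuring: attach each new source to a section.
    for cid in new_ids:
        section = state.memory_bank[cid].summary
        state.outline.setdefault(section, []).append(cid)


state = PlannerState()
for query in ["solar"]:  # a real planner would choose its next query from the current outline
    optimize_outline(state, research_step(state, query))
```

The point of the split is that the planner's context holds only short summaries, while the outline carries citation ids that point at the full evidence in the memory bank.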

The Content Generator (writer) synthesizes outputs using the memory bank. For each section, it extracts only relevant evidence via citations, analyzes content through internal reasoning, and generates text. Once a section is complete, its materials are removed from context to prevent overflow and cross-section interference.
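A rough sketch of this section-by-section writing loop is shown below. It is an illustration under stated assumptions rather than the framework's code: `write_section` and `generate_report` are hypothetical names, string formatting stands in for the LLM's reasoning and writing, and the example outline and memory bank are made up.

```python
def write_section(title, evidence):
    # Stand-in for the LLM's reasoning and writing: cite every retrieved item.
    cited = "; ".join(f"{text} [{cid}]" for cid, text in evidence.items())
    return f"{title}: {cited}"


def generate_report(outline, memory_bank):
    """Write sections one at a time, loading only the evidence each section cites."""
    sections = []
    peak_context = 0  # largest number of evidence items held at once
    for title, citation_ids in outline.items():
        # Targeted retrieval: follow the outline's citation ids into the memory bank.
        context = {cid: memory_bank[cid] for cid in citation_ids}
        peak_context = max(peak_context, len(context))
        sections.append(write_section(title, context))
        context.clear()  # drop this section's materials before the next section
    return "\n".join(sections), peak_context


# Hypothetical planner output: an outline whose sections cite memory-bank entries.
outline = {"Costs": ["src1"], "Grid": ["src2", "src3"]}
memory_bank = {
    "src1": "Module prices fell",
    "src2": "Storage is needed",
    "src3": "Curtailment rises",
    "src4": "Unused page",
}

report, peak = generate_report(outline, memory_bank)
print(report)
```

In this toy run the writer never holds more than two evidence items at once, and the uncited entry `src4` never enters its context at all.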

Experimental Results

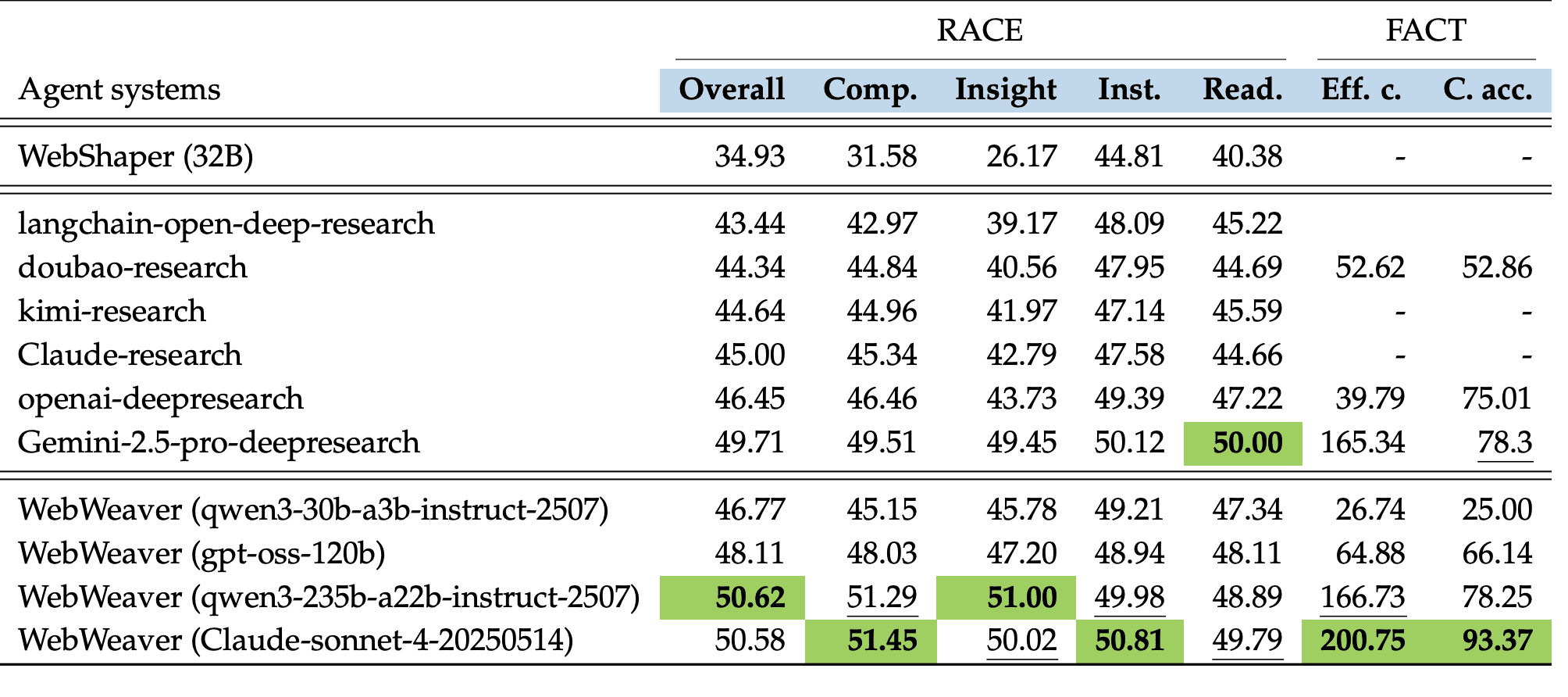

On DeepResearch Bench, WebWeaver scores 50.58 overall, compared with 49.71 for Gemini-2.5-pro-deepresearch and 46.45 for OpenAI DeepResearch. Citation accuracy stands out: 93.37% for WebWeaver versus 78.3% for Gemini and 75.01% for OpenAI. This accuracy stems from the way the two agents cooperate: the planner embeds citation identifiers in the outline, and the generator uses them during hierarchical synthesis to retrieve exactly the evidence each section needs.

Statistical analysis highlights the scale of the task: the planner performs about 16 search steps, runs more than 2 structural optimization cycles, and stores over 100 web pages with 67,000 tokens of evidence. The generator produces a 26,000-token report in roughly 25 discrete steps. These figures underline the need for an architecture with a central memory bank and targeted retrieval.
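A quick back-of-the-envelope calculation makes these figures more concrete. It takes the rounded numbers above at face value and assumes that the 67,000 tokens are the total evidence across all stored pages; since the article says "over 100" pages and "roughly 25" steps, the results are approximations only.

```python
pages = 100               # "over 100 web pages" (lower bound)
evidence_tokens = 67_000  # assumed to be the total across all pages
report_tokens = 26_000
writer_steps = 25         # "roughly 25 discrete steps"

# Average evidence kept per page (an upper bound, since there are more than 100 pages).
evidence_per_page = evidence_tokens / pages

# Average amount of report text produced per writing step.
report_per_step = report_tokens / writer_steps

# Tokens a single-pass writer would hold at the end: all evidence plus the full report.
single_pass_context = evidence_tokens + report_tokens

print(evidence_per_page, report_per_step, single_pass_context)
```

Under these assumptions each writing step produces about a thousand tokens, whereas a single-pass approach would end up with over 90,000 tokens of evidence and report text in one context.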

Distillation into Smaller Models

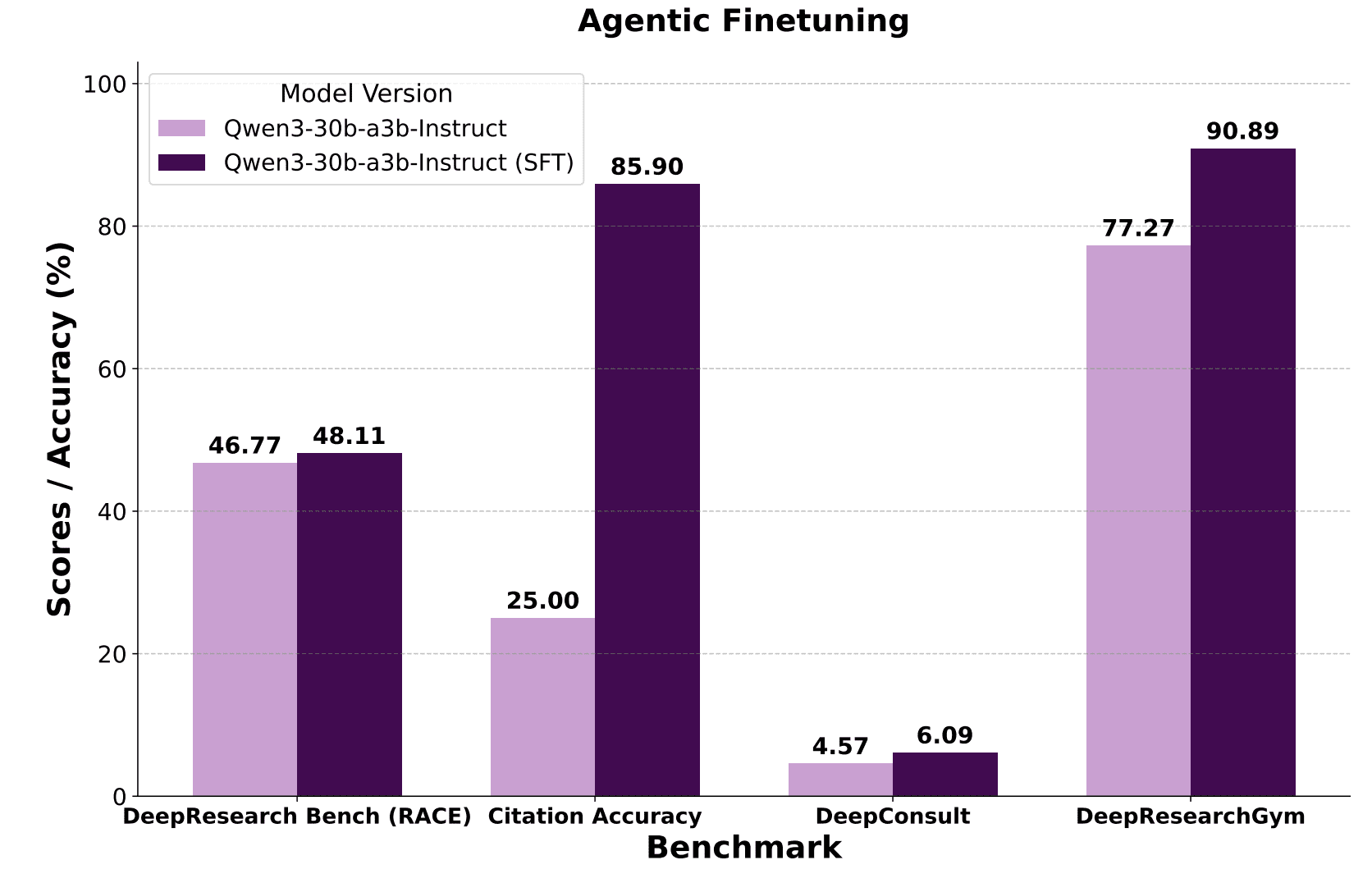

The researchers created the WebWeaver-3k dataset from 3.3k planning strategies and 3.1k generation strategies. Fine-tuning Qwen3-30b on this data improved citation accuracy from 25% to 85.90%, DeepConsult score from 4.57 to 6.09, and DeepResearchGym score from 77.27 to 90.89.

WebWeaver reframes long-context reasoning as a structured task of systematic information management carried out through a series of precise actions. Instead of passively processing everything in a single pass, the planner and generator use tools for dynamic research, structuring, and generation. This offers a blueprint for building agentic systems that acquire knowledge through actions rather than relying solely on attention mechanisms.