Joseph Suarez, a Stanford grad student, has released a project in which he tried to train a GAN to model a distribution of GANs.

In his short paper, “GAN You Do the GAN GAN?”, he describes his experiments and findings from attempting to model a distribution of Generative Adversarial Networks.

The objective is to use a GAN not to model images directly, but to model a distribution of GANs that themselves model images. As strange as the idea sounds, it provides useful insights into modeling with Generative Adversarial Networks, and it can be used as a tool to visualize and analyze a generative network’s decisions and outputs.

He calls the architecture GAN-GAN. The published work does not explore longer chains of this kind (such as GAN-GAN-GAN, etc.).

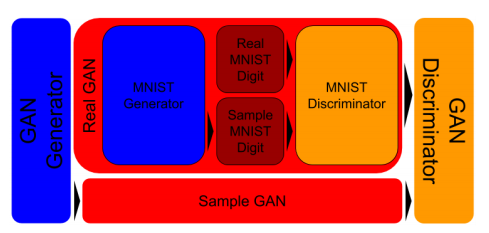

To model a distribution of GANs, he trained a set of GANs on MNIST data and saved a snapshot of the parameters at each epoch. He then trained a GAN-GAN architecture by treating each snapshot as an individual training example. The GAN-GAN architecture is shown in the picture above.
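The data preparation step described above can be sketched roughly as follows. This is a minimal illustration and not the author's code: the function names, the snapshot layout (a dict mapping parameter names to nested lists) and the toy values are all assumptions made for the example. It only shows how one parameter snapshot per epoch could be flattened into a fixed-length vector so that each snapshot becomes one training example for the outer GAN.

```python
from typing import Any, Dict, List


def flatten(values: Any) -> List[float]:
    """Flatten an arbitrarily nested list of numbers into one flat list."""
    if isinstance(values, (int, float)):
        return [float(values)]
    flat: List[float] = []
    for item in values:
        flat.extend(flatten(item))
    return flat


def snapshot_to_vector(snapshot: Dict[str, Any]) -> List[float]:
    """Concatenate every parameter of one snapshot in a fixed order.

    Sorting by parameter name keeps the layout identical across snapshots,
    so position i always refers to the same weight.
    """
    vector: List[float] = []
    for name in sorted(snapshot):
        vector.extend(flatten(snapshot[name]))
    return vector


def build_dataset(runs: List[List[Dict[str, Any]]]) -> List[List[float]]:
    """runs[r][e] is the (hypothetical) parameter snapshot of run r after epoch e.

    Every snapshot from every run becomes one training example.
    """
    return [snapshot_to_vector(snap) for run in runs for snap in run]


# Toy stand-in for saved snapshots: 2 training runs x 2 epochs of a tiny
# network with a 2x2 weight matrix "w" and a 2-element bias "b".
toy_runs = [
    [{"w": [[0.0, 0.1], [0.2, 0.3]], "b": [0.0, 0.0]},
     {"w": [[0.1, 0.2], [0.3, 0.4]], "b": [0.1, 0.1]}],
    [{"w": [[1.0, 1.1], [1.2, 1.3]], "b": [0.5, 0.5]},
     {"w": [[1.1, 1.2], [1.3, 1.4]], "b": [0.6, 0.6]}],
]

dataset = build_dataset(toy_runs)
print(len(dataset), "training examples of dimension", len(dataset[0]))
```

In a real setup the snapshots would come from a deep learning framework's saved weights rather than nested lists, and the resulting vectors would be far larger. Whether the snapshots should hold generator weights, discriminator weights, or both is not specified here and is left open in this sketch.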

The evaluation showed that the GAN-GAN architecture can produce GAN samples ordered by image quality. It also showed that GAN-GAN performs better than the MNIST-trained GANs.

The code for the experiments was open-sourced and can be found on GitHub. The short paper is available here.