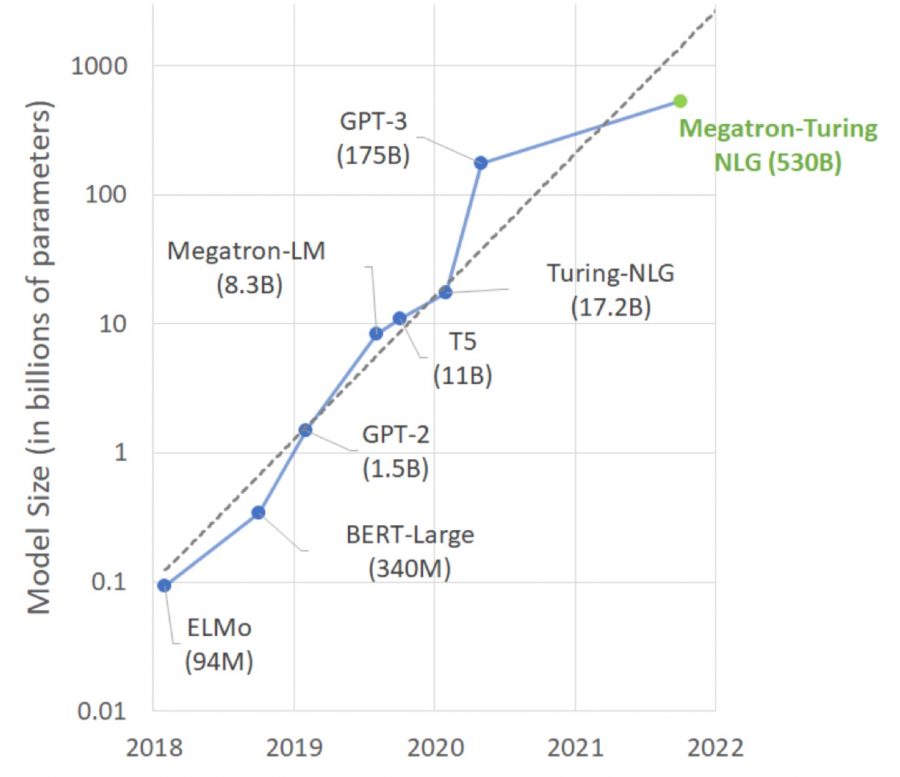

The MT-NLG (Megatron-Turing Natural Language Generation) language model, developed by Microsoft and NVIDIA, has 530 billion parameters, roughly three times as many as GPT-3 (175 billion). MT-NLG consists of 105 layers and, according to its developers, outperforms earlier natural language processing models on a range of benchmarks.

The model was trained on NVIDIA's Selene supercomputer, which consists of 560 DGX servers. Each server houses 8 A100 GPUs, and each GPU has 432 tensor cores and 80 GB of memory.
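To put these hardware figures in perspective, the short sketch below multiplies them out. The server, GPU, memory, and parameter counts come from the text; the 2 bytes per parameter (half-precision storage) is an assumption made only for illustration, and the function names are hypothetical.

```python
import math

# Figures quoted in the text.
SERVERS = 560
GPUS_PER_SERVER = 8
MEMORY_PER_GPU_GB = 80
TENSOR_CORES_PER_GPU = 432
PARAMETERS = 530e9

# Assumption (not from the text): weights stored in half precision, 2 bytes each.
BYTES_PER_PARAMETER = 2


def cluster_totals():
    """Aggregate GPU count, GPU memory, and tensor cores across the cluster."""
    gpus = SERVERS * GPUS_PER_SERVER
    return {
        "gpus": gpus,
        "gpu_memory_gb": gpus * MEMORY_PER_GPU_GB,
        "tensor_cores": gpus * TENSOR_CORES_PER_GPU,
    }


def weights_size_gb(parameters=PARAMETERS, bytes_per_parameter=BYTES_PER_PARAMETER):
    """Size of the raw weights in GB (1 GB = 1e9 bytes) under the stated assumption."""
    return parameters * bytes_per_parameter / 1e9


def min_gpus_for_weights():
    """Smallest number of GPUs whose combined memory can hold the weights alone."""
    return math.ceil(weights_size_gb() / MEMORY_PER_GPU_GB)


if __name__ == "__main__":
    print(cluster_totals())
    print(f"weights: {weights_size_gb():.0f} GB, at least {min_gpus_for_weights()} GPUs")
```

Under that assumption the weights alone exceed the memory of a single 8-GPU server, which is one reason a model of this size has to be split across many GPUs. Training needs considerably more memory than this estimate, since it also holds gradients, optimizer state, and activations.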

The training dataset, based on The Pile, was 1.5 TB in size and consisted of several hundred billion tokens of text drawn from 11 datasets, including Wikipedia and PubMed.

MT-NLG demonstrated record-high accuracy on the following kinds of tasks: completion prediction (predicting how a text continues from its meaning), reading comprehension, commonsense reasoning, natural language inference, and word sense disambiguation.

Notably, according to the developers, MT-NLG also demonstrated an understanding of basic mathematics. The developers also warned that the model exhibits the bias inherent in all language models.

Do you think there are other habitable planets in the universe?