A team of researchers from the Center for AI Safety and Scale AI published the Remote Labor Index (RLI) — the first benchmark that tests whether AI agents can perform real freelance work. They collected 240 actual projects from Upwork — ranging from game development to architectural drawings. Results show that even the most advanced AI agents complete only 2.5% of tasks at a level acceptable to clients. This is quite surprising, considering how well AI performs on other benchmarks. The project is partially open. Researchers published the evaluation platform code and 10 example projects on Github, so everyone can see how it works. However, the main test set of 230 projects is kept private — this is standard practice for benchmarks to prevent companies from “fitting” their models to specific tasks. If everything were published, models would memorize these projects, and the test would become useless.

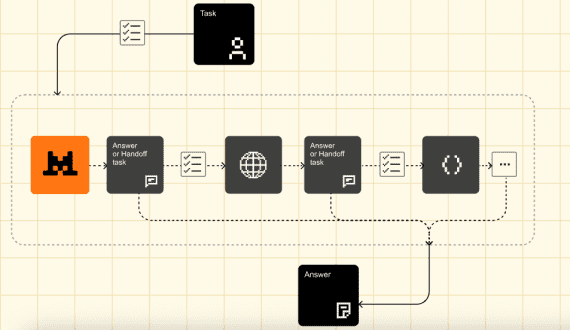

How the Benchmark Works

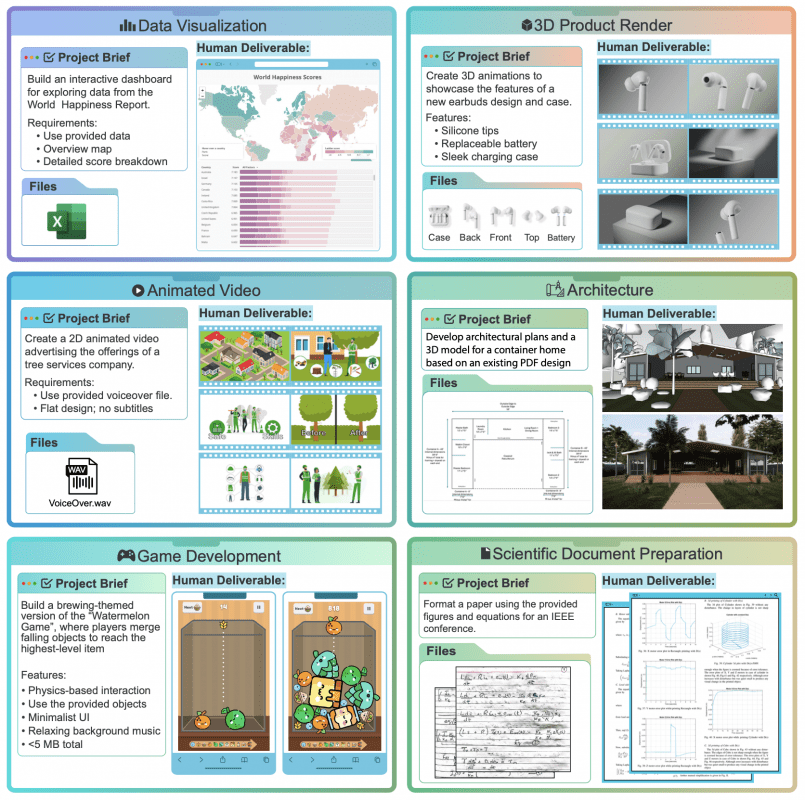

Unlike typical benchmarks where AI solves abstract problems, RLI uses real projects from freelance platforms. Researchers found freelancers on Upwork and paid them $15-200 for examples of already completed work. Each such project consists of three parts: the client’s brief, input files for work, and the finished result that the freelancer once delivered and got paid for.

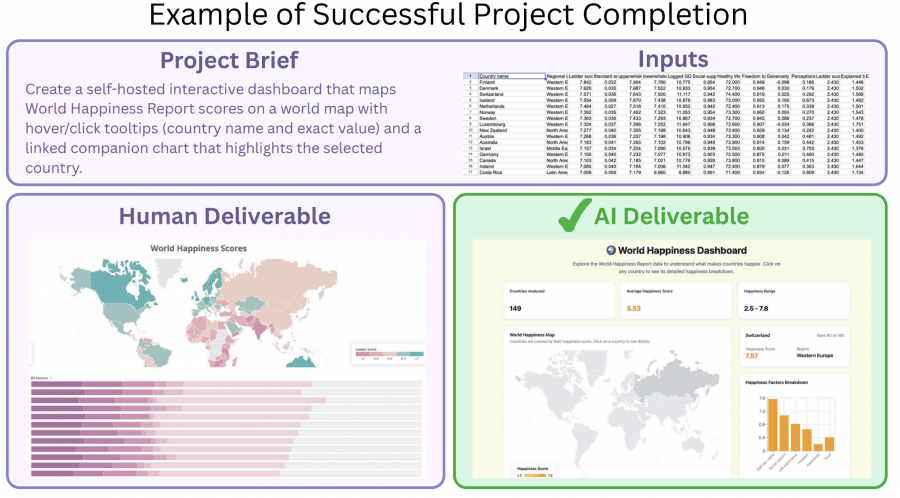

The data collection scheme was as follows: at some point, a real client gave a task to a freelancer, they completed the work, the client was satisfied and paid. Some time later, researchers bought a copy of this project from the freelancer. Now for testing, they give the same brief and the same files to AI and see if it can produce work of the same quality. The professional’s work serves as the “correct answer” — a market-validated quality bar that a real client deemed worthy of payment.

Researchers collected projects in two ways: 207 were purchased through regular orders from Upwork freelancers, and another 40 were added from rare work categories and found in the public domain (with authors’ permission). Projects cover 23 work categories: video and animation (13%), 3D modeling (12%), graphic design (11%), game development (10%), audio (10%), architecture (7%), and product design (6%). On average, a project costs $632, though half cost $200 or less, and completion takes about 29 hours (median — 11.5 hours). In total, all 240 projects represent over 6,000 hours of work valued at over $140,000.

AI Performance Results

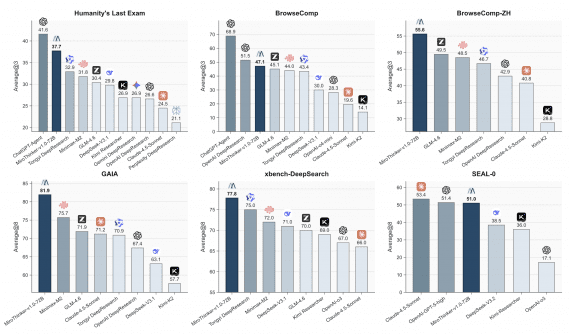

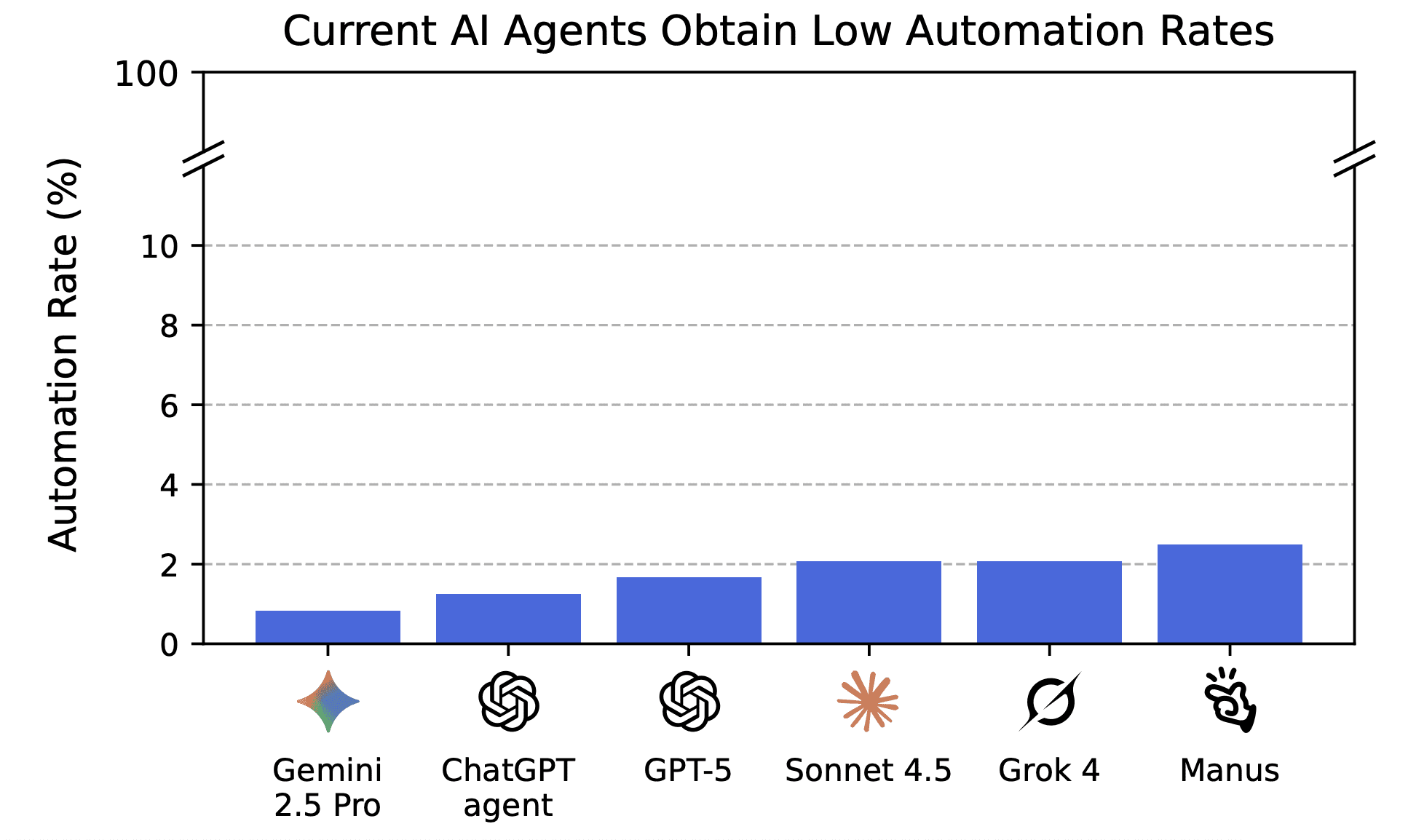

Six models were tested: Manus, Grok 4, Claude Sonnet 4.5, GPT-5, ChatGPT agent, and Gemini 2.5 Pro. Manus performed best — completing 2.5% of projects, followed by Grok 4 and Sonnet 4.5 at 2.1%. By Elo rating, Manus again leads with 509.9 points, but this is still far below the human baseline of 1000 points. A difference of 400 points roughly means a human will beat AI with a probability of 10 to 1.

Analysis of typical errors revealed several patterns. In 17.6% of cases, AI created corrupted or empty files. In 35.7%, work was incomplete — for example, an 8-second video instead of 8 minutes. In 45.6% of cases, quality was simply poor — primitive drawings using basic geometric shapes instead of professional graphics or robotic voices in voiceovers. And in 14.8% of cases, different parts of the work didn’t match each other — for example, a house looked different across various 3D renders.

Where AI Already Performs Well

AI successfully completed a small number of projects. These are mainly creative tasks involving audio and images, plus text writing and simple programming. Specifically: audio editing (creating sound effects for retro games, separating vocals from music, merging voiceovers with music), generating images for advertising and logos, writing reports, and creating interactive data dashboards. Here models showed results comparable to or even better than humans.

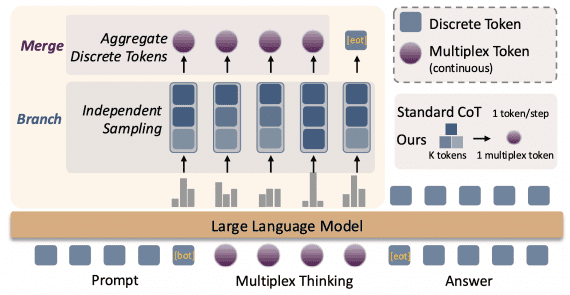

Many failures are related to AI’s inability to verify their own work. This is especially noticeable in projects requiring complex visual verification — architecture, game development, web development. A model can generate a 3D house or game but doesn’t understand when something works incorrectly or looks strange.

Why RLI Is Better Than Other Benchmarks

RLI differs significantly from other benchmarks. Projects here take on average twice as long as similar tests like HCAST and GDPval, matching real Upwork orders. Even more important is the distribution of work types. Existing benchmarks primarily test programming and research with writing, while in reality, freelance platforms have many other tasks: design, operations, marketing, administration, data processing, audio-video production.

In file format diversity, RLI is also ahead — 72 unique types versus 24 in GDPval: 3D models (.obj, .stl, .gltf), CAD drawings (.dwg, .dxf, .skp), design files (.psd, .ai), architectural formats (.rvt, .ifc), and lots of multimedia. The evaluation platform can open all these formats directly in the browser, including interactive 3D models and WebGL games.

Economics of Automation

Besides standard metrics, researchers created autoflation — how much cheaper it became to complete all test projects if using AI where it succeeds. By October 2025, this reduction reached 4%. Sounds small, but it’s just the beginning. They also calculated dollars earned — how much each AI “earned” on successfully completed projects. Leader Manus would have earned $1,720 out of a possible $143,991 if working as a freelancer.

Running AI on one project cost an average of $2.34 (with a $30 API limit), much cheaper than human work. Interestingly, project cost and completion time strongly correlate (0.785 on a logarithmic scale) — the more expensive the project, the longer it takes, which is logical. The cheapest project cost $9, the most expensive $22,500, and the distribution is close to log-normal, as in the real economy.

What’s Not Included in the Test

RLI doesn’t cover all remote work. Tasks requiring client communication (tutoring), teamwork (project management), or waiting for results (SEO promotion) were excluded. Project prices are historical, without inflation adjustment — this work actually costs more now. And even if AI completes all 240 projects perfectly, it doesn’t mean it can handle all types of remote work that exist.

Main conclusion: despite AI performing excellently on academic tests of knowledge and logic, they’re still very far from real freelance work. RLI provides concrete numbers for tracking progress, and these numbers will help companies and governments prepare for how AI will change the labor market. The dataset will be expanded with new projects, and the methodology adapted to improving models.