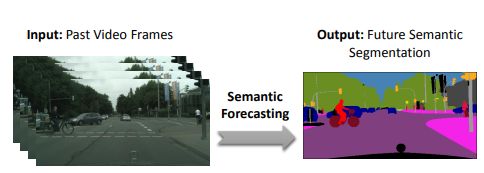

A novel method proposed by researchers at Stanford University encodes RGB frames from a video and predicts the semantic segmentation of future frames.

In the past, researchers have tackled a wide variety of prediction problems, such as predicting pixels, activities, trajectories, and video frames. Most existing methods encode a specific data type and predict a future sequence of the same type. In their paper “Segmenting the Future”, researchers from Stanford propose an interesting approach that uses past video frames to predict the semantic segmentation of future frames.

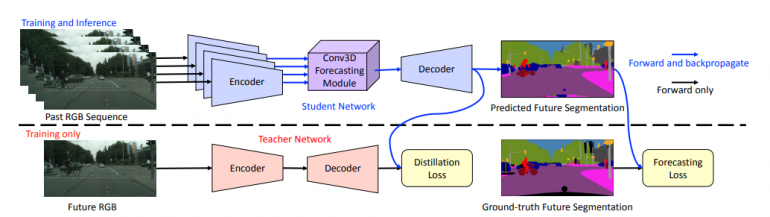

The proposed architecture encodes a sequence of RGB frames and implicitly models the future semantic segments of the scene. It follows a teacher-student design, in which the teacher network guides the learning process of the student network, which performs the semantic segmentation forecasting.

The teacher network is an encoder-decoder network that takes the future RGB frames as input. It provides an additional supervision signal through a distillation loss computed between the outputs of the student and teacher networks.
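As a rough illustration of the distillation term, the sketch below computes a mean-squared error between the student's output and the teacher's output for the same future frame. Representing both outputs as flat lists of scores and the name `distillation_loss` are hypothetical simplifications for this example; the real networks compare multi-dimensional outputs, and this is not the authors' code.

```python
from typing import Sequence


def distillation_loss(student_out: Sequence[float], teacher_out: Sequence[float]) -> float:
    """Mean-squared error between student and teacher outputs.

    Both arguments are flat sequences of scores for the same future frame
    (a hypothetical simplification of the real network outputs).
    """
    if len(student_out) != len(teacher_out) or not student_out:
        raise ValueError("outputs must be non-empty and have the same size")
    squared_errors = [(s - t) ** 2 for s, t in zip(student_out, teacher_out)]
    return sum(squared_errors) / len(squared_errors)


# Toy example: the student forecasts from past frames, the teacher sees the future frame.
student = [0.2, 0.8, 0.5, 0.1]
teacher = [0.0, 1.0, 0.5, 0.3]
print(distillation_loss(student, teacher))
```

The loss is zero only when the student reproduces the teacher's output exactly, so minimizing it pushes the student, which never sees the future frames, toward what the teacher computes from them.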

The student network, on the other hand, consists of an encoder, a forecasting unit, and a decoder. The overall training loss is the weighted sum of a cross-entropy forecasting loss and a mean-squared-error distillation loss.
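A minimal sketch of the combined objective follows, assuming the student produces per-pixel class logits for the future frame and the distillation term compares those logits with the teacher's logits. Both assumptions, the function names, and the default weights `ce_weight` and `distill_weight` are hypothetical; the article only states that the loss is a weighted sum of the two terms.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    # Subtract the maximum logit for numerical stability.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forecasting_ce(pixel_logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean per-pixel cross-entropy between predicted logits and ground-truth class ids."""
    losses = [-math.log(softmax(logits)[y]) for logits, y in zip(pixel_logits, labels)]
    return sum(losses) / len(losses)


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean-squared error between two equally sized flat sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def total_loss(
    student_logits: Sequence[Sequence[float]],
    teacher_logits: Sequence[Sequence[float]],
    labels: Sequence[int],
    ce_weight: float = 1.0,      # hypothetical weight, not taken from the paper
    distill_weight: float = 0.5,  # hypothetical weight, not taken from the paper
) -> float:
    # Flatten the per-pixel logits so the distillation term compares every score.
    s_flat = [v for row in student_logits for v in row]
    t_flat = [v for row in teacher_logits for v in row]
    # Weighted sum of the forecasting (cross-entropy) and distillation (MSE) terms.
    return ce_weight * forecasting_ce(student_logits, labels) + distill_weight * mse(s_flat, t_flat)


# Two pixels, three classes: student forecast, teacher output, and ground-truth labels.
student = [[2.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
teacher = [[3.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
labels = [0, 2]
print(total_loss(student, teacher, labels))
```

The cross-entropy term ties the forecast to the annotated future segmentation, while the distillation term adds a dense signal from the teacher for every pixel; the relative weight controls how strongly the student imitates the teacher.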

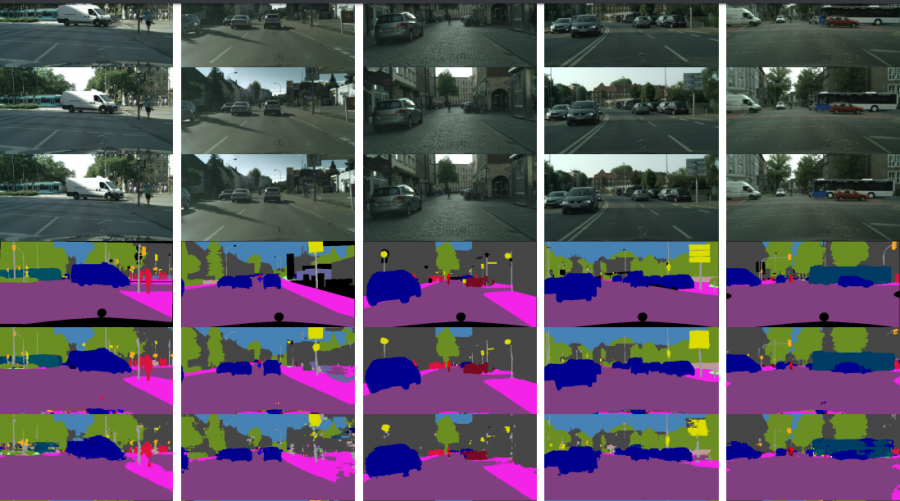

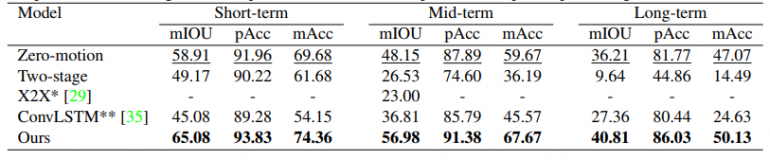

To evaluate the method, the researchers use the Cityscapes dataset, which provides pixel-wise annotations for only some of the frames. Since this is the first attempt to predict future segmentation masks directly from past RGB frames with a single end-to-end model, the researchers evaluate it by training different models on the same task. They experiment with X2X, ConvLSTM, and other architectures to learn the mapping between input and output defined in their problem setting.

The researchers report that the proposed method outperforms both the defined baseline and the current state-of-the-art method. The implementation has been open-sourced and is available on GitHub.