Google has introduced MUM, a neural network that answers users' complex, multi-step questions. According to Google, the network is 1,000 times more powerful than BERT, and it is trained across 75 languages at once.

To get an answer to a complex question, users often have to run several searches. For example, to find out how to prepare for a mountain hike, you need to look up the height of the mountain, the average air temperature, the difficulty of the hiking trails, the right equipment, and much more. MUM (“Multitask Unified Model”) addresses this by gathering the information needed for an answer along several lines at once and returning a single response. According to Google, people issue about eight queries on average for complex tasks like this one, and MUM is meant to reduce that number. Like Google's BERT, the neural network is built on the Transformer architecture, but it is 1,000 times more powerful.

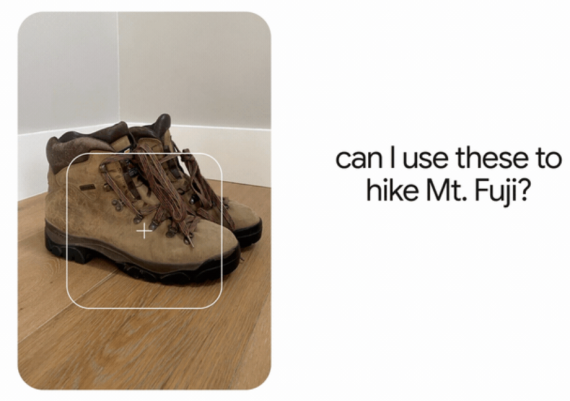

MUM is trained across 75 languages, which improves the quality of its answers: you can ask a question in one language, and the neural network will also search materials in other languages to find information that may not be available in the language of the query. The neural network is also multimodal, meaning it can accept queries that combine text and images. For example, if you take a photo of your hiking boots and ask, “Can I use these to hike Mount Fuji?”, MUM will connect the image with the text of the question and provide an answer. In the future, Google plans to extend the model to queries that include audio and video recordings. MUM can also find information not only in text but also in images and videos.

In addition to MUM, Google has introduced LaMDA, a neural network for next-generation conversational assistants. Unlike competing models, it does not need to be retrained to hold a dialogue on a new topic, so LaMDA can immediately carry on a conversation about virtually any subject. The tool is still under development, but in the future it could be used to build dialogue applications. To make LaMDA's answers sound more natural, Google is working on making them insightful, witty, and surprising without compromising factual accuracy.