Scientists have found that natural language processing models work in ways that resemble brain activity during language comprehension. In particular, predicting the next word from the words already entered, a technique widely used in search suggestions, appears to be a key part of how the human brain processes language.

How the brain processes language is one of the most studied topics in neuroscience. A new study demonstrates how artificial intelligence algorithms that were not originally designed to simulate the brain can help explore this area.

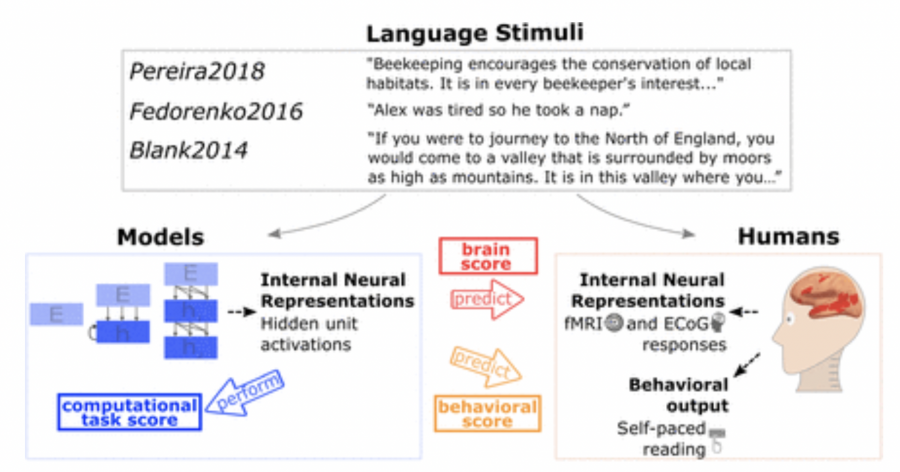

The researchers compared 43 machine learning language models, among them OpenAI’s GPT-2, which is optimized to predict the next word in a text, with brain scan data showing how biological neurons respond when a person reads text or hears speech.
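To make the idea of next-word prediction concrete, here is a minimal sketch of a toy predictor that simply counts which word most often follows another. It is not how GPT-2 or any model in the study works; the function names `train_bigram` and `predict_next` and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        follows[prev][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if none was seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]


# Hypothetical toy corpus, used only to show the mechanics.
corpus = "the brain reads the text and the brain hears the speech".split()
model = train_bigram(corpus)
print(predict_next(model, "the"))  # "brain" follows "the" most often here
```

Real language models replace these raw counts with learned internal representations, and it is those internal representations that the study compares with brain activity.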

To do this, the researchers fed words into each model and recorded the responses of its nodes. They then compared these responses with neural activity measured by fMRI or electrocorticography while people performed various language processing tasks.
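The comparison step can be sketched roughly as follows. The article does not say which similarity measure the study used, so this example swaps in a plain Pearson correlation as a simple stand-in. All numbers, the node names, and the helper `best_matching_node` are invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant signal says nothing about similarity
    return cov / math.sqrt(var_x * var_y)


def best_matching_node(node_activations, neural_response):
    """Return (node_name, correlation) for the node that tracks the recording best."""
    scores = {name: pearson(acts, neural_response)
              for name, acts in node_activations.items()}
    best = max(scores, key=scores.get)
    return best, scores[best]


# Made-up data: one value per word of a five-word stimulus.
node_activations = {
    "node_a": [0.1, 0.4, 0.35, 0.8, 0.9],
    "node_b": [0.9, 0.2, 0.7, 0.1, 0.5],
}
neural_response = [1.0, 2.1, 1.9, 3.8, 4.2]  # hypothetical recorded signal

name, score = best_matching_node(node_activations, neural_response)
print(name, round(score, 3))
```

A higher correlation means the node's activity rises and falls with the recorded neural signal across the same words. A real analysis would involve many nodes, many recording sites, and held-out data.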

In the models that were best at predicting the next word, node activity turned out to resemble the activity patterns of neurons in the human brain.