A team of NVIDIA researchers presented Nemotron-Nano-9B-v2 — a hybrid Mamba-Transformer language model that generates responses 6 times faster than Qwen3-8B on reasoning tasks while exceeding it in accuracy. The model with 9 billion parameters was compressed from the original 12 billion specifically for operation on a single NVIDIA A10G GPU. Nemotron-Nano-9B-v2 is based on the Nemotron-H architecture, where most self-attention layers of the traditional Transformer architecture are replaced with Mamba-2 layers to increase inference speed when generating long reasoning traces.

The base model Nemotron-Nano-12B-v2-Base was pre-trained on 20 trillion tokens using FP8 training format. After alignment, developers applied the Minitron strategy for model compression and distillation so it could process input sequences up to 128 thousand tokens on a single NVIDIA A10G GPU (22 GB memory, bfloat16 precision). Compared to models of similar size, Nemotron-Nano-9B-v2 achieves comparable or better accuracy on reasoning benchmarks while increasing throughput up to 6 times in scenarios with 8k input and 16k output tokens.

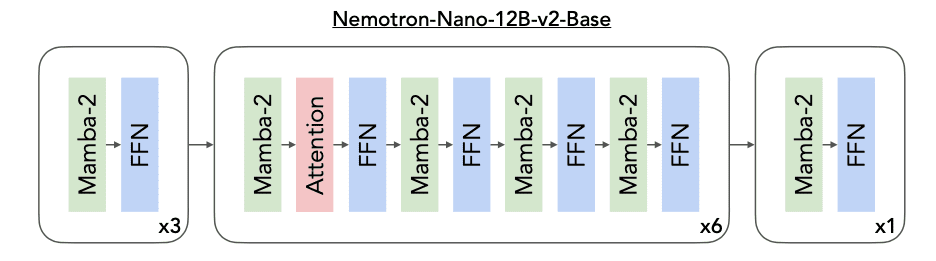

NVIDIA Nemotron Nano 2 Model Architecture

Nemotron-Nano-12B-v2-Base architecture: combination of Mamba-2, self-attention and FFN layers (Figure 1, page 3)

Nemotron-Nano-12B-v2-Base uses 62 layers, of which 6 are self-attention layers, 28 are FFN, and 28 are Mamba-2 layers. Hidden dimension is 5120, FFN intermediate dimension is 20480, Grouped-Query Attention with 40 query heads and 8 key-value heads. For Mamba-2 layers, 8 groups are used, state dimension 128, head dimension 64, expansion factor 2 and convolution window size 4.

Like Nemotron-H, the model does not use positional embeddings, applies RMSNorm normalization, separate weights for embedding layer and output layer, does not use dropout and bias weights for linear layers. For FFN layers, squared ReLU activation is applied.

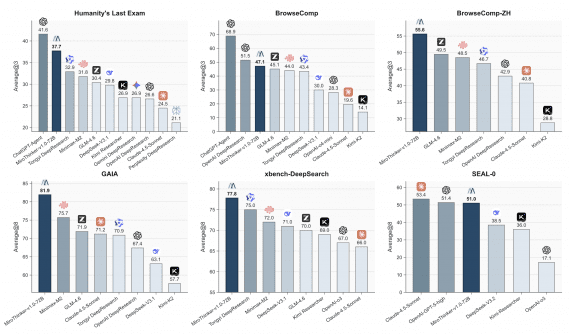

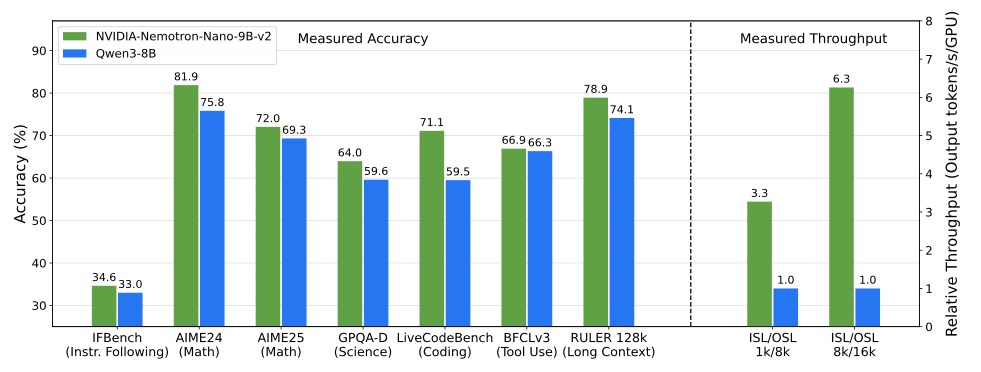

Comparison of Nemotron Nano 2 and Qwen3-8B in accuracy and throughput across various benchmarks (Figure 2, page 5)

Pre-training

Nemotron-Nano-12B-v2-Base was trained on a corpus of curated and synthetically generated data. Mathematical data was obtained through the new Nemotron-CC-Math-v1 pipeline with 133 billion tokens from Common Crawl using the Lynx + LLM pipeline. The pipeline preserves equations, standardizes to LaTeX and outperforms previous mathematical datasets on benchmarks.

Code was obtained from GitHub repositories through multi-stage filtering, deduplication and quality filters. Code Q&A data in 11 programming languages was included.

Model Alignment

After pre-training, the model underwent post-training through a combination of supervised fine-tuning (SFT), group relative policy optimization (GRPO), direct preference optimization (DPO) and reinforcement learning from human feedback (RLHF). Multiple SFT stages were applied across various domains, followed by targeted SFT on key areas: tool use, long context performance and truncated training.

GRPO and RLHF improved instruction following and dialogue capabilities, while additional DPO stages further enhanced tool use. Post-training was performed on approximately 90 billion tokens.

Model Compression

For operation on a single NVIDIA A10G GPU, the model was compressed using the Minitron strategy. Depth reduction was applied (removing 6-10 layers from the original 62-layer architecture) combined with width reduction of embedding channels (4480-5120), FFN dimension (13440-20480) and Mamba heads (112-128).

The final architecture uses 56 layers with 4 attention layers, embedding channels reduced from 5120 to 4480, and intermediate FFN size from 20480 to 15680. This achieved compression from 12B to 9B parameters while maintaining performance.

Results

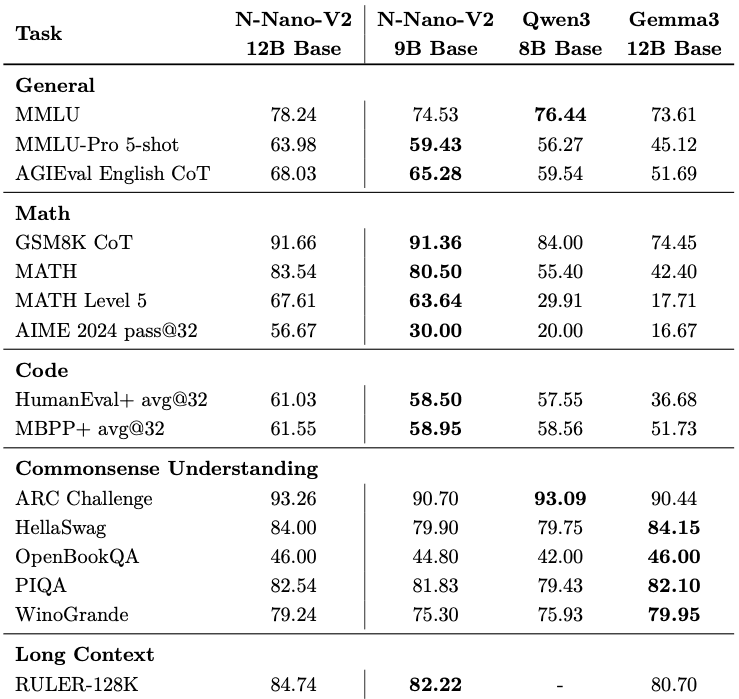

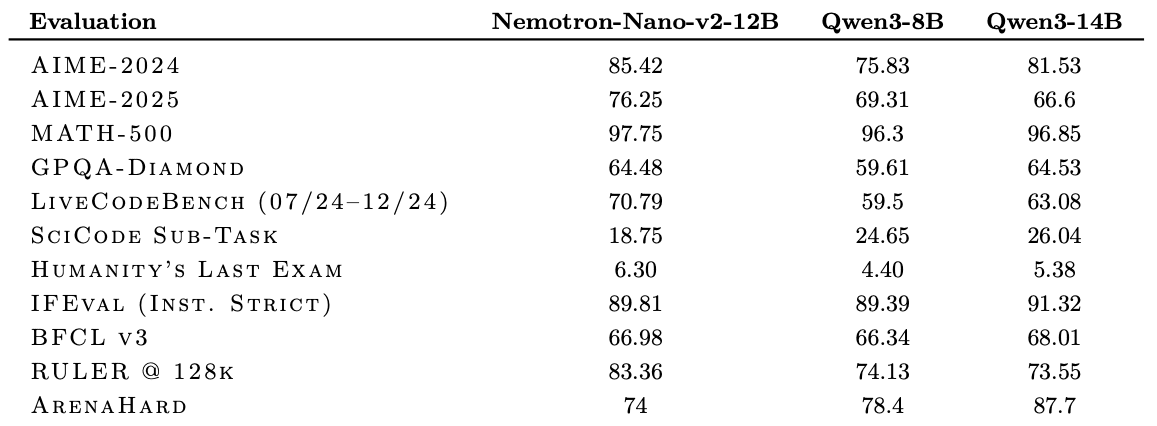

Nemotron-Nano-9B-v2 achieves comparable or better accuracy compared to Qwen3-8B on reasoning benchmarks while increasing throughput by 3-6 times for scenarios with high generation volume. On AIME-2024, the model shows 56.67 pass@32 versus 20.00 for Qwen3-8B, on MATH-500 — 97.75 versus 96.3, on GPQA-Diamond — 64.48 versus 59.61.

Comparative results of Nemotron-Nano-9B-v2 with baseline models on popular benchmarks (Figure 3, page 7)

For multilingual mathematical tasks (MGSM), the average result is 84.80 versus 64.53 for Qwen3-8B. On long context tasks, RULER-128k shows 82.22 points.

Evaluation results highlight competitive accuracy compared to other open small models. Tested in “reasoning enabled” mode using the NeMo-Skills suite, Nemotron-Nano-9B-v2 achieves 72.1 percent on AIME25, 97.8 percent on MATH500, 64.0 percent on GPQA and 71.1 percent on LiveCodeBench.

Across all directions, Nano-9B-v2 shows higher accuracy than Qwen3-8B — the general comparison point. As shown in the technical report, NVIDIA’s reasoning model Nemotron-Nano-v2-9B achieves comparable or better accuracy on complex reasoning benchmarks than the leading comparable-sized open model Qwen3-8B while increasing throughput up to 6 times.

Deployment Features and Usage

NVIDIA-Nemotron-Nano-9B-v2 represents a versatile model for reasoning and dialogue tasks, designed for use in English and programming languages. Other non-English languages (German, French, Italian, Spanish and Japanese) are also supported. The model is optimized for developers creating AI agents, chatbots, RAG applications and other AI applications.

For correct operation, the –mamba_ssm_cache_dtype float32 flag is required to maintain response accuracy. Without this option, model accuracy may decrease. The model supports context up to 128K tokens and works with 15 languages and 43 programming languages.

NVIDIA Nemotron Nano 2 Reasoning Budget Control

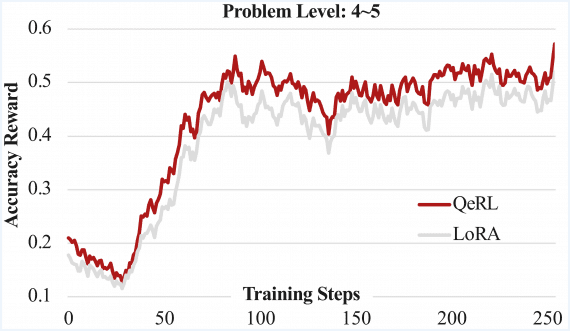

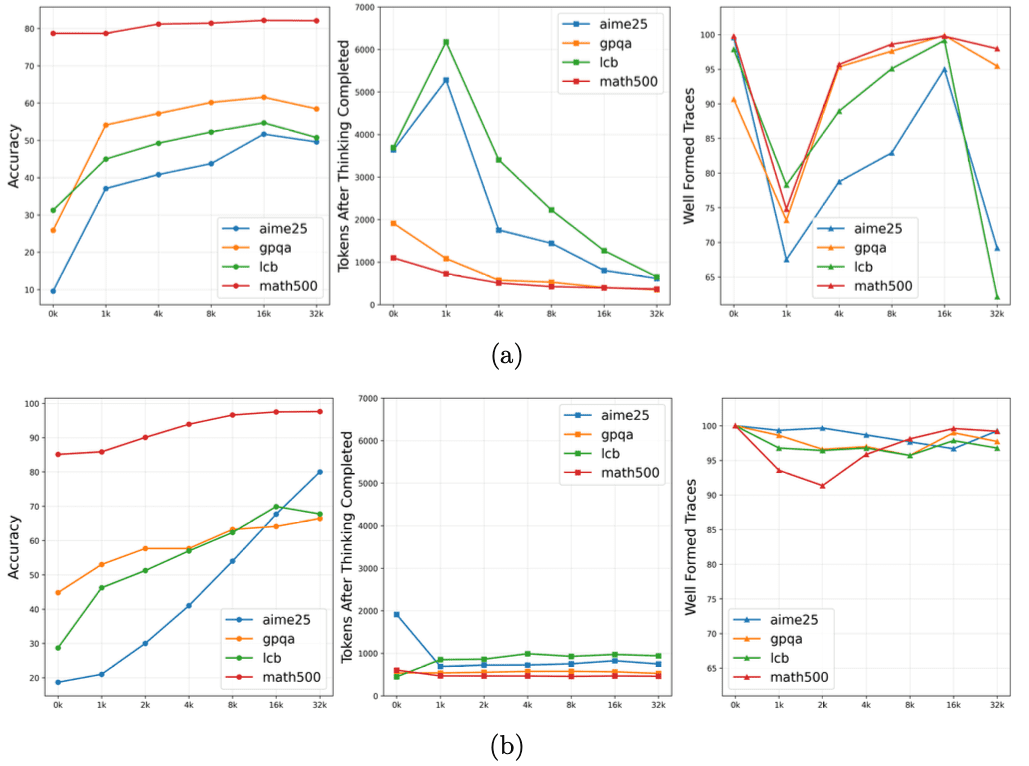

Results of reasoning budget control evaluation for Nemotron Nano 2 under various token limitations (Figure 4, page 9)

A feature of Nemotron Nano 2 is the ability to specify the number of tokens the model can use for reasoning during inference. The model by default generates a chain of reasoning before providing the final answer, though users can toggle this behavior through simple control tokens such as /think or /no_think.

The model also introduces runtime “thinking budget” control, allowing developers to limit the number of tokens devoted to internal reasoning before the model completes the response. This mechanism aims to balance accuracy and latency, which is particularly valuable for customer support applications or autonomous agents.

License

The Nano-9B-v2 model is released under the Nvidia Open Model License Agreement. The license is explicitly designed for commercial use. NVIDIA does not claim ownership of any outputs generated by the model, leaving full rights and responsibility with the developer.

Nemotron-Nano-9B-v2 is released along with corresponding base models and most pre-training and post-training data on HuggingFace as part of the Nemotron-Pre-Training-Dataset-v1 collection, containing 6.6 trillion tokens of premium web crawling, mathematics, code, SFT and multilingual Q&A data.