Chain-of-Experts: Novel Approach Improving MoE Efficiency with up to 42% Memory Reduction

11 March 2025

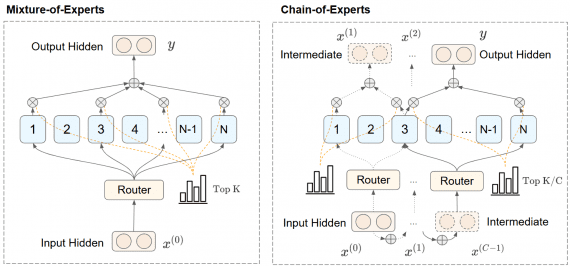

Chain-of-Experts (CoE) is a novel approach that changes how sparse language models process information, delivering better performance with up to 42% less memory. It addresses key limitations in current Mixture-of-Experts (MoE)…