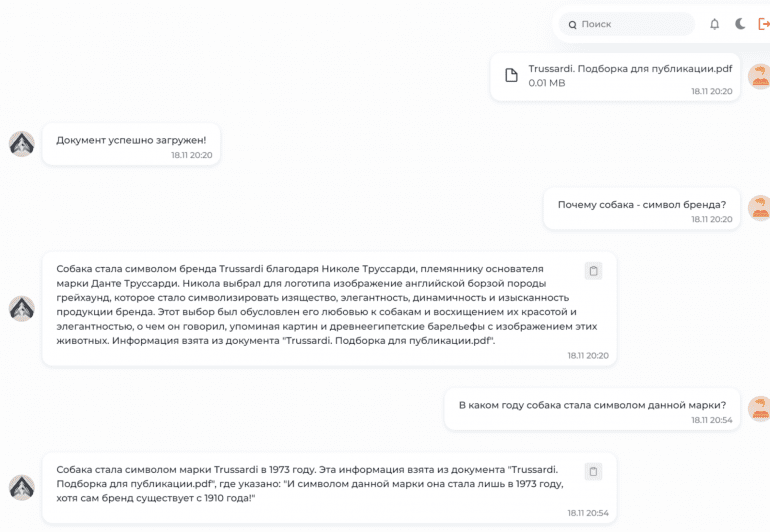

Developers at FractalGPT have rolled out FractalGPT QA, a question-answering agent for working with documents: users can hold a dialogue over uploaded PDF, TXT, and DOCX files.

Key Features of FractalGPT QA:

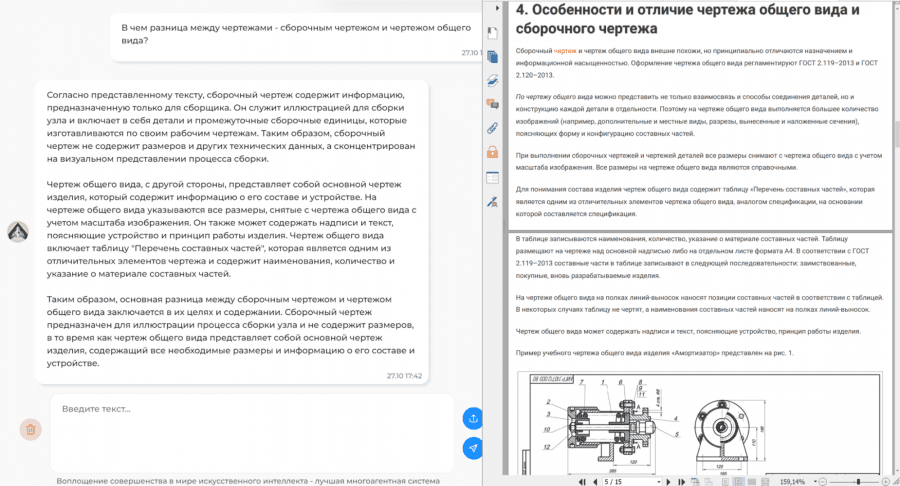

- Processing documents of any length, without the context-length limit typical of most LLMs;

- Use of the proprietary Fractal Answer Synthesis algorithm, which builds a complex internal representation of the document's structure in its own language, significantly reducing hallucination rates and improving answer accuracy (see results below);

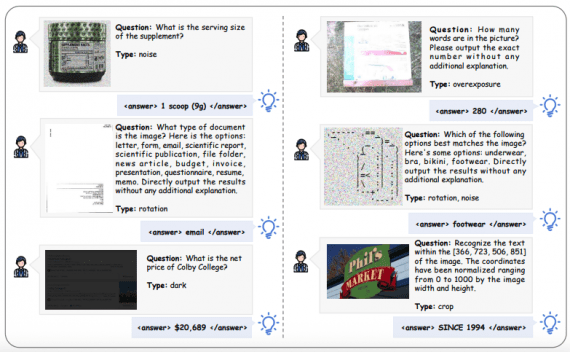

- In-house vector search that works with legal documents, scientific articles, tutorials, and product listings, and understands terms, definitions, and jargon.

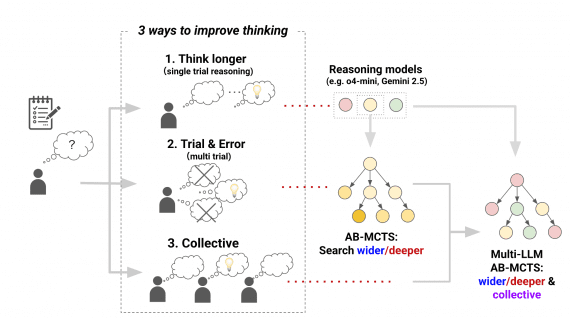

The system is built on a multi-agent architecture (hence the product being called an “agent”), a departure from traditional approaches based on classifiers, rankers, and orchestrators. Instead, its agents “negotiate” with each other to extract knowledge automatically and produce comprehensive, accurate answers, routing each user query to the right document even when the knowledge base contains several similar versions.

The developers addressed critical weaknesses of RAG (Retrieval-Augmented Generation) by moving away from the typical document-snippet approach and using their own vector search. As a result, they report, answer reliability increased by 70%.
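For readers unfamiliar with the term, the general idea of vector search can be sketched as follows. This is a toy illustration of generic retrieval only, not FractalGPT's proprietary search, whose internals the press release does not describe. The bag-of-words embedding, the function names `embed`, `cosine`, and `search`, and the sample passages are all assumptions made for the example.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding (an assumption): a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query: str, passages: list[str], top_k: int = 1) -> list[tuple[float, str]]:
    """Return the top_k (score, passage) pairs, most similar first."""
    q = embed(query)
    scored = [(cosine(q, embed(p)), p) for p in passages]
    return sorted(scored, key=lambda s: s[0], reverse=True)[:top_k]


# Hypothetical sample passages standing in for chunks of an uploaded document.
PASSAGES = [
    "The tenant must pay rent on the first day of each month.",
    "The landlord is responsible for structural repairs.",
    "Either party may terminate the lease with thirty days notice.",
]

best_score, best_passage = search("Who is responsible for repairs?", PASSAGES)[0]
print(best_passage)
```

A production system would replace the word-count vectors with learned embeddings, but the ranking step (score every candidate against the query, keep the closest) has the same shape.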

Results

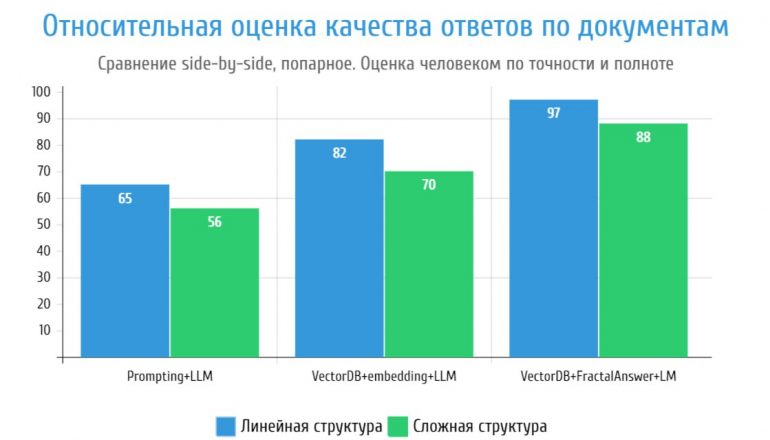

The RAG method based on document structuring with Fractal Answer Synthesis reduces hallucination growth by 18 percentage points on complex texts such as regulations, instructions, legal acts, and design documentation.

Internal tests showed that on complex material, such as lectures, design documentation, scientific publications, laws, and legal acts, answer quality surpasses OpenAI’s solution in 30% of cases on average. The system consistently refuses to answer when the original document text contains insufficient data or when the user’s question is incomplete or incorrect. In demonstrations, the system correctly handled clarifying questions when the text contained multiple sections with possible correct answers.
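The refusal behaviour described above can be illustrated with a simple evidence threshold. This is a sketch under stated assumptions: the press release does not say how FractalGPT decides to refuse, so the word-overlap score, the stopword list, the 0.5 threshold, and the names `support` and `answer_or_refuse` are all hypothetical.

```python
import re
from typing import Optional

# Hypothetical stopword list; a real system would use a fuller one.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "for", "to", "in", "on",
             "what", "when", "who", "which", "how", "does", "do"}


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric tokens of the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support(question: str, passage: str) -> float:
    """Fraction of the question's content words that appear in the passage."""
    content = tokens(question) - STOPWORDS
    if not content:
        return 0.0  # an empty or content-free question has no support
    return len(content & tokens(passage)) / len(content)


def answer_or_refuse(question: str, passages: list[str],
                     threshold: float = 0.5) -> Optional[str]:
    """Return the best-supported passage, or None (refuse) if evidence is too weak."""
    best = max(passages, key=lambda p: support(question, p), default=None)
    if best is None or support(question, best) < threshold:
        return None
    return best


# Hypothetical document chunks.
DOCS = [
    "Rent is due on the first day of each month.",
    "The landlord is responsible for structural repairs.",
]

supported = answer_or_refuse("When is rent due?", DOCS)
unsupported = answer_or_refuse("What is the warranty period?", DOCS)
print(supported)
print(unsupported)
```

Here the function returns the supporting passage rather than a generated answer. In a full pipeline that passage would be handed to a language model, and a `None` result would become an explicit "not enough information" reply.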

Despite significant progress in answer quality, the FractalGPT QA agent still has limitations: correct handling of tabular data, formulas, and questions about them is not guaranteed.

This text is a press release from the FractalGPT company.