Google AI has announced the release of the world’s largest landmark recognition dataset called Google-Landmarks-v2. The dataset is the extension or the second version of the Google-Landmarks dataset released last year.

The new dataset contains more than 5 million images of landmarks (two times more than the version 1 of Google-Landmarks) with more than 200 thousand different landmarks covered, which is seven times more than the first version.

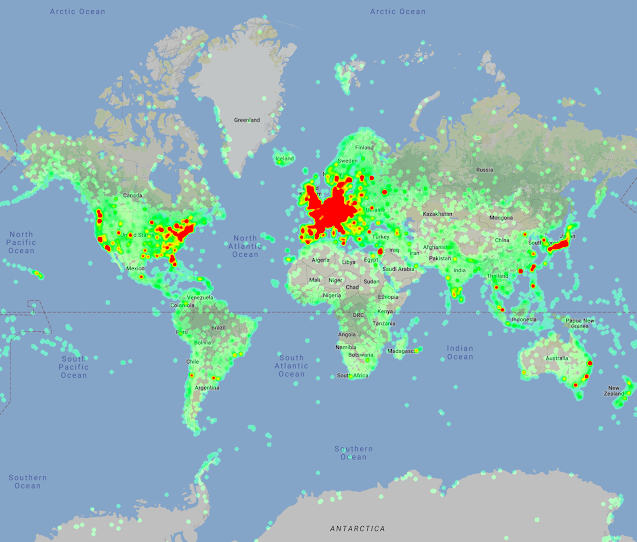

The dataset was created by crowdsourcing the labeling and using the efforts of a world-spanning community of hobby photographers, each familiar with the landmarks in their region.

As with the first version, Google announced the launch of two new Kaggle Competitions using the novel dataset: Landmark Detection 2019 and Landmark Retrieval 2019. The Landmark Recognition 2019 challenge is to recognize a landmark presented in a query image, while the goal of Landmark Retrieval 2019 is to find all images showing that landmark. Along with the Kaggle competitions, Google released Detect-to-Retrieve – a new image representation suitable for object retrieval from images.

The new dataset is available on Github. The images are split into three sets: train, index, and test. For the time being, only the train images are released since the Kaggle competitions are active. The index and test parts of the dataset will be published in the second stage of the Kaggle challenges.