Researchers from Samsung AI Center have proposed a novel method for instance segmentation that achieves state-of-the-art results on several benchmark datasets.

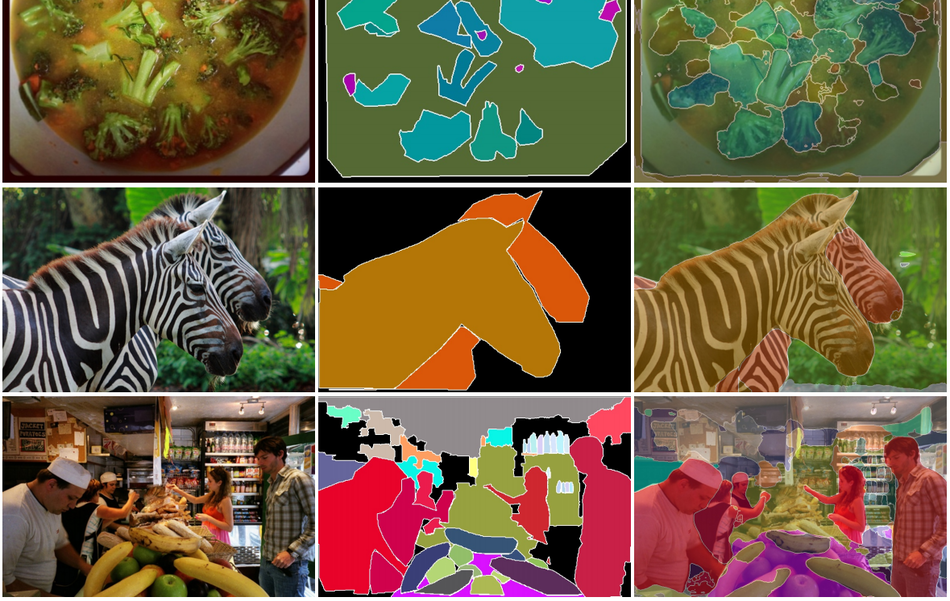

AdaptIS, or Adaptive Instance Selection Network, is a class-agnostic segmentation model that uses AdaIN layers to produce segmentation masks for the different objects present in an image. According to the researchers, the model can perform precise, pixel-accurate segmentation of objects with complex shapes, as well as of objects that are partially or severely occluded.

In the past several years, a number of segmentation models have been proposed for the task of labeling all the pixels in an image that belong to a specific object or class of objects. However, segmentation, and instance segmentation even more so, remains a challenging task.

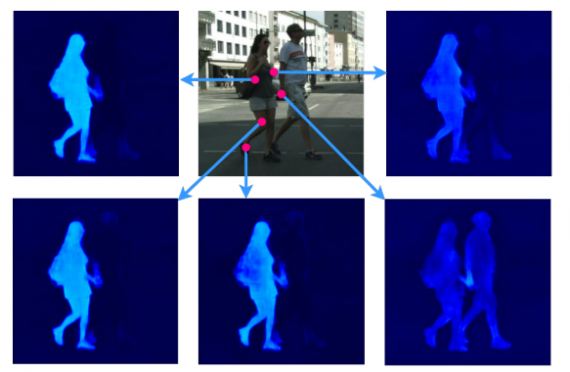

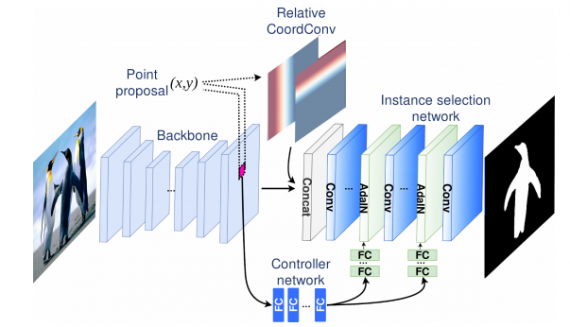

In their paper, the researchers propose AdaptIS, a method that adaptively segments specific objects in an image. It is based on convolutional neural networks and the AdaIN (Adaptive Instance Normalization) mechanism. Three subnetworks work together to produce an instance segmentation mask, given an input image and a point that belongs to the object of interest.
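To make the AdaIN mechanism concrete, here is a minimal NumPy sketch. AdaIN normalizes each channel of a feature map to zero mean and unit variance over its spatial positions, then rescales and shifts it with per-channel parameters supplied from outside. In AdaptIS those parameters come from a controller network that looks at the selected point; the `toy_controller` below, a single linear layer with random weights, is a hypothetical stand-in for illustration only, not the architecture used in the paper.

```python
from typing import Tuple

import numpy as np


def adain(features: np.ndarray, gamma: np.ndarray, beta: np.ndarray,
          eps: float = 1e-5) -> np.ndarray:
    """Adaptive Instance Normalization on a (C, H, W) feature map.

    Each channel is normalized to zero mean and unit variance over its
    spatial positions, then scaled by gamma[c] and shifted by beta[c].
    """
    mean = features.mean(axis=(1, 2), keepdims=True)
    std = np.sqrt(features.var(axis=(1, 2), keepdims=True) + eps)
    normalized = (features - mean) / std
    return gamma[:, None, None] * normalized + beta[:, None, None]


def toy_controller(feature_map: np.ndarray, point: Tuple[int, int],
                   weight: np.ndarray, bias: np.ndarray):
    """Hypothetical controller: one linear layer that maps the feature
    vector at `point` (row, col) to per-channel AdaIN parameters."""
    row, col = point
    f = feature_map[:, row, col]        # feature vector at the point, (C_in,)
    params = weight @ f + bias          # (2 * C,)
    gamma, beta = np.split(params, 2)   # two (C,) vectors
    return gamma, beta


# Usage with random stand-ins for the backbone output and controller weights.
rng = np.random.default_rng(0)
backbone_features = rng.standard_normal((8, 16, 16))   # (C_in, H, W)
weight = 0.1 * rng.standard_normal((16, 8))            # maps C_in=8 -> 2*C=16
bias = np.zeros(16)
gamma, beta = toy_controller(backbone_features, (4, 5), weight, bias)
modulated = adain(backbone_features, gamma, beta)
```

The point of this design is that the same convolutional weights can produce different outputs depending only on which point was selected: changing the point changes `gamma` and `beta`, and therefore how every channel is modulated.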

The first network, a feature extractor, is a pre-trained backbone that encodes the input image. A controller network then encodes the feature at the given position (the point of interest) and provides the input to the AdaIN layers. In addition, a Relative CoordConv block helps disambiguate similar objects at different locations. Finally, the Instance Selection Network takes these inputs and generates a binary mask that segments the selected object.
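The relative-coordinate idea can be sketched as follows, under the assumption that it works like a CoordConv layer (which appends pixel-coordinate channels to a feature map before a convolution) except that the coordinates are measured relative to the selected point. Two visually identical objects then receive different inputs, because their offsets from the point differ. The normalization by the longer image side and the helper names are assumptions made for this illustration; the convolution that would consume the extra channels is omitted.

```python
from typing import Optional, Tuple

import numpy as np


def relative_coord_channels(height: int, width: int, point: Tuple[int, int],
                            scale: Optional[float] = None) -> np.ndarray:
    """Return a (2, H, W) array of each pixel's (row, col) offset from `point`.

    Dividing by `scale` (default: the longer image side) is an assumption
    made here to keep values in a small range.
    """
    row0, col0 = point
    if scale is None:
        scale = float(max(height, width))
    rows, cols = np.meshgrid(np.arange(height), np.arange(width), indexing="ij")
    return np.stack([(rows - row0) / scale, (cols - col0) / scale], axis=0)


def append_relative_coords(features: np.ndarray,
                           point: Tuple[int, int]) -> np.ndarray:
    """Concatenate the two relative-coordinate channels to a (C, H, W) map,
    producing a (C + 2, H, W) input for the next convolution."""
    _, h, w = features.shape
    coords = relative_coord_channels(h, w, point).astype(features.dtype)
    return np.concatenate([features, coords], axis=0)


# Same features, two different selected points -> different coordinate channels.
features = np.zeros((3, 4, 6), dtype=np.float32)
a = append_relative_coords(features, (1, 2))
b = append_relative_coords(features, (2, 4))
```

Here the feature channels of `a` and `b` are identical, so without the extra channels a convolution could not tell the two selections apart; the relative offsets are what break the tie.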

The researchers' evaluations show that the method achieves state-of-the-art results on the Cityscapes and Mapillary datasets even without pre-training on COCO. The researchers have open-sourced the code of the method as well as the pre-trained models, which can be found at the following link. More details about the method can be found in the paper.