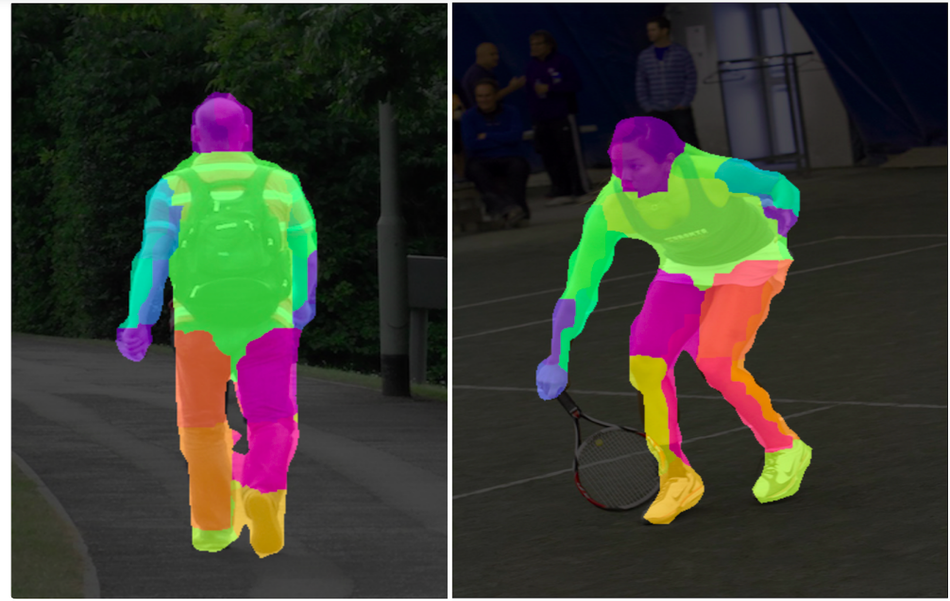

Google has announced the release of BodyPix 2.0, an update to its open-source machine learning model for real-time person and body-part segmentation in the browser.

The first version of BodyPix was released in February this year and included a TensorFlow model deployed in the browser with TensorFlow.js. It could perform person segmentation and, simultaneously, body-part segmentation on video in real time (roughly 25 frames per second on a laptop and 21 fps on a mobile phone). The model was based on the ResNet architecture and was trained for pixel-wise semantic segmentation, with twenty-four body parts as the classes.
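Pixel-wise part segmentation means the model assigns every pixel either one of the 24 part classes or "no person". The sketch below is a hypothetical illustration of working with such an output, not BodyPix code: it assumes the result is a flat `Int32Array` with one entry per pixel, holding a part id from 0 to 23 or -1 for background, and shows how the same map yields both a plain person mask and per-part pixel counts. The helper names `toPersonMask` and `countPartPixels` are invented for this example.

```typescript
// Hypothetical helpers for illustration; not part of the BodyPix API.
const NUM_PARTS = 24; // twenty-four body-part classes
const BACKGROUND = -1; // assumed marker for pixels that belong to no person

// Collapse a part map into a binary person mask: 1 wherever any part was predicted.
function toPersonMask(partMap: Int32Array): Uint8Array {
  const mask = new Uint8Array(partMap.length);
  for (let i = 0; i < partMap.length; i++) {
    mask[i] = partMap[i] === BACKGROUND ? 0 : 1;
  }
  return mask;
}

// Count how many pixels were assigned to each of the 24 part classes.
function countPartPixels(partMap: Int32Array): number[] {
  const counts: number[] = Array.from({ length: NUM_PARTS }, () => 0);
  for (let i = 0; i < partMap.length; i++) {
    const id = partMap[i];
    if (id >= 0 && id < NUM_PARTS) counts[id]++;
  }
  return counts;
}

// A toy 3x3 "frame" in row-major order: background pixels plus parts 0 and 12.
const frame = Int32Array.from([-1, 0, -1, 12, 12, 12, -1, 12, -1]);
const mask = toPersonMask(frame); // 0,1,0,1,1,1,0,1,0
const counts = countPartPixels(frame); // counts[0] === 1, counts[12] === 4
```

Keeping one id per pixel, rather than a separate mask per part, is what makes person segmentation and body-part segmentation two views of a single output.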

The new release, BodyPix 2.0, brings several improvements, including multi-person support, a new and improved ResNet model, and a new API. The implementation is open source, and the researchers released several versions of the model that trade off accuracy against inference time. The set includes a ResNet50 model, which achieves higher accuracy than smaller, more efficient models such as MobileNet.

The API also includes a number of utility functions for visualizing the results. A demo that runs the model in the browser has been released as well, so users can test person segmentation with the BodyPix models using nothing more than their webcam.
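To make concrete what a visualization helper of this kind does, here is a from-scratch sketch; it is not the library's implementation, and the function name `colorPartMap` and the palette are hypothetical. It assumes the same per-pixel part map as before (ids 0 to 23, -1 for background) and converts it into the 4-bytes-per-pixel RGBA layout that a browser canvas `ImageData` uses, leaving background pixels fully transparent so the result can be overlaid on the video frame.

```typescript
// Hypothetical, from-scratch sketch; not BodyPix's own visualization code.
type RGB = [number, number, number];

// Map each pixel's part id to a palette color in an RGBA buffer (4 bytes per pixel).
// Background pixels (id -1, assumed) stay all zeros, i.e. fully transparent.
function colorPartMap(partMap: Int32Array, palette: RGB[]): Uint8ClampedArray {
  const rgba = new Uint8ClampedArray(partMap.length * 4);
  for (let i = 0; i < partMap.length; i++) {
    const id = partMap[i];
    if (id < 0 || id >= palette.length) continue; // not a person pixel
    const [r, g, b] = palette[id];
    rgba.set([r, g, b, 255], 4 * i); // opaque color for this part
  }
  return rgba;
}

// Arbitrary example palette: one distinct color per part id (an assumption).
const palette: RGB[] = Array.from({ length: 24 }, (_, k): RGB => [
  (40 + k * 37) % 256,
  (80 + k * 91) % 256,
  (120 + k * 53) % 256,
]);

const frame = Int32Array.from([-1, 0, 12]);
const pixels = colorPartMap(frame, palette);
// pixels: 0,0,0,0 | 40,80,120,255 | 228,148,244,255
```

In a browser, a buffer like this could be wrapped in an `ImageData` and drawn over the webcam frame with partial opacity, which is the kind of overlay the demo displays.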

More details about BodyPix 2.0 can be found in the GitHub repository, and the demo is available online.