Valentin Bazarevsky and Fan Zhang, research engineers at Google Research, have presented real-time hand tracking software that can run on mobile devices.

Deep learning-based methods have shown unprecedented performance in a number of computer vision tasks, and hand tracking is one of them. However, deploying these methods is often challenging on mobile devices, which lack the computational power of desktop environments.

Valentin and Fan presented a new approach that leverages a specifically designed machine learning pipeline to perform hand tracking in real time and on-device. Their solution is built on top of MediaPipe, an open-source framework for building ML pipelines that process multimodal data.

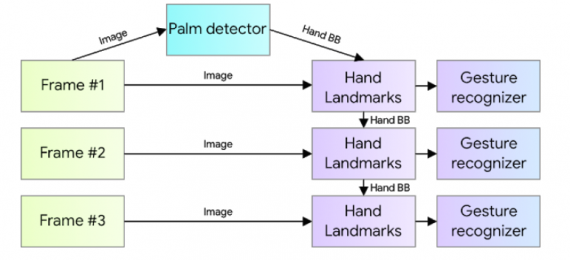

The proposed pipeline employs three models: a palm detector, a hand landmark model, and a gesture recognizer. The palm detector takes input frames (or images) and outputs an oriented bounding box around the hand. The hand landmark model then takes the region cropped by that bounding box and predicts 3D hand keypoints. Finally, the gesture recognizer classifies a gesture based on the set of 3D keypoints provided by the landmark model.
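The hand-off between the three stages can be sketched as plain function composition. This is a minimal illustration, not the authors' code: the `OrientedBox` fields and the callable names (`detect_palm`, `find_landmarks`, `recognize`) are hypothetical placeholders standing in for the trained models.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional, Sequence, Tuple

# A 3D keypoint (x, y, z); the coordinate convention is an assumption.
Keypoint = Tuple[float, float, float]


@dataclass
class OrientedBox:
    """Hypothetical palm detector output: a rotated rectangle."""
    cx: float      # center x, in pixels
    cy: float      # center y, in pixels
    width: float
    height: float
    angle: float   # rotation in radians


def track_hand(
    frame: Any,
    detect_palm: Callable[[Any], Optional[OrientedBox]],
    find_landmarks: Callable[[Any, OrientedBox], Sequence[Keypoint]],
    recognize: Callable[[Sequence[Keypoint]], str],
) -> Optional[str]:
    """Run palm detection, then the landmark model, then the gesture recognizer."""
    box = detect_palm(frame)
    if box is None:
        return None  # no hand found, so the later stages are skipped
    # The landmark model only sees the region defined by the oriented box.
    keypoints = find_landmarks(frame, box)
    if len(keypoints) != 21:
        raise ValueError(f"expected 21 keypoints, got {len(keypoints)}")
    return recognize(keypoints)
```

Passing each stage in as a callable keeps the models independently swappable, which fits the modular design of the pipeline.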

For palm detection and cropping, the researchers use BlazePalm, a single-shot detector model optimized for inference on mobile devices. They introduce a few modifications to the method to make it more robust and better suited to the task at hand. The final model achieves an average precision of around 96%.
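Cropping with an oriented box means sampling the image along the box's rotated axes instead of slicing an axis-aligned window. The NumPy sketch below shows one way to do this with nearest-neighbor sampling. The `OrientedBox` fields, the pixel-center convention, and the square output patch are assumptions for illustration; a production pipeline would more likely use an optimized affine warp on the GPU.

```python
import math
from dataclasses import dataclass

import numpy as np


@dataclass
class OrientedBox:
    """Hypothetical rotated box: center, size, and angle in radians."""
    cx: float
    cy: float
    width: float
    height: float
    angle: float


def box_corners(box: OrientedBox) -> list[tuple[float, float]]:
    """Return the four corners of the box in image coordinates."""
    c, s = math.cos(box.angle), math.sin(box.angle)
    hw, hh = box.width / 2, box.height / 2
    return [
        (box.cx + dx * c - dy * s, box.cy + dx * s + dy * c)
        for dx, dy in [(-hw, -hh), (hw, -hh), (hw, hh), (-hw, hh)]
    ]


def crop_oriented(image: np.ndarray, box: OrientedBox, out_size: int) -> np.ndarray:
    """Resample the box region into an out_size x out_size patch.

    Nearest-neighbor sampling; pixel (i, j) is assumed to cover
    [j, j + 1) x [i, i + 1). Samples outside the image stay zero.
    """
    h, w = image.shape[:2]
    c, s = math.cos(box.angle), math.sin(box.angle)
    # Offsets of each output pixel center from the box center.
    t = (np.arange(out_size) + 0.5) / out_size - 0.5
    dx, dy = np.meshgrid(t * box.width, t * box.height)  # dx varies along columns
    # Rotate the offsets into image coordinates.
    xs = box.cx + dx * c - dy * s
    ys = box.cy + dx * s + dy * c
    xi = np.floor(xs).astype(int)
    yi = np.floor(ys).astype(int)
    valid = (xi >= 0) & (xi < w) & (yi >= 0) & (yi < h)
    out = np.zeros((out_size, out_size) + image.shape[2:], dtype=image.dtype)
    out[valid] = image[yi[valid], xi[valid]]
    return out
```

For a square image and a box centered on it with matching size, `angle=0` returns the image unchanged and `angle=math.pi` returns it rotated by 180 degrees.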

To detect keypoints in the cropped hand images, the researchers first manually annotated around 30K real-world images with 21 3D coordinates each. They also generated a synthetic dataset to improve the robustness of the hand landmark model.
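The 21 keypoints can be stored as a `(21, 3)` array with a fixed index layout. The sketch below assumes the ordering MediaPipe documents for its hand landmarks (the wrist first, then four points per finger from thumb to pinky) and derives a minimal tree of bones from it. The shortened names, `BONES`, and `bone_lengths` are hypothetical helpers, and the bone list is not MediaPipe's own connection list.

```python
import numpy as np

# Joint names per finger, base to tip (names shortened for illustration).
FINGER_JOINTS = {
    "thumb": ["CMC", "MCP", "IP", "TIP"],
    "index": ["MCP", "PIP", "DIP", "TIP"],
    "middle": ["MCP", "PIP", "DIP", "TIP"],
    "ring": ["MCP", "PIP", "DIP", "TIP"],
    "pinky": ["MCP", "PIP", "DIP", "TIP"],
}

# Index 0 is the wrist; each finger then takes four consecutive indices.
LANDMARK_NAMES = ["WRIST"] + [
    f"{finger.upper()}_{joint}"
    for finger, joints in FINGER_JOINTS.items()
    for joint in joints
]

# Chains from the wrist through each finger, e.g. index = [0, 5, 6, 7, 8].
FINGER_CHAINS = {
    finger: [0] + list(range(1 + 4 * k, 5 + 4 * k))
    for k, finger in enumerate(FINGER_JOINTS)
}

# Hypothetical skeleton: consecutive points along each chain form a bone.
BONES = [(chain[i], chain[i + 1]) for chain in FINGER_CHAINS.values() for i in range(4)]


def bone_lengths(landmarks) -> np.ndarray:
    """Return the length of every bone for one (21, 3) set of landmarks."""
    pts = np.asarray(landmarks, dtype=float)
    if pts.shape != (21, 3):
        raise ValueError(f"expected shape (21, 3), got {pts.shape}")
    starts = pts[[a for a, _ in BONES]]
    ends = pts[[b for _, b in BONES]]
    return np.linalg.norm(ends - starts, axis=1)
```

Because the 21 points are connected by 20 bones, the skeleton is a tree rooted at the wrist.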

The predicted keypoints form a hand skeleton from which the gesture is derived: the researchers use the accumulated angles between joints in the skeleton to determine the state of each finger (for example, bent or straight), and then map the set of finger states to a set of output gestures.
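The angle-based rule can be sketched as follows: sum the bend at each joint along a finger, call the finger straight when the sum is small, and look the resulting finger states up in a table. The 60-degree threshold, the `GESTURES` table, and all function names are hypothetical choices for illustration, not values from the researchers' system.

```python
import numpy as np

# Wrist-to-tip chains, assuming 21 landmarks: wrist = 0, then 4 per finger.
FINGER_CHAINS = {
    "thumb": [0, 1, 2, 3, 4],
    "index": [0, 5, 6, 7, 8],
    "middle": [0, 9, 10, 11, 12],
    "ring": [0, 13, 14, 15, 16],
    "pinky": [0, 17, 18, 19, 20],
}

# Hypothetical lookup: (thumb, index, middle, ring, pinky), True = straight.
GESTURES = {
    (False, False, False, False, False): "fist",
    (False, True, False, False, False): "one",
    (False, True, True, False, False): "two",
    (True, True, True, True, True): "five",
}


def bend_angle(a: np.ndarray, b: np.ndarray, c: np.ndarray) -> float:
    """Deviation in degrees from a straight line at joint b (0 = straight)."""
    u, v = b - a, c - b
    denom = np.linalg.norm(u) * np.linalg.norm(v)
    if denom == 0:
        return 0.0  # degenerate joint: treat coincident points as unbent
    cos = np.clip(np.dot(u, v) / denom, -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)))


def finger_states(landmarks, threshold_deg: float = 60.0) -> dict[str, bool]:
    """A finger is straight when its accumulated joint bend is below the threshold."""
    pts = np.asarray(landmarks, dtype=float)
    states = {}
    for name, chain in FINGER_CHAINS.items():
        total = sum(
            bend_angle(pts[chain[i - 1]], pts[chain[i]], pts[chain[i + 1]])
            for i in range(1, len(chain) - 1)
        )
        states[name] = total < threshold_deg
    return states


def classify_gesture(landmarks) -> str:
    """Map the per-finger states to a gesture label via the lookup table."""
    states = finger_states(landmarks)
    key = tuple(states[finger] for finger in FINGER_CHAINS)
    return GESTURES.get(key, "unknown")
```

A fixed table like this is easy to extend with new gestures, since adding one only means adding a new combination of finger states.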

As mentioned above, the solution was built with the MediaPipe framework, in which the pipeline is a directed graph of modular components. This design makes the whole pipeline highly efficient and lets it run in real time across a number of platforms and devices. More details about the approach can be found in the official blog post.
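The idea of a pipeline as a directed graph of modular components can be illustrated with a toy scheduler that runs each node once the streams it consumes are available. This is not MediaPipe's API: MediaPipe configures graphs declaratively and implements its components as C++ calculators that exchange timestamped packets. The `Node` class and `run_graph` function below are hypothetical and process a single frame only.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Any, Callable


@dataclass
class Node:
    """A hypothetical pipeline component with named input and output streams."""
    name: str
    inputs: list[str]
    outputs: list[str]
    fn: Callable[..., tuple]  # returns one value per output stream


def run_graph(nodes: list[Node], packets: dict[str, Any]) -> dict[str, Any]:
    """Run each node once, in dependency order, on one set of input packets."""
    producer = {out: node.name for node in nodes for out in node.outputs}
    # A node depends on whichever nodes produce the streams it consumes.
    deps = {
        node.name: {producer[s] for s in node.inputs if s in producer}
        for node in nodes
    }
    by_name = {node.name: node for node in nodes}
    streams = dict(packets)
    for name in TopologicalSorter(deps).static_order():
        node = by_name[name]
        results = node.fn(*(streams[s] for s in node.inputs))
        streams.update(zip(node.outputs, results))
    return streams
```

Because nodes are declared independently and ordered by their stream dependencies, they can be listed in any order, and a component such as the gesture recognizer can be replaced without touching the rest of the graph.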