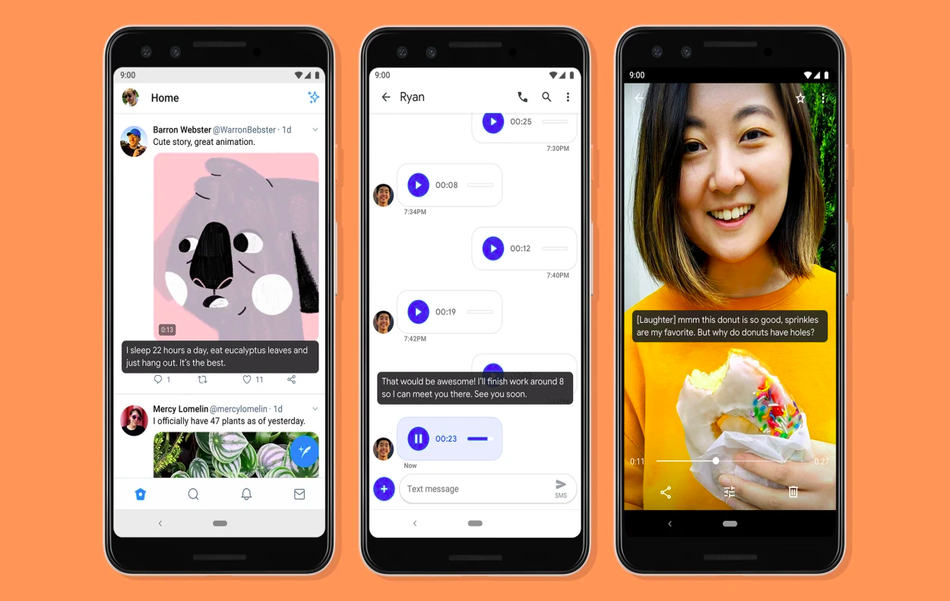

Google has announced Live Caption, a new feature for Pixel phones and Android that automatically captions media playing on the phone in real time. It relies on recent advances in deep learning to make real-time captioning possible on a mobile device.

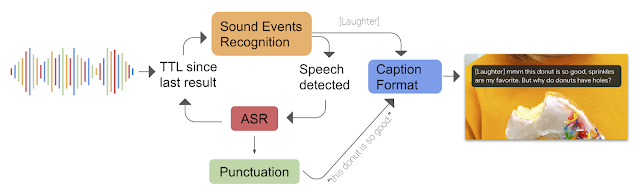

Live Caption runs entirely on-device, without any network connection. This keeps latency low, so captions appear almost instantly. It combines three on-device neural network models into a single caption track: a recurrent neural network transducer (RNN-T) for speech recognition, a text-based recurrent neural network that inserts punctuation (which is never spoken aloud), and a convolutional neural network (CNN) that classifies sound events.
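Google has not published Live Caption's code. The Python sketch below only illustrates how the outputs of three separate models could be merged into one caption track. `recognize_speech`, `punctuate`, and `classify_sound` are hypothetical stubs standing in for the RNN-T, the punctuation RNN, and the sound-event CNN. `AudioChunk` and the `APPLAUSE` tag are also invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AudioChunk:
    """Hypothetical stand-in for a short window of media audio."""
    has_speech: bool
    words: List[str]            # what a real ASR model would decode (stubbed)
    sound_event: Optional[str]  # what a real sound classifier would detect (stubbed)


def recognize_speech(chunk: AudioChunk) -> List[str]:
    """Stub for the RNN-T recognizer: returns raw, lowercase, unpunctuated words."""
    return [w.lower() for w in chunk.words]


def punctuate(words: List[str]) -> str:
    """Stub for the text-based punctuation RNN: capitalizes and adds a period."""
    if not words:
        return ""
    text = " ".join(words)
    return text[0].upper() + text[1:] + "."


def classify_sound(chunk: AudioChunk) -> Optional[str]:
    """Stub for the CNN sound-event classifier: returns a tag or None."""
    return chunk.sound_event


def caption_track(chunks: List[AudioChunk]) -> List[str]:
    """Merges the outputs of all three models into one ordered caption track."""
    track = []
    for chunk in chunks:
        if chunk.has_speech:
            line = punctuate(recognize_speech(chunk))
            if line:
                track.append(line)
        event = classify_sound(chunk)
        if event:
            track.append(f"[{event}]")
    return track


chunks = [
    AudioChunk(True, ["Hello", "everyone"], None),
    AudioChunk(False, [], "APPLAUSE"),  # hypothetical sound-event tag
]
print(caption_track(chunks))  # ['Hello everyone.', '[APPLAUSE]']
```

Keeping the three models as independent functions mirrors the description above. Each model handles one task, and only the final merge step produces the single track the user sees.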

All of the models were quantized and serialized for the TensorFlow Lite runtime and optimized to run on a mobile device. For example, the speech recognition model runs only while speech is present, and it is loaded into and unloaded from memory according to usage.
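The Python sketch below illustrates both ideas in that paragraph under simplifying assumptions. Part 1 uses a simple per-tensor affine int8 scheme, in which a real value is approximated as `scale * (q - zero_point)`. This is the general form TensorFlow Lite's quantization specification describes, not Google's converter code. Part 2 runs a hypothetical speech model only on frames a voice-activity check marks as speech, and frees it when speech stops. `OnDemandModel`, `transcribe`, and the `is_speech` callback are invented names. Unloading on the first silent frame is a simplification; a real system would more likely wait for an idle timeout.

```python
from typing import Callable, List, Optional, Tuple


# Part 1: affine int8 quantization (illustrative, not TensorFlow Lite's code)
def quantize_int8(values: List[float]) -> Tuple[List[int], float, int]:
    """Maps floats to int8 so that real_value ~= scale * (q - zero_point)."""
    lo = min(min(values), 0.0)  # the range must contain 0 so 0.0 stays exact
    hi = max(max(values), 0.0)
    scale = (hi - lo) / 255 or 1.0  # 256 int8 levels; avoid a zero scale
    zero_point = max(-128, min(127, round(-128 - lo / scale)))
    q = [max(-128, min(127, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q: List[int], scale: float, zero_point: int) -> List[float]:
    """Recovers approximate float values from int8 codes."""
    return [scale * (x - zero_point) for x in q]


# Part 2: run a (hypothetical) speech model only during speech
class OnDemandModel:
    """Loads a model on first use and frees it again when it goes idle."""

    def __init__(self, loader: Callable[[], Callable[[str], str]]):
        self._loader = loader
        self._model: Optional[Callable[[str], str]] = None
        self.loads = 0  # counts how often the model was (re)loaded

    def run(self, frame: str) -> str:
        if self._model is None:
            self._model = self._loader()  # load into memory on demand
            self.loads += 1
        return self._model(frame)

    def unload(self) -> None:
        self._model = None  # drop the reference so memory can be reclaimed


def transcribe(frames: List[str], is_speech: Callable[[str], bool],
               asr: OnDemandModel) -> List[str]:
    """Runs ASR only on speech frames and unloads it as soon as speech stops."""
    out = []
    for frame in frames:
        if is_speech(frame):
            out.append(asr.run(frame))
        else:
            asr.unload()
    return out


q, scale, zero_point = quantize_int8([-1.0, 0.0, 0.5, 1.0])
print(q, dequantize(q, scale, zero_point))

asr = OnDemandModel(lambda: str.upper)  # stand-in "model" for the demo
print(transcribe(["hi", "", "there", "you"], lambda f: f != "", asr), asr.loads)
# ['HI', 'THERE', 'YOU'] 2
```

Storing one byte per value instead of a four-byte float cuts model size roughly by four, at the cost of a small rounding error per value. Gating the recognizer on speech means it consumes no compute, and after unloading no memory, during silence or music.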

Live Caption is currently available only on the Pixel 4 and Pixel 4 XL, and it will come to Pixel 3 models later this year. According to Google, it will also reach other Android devices soon.