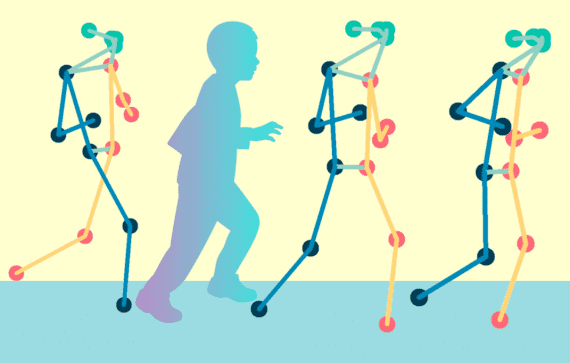

Researchers from the Massachusetts Institute of Technology (MIT) and the Toyota Research Institute have proposed a new method that can detect where people are looking in videos.

In their paper, published at ICCV 2019, the researchers describe a new dataset for large-scale gaze tracking together with a method for robust 3D gaze estimation. The dataset, Gaze360, contains labeled 3D gaze in videos of 238 subjects recorded in indoor and outdoor environments. According to the researchers, it is the largest publicly available 3D gaze dataset in terms of both the number of subjects and variety.

The researchers used the dataset to train several 3D gaze estimation models that incorporate temporal information and output an estimate of gaze uncertainty. Their final model takes multiple frames as input. Each frame is encoded, and the sequence of encoded frames is fed to LSTM cells, which output the gaze direction. The model is trained with a pinball regression loss, which allows it to also output an estimate of the uncertainty of its gaze prediction.
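The pinball (quantile) loss is what lets a regression network report uncertainty: instead of only a point estimate, it is trained to predict quantiles of the target. The following Python sketch illustrates the idea for a single gaze angle. It is an illustration under stated assumptions, not the authors' implementation: it assumes the network outputs an angle `pred` and a non-negative spread `sigma`, with the 10th and 90th percentiles modelled as `pred - sigma` and `pred + sigma`. The function names `pinball` and `gaze_pinball_loss` are hypothetical.

```python
"""Minimal sketch of a pinball (quantile) loss for an angle-plus-uncertainty output.

Assumption (not taken from the article): the network predicts a gaze angle
`pred` and a non-negative spread `sigma`, and the lower and upper quantiles
of the angle are modelled as pred - sigma and pred + sigma.
"""


def pinball(target: float, quantile_pred: float, tau: float) -> float:
    """Standard pinball loss for a quantile level tau in (0, 1)."""
    diff = target - quantile_pred
    # Under-prediction (diff > 0) is weighted by tau,
    # over-prediction (diff < 0) by (1 - tau).
    return max(tau * diff, (tau - 1.0) * diff)


def gaze_pinball_loss(target: float, pred: float, sigma: float,
                      tau: float = 0.1) -> float:
    """Sum of pinball losses for the lower (tau) and upper (1 - tau) quantiles."""
    lower = pred - sigma  # hypothetical 10th-percentile estimate
    upper = pred + sigma  # hypothetical 90th-percentile estimate
    return pinball(target, lower, tau) + pinball(target, upper, 1.0 - tau)


if __name__ == "__main__":
    # Large error (target 0.3 rad, prediction 0): a wider sigma is cheaper.
    print(gaze_pinball_loss(0.3, pred=0.0, sigma=0.1))
    print(gaze_pinball_loss(0.3, pred=0.0, sigma=0.3))
    # Perfect prediction: a wider sigma is penalised.
    print(gaze_pinball_loss(0.0, pred=0.0, sigma=0.1))
    print(gaze_pinball_loss(0.0, pred=0.0, sigma=0.3))
```

With a target of 0.3 rad and a prediction of 0, widening `sigma` from 0.1 to 0.3 lowers the loss (from about 0.22 to about 0.06), while for a perfect prediction the same widening raises it (from about 0.02 to about 0.06). This trade-off pushes `sigma` to grow on hard samples and shrink on easy ones, which is why it can be read as an uncertainty estimate.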

The researchers performed an ablation study to evaluate the method and its generalization capabilities. They also ran a cross-dataset evaluation to assess the value of the Gaze360 dataset: training on Gaze360 gave the best results, compared with training on three other datasets (Columbia, MPIIFaceGaze, and RT-GENE).

More details about the method are available in the paper.