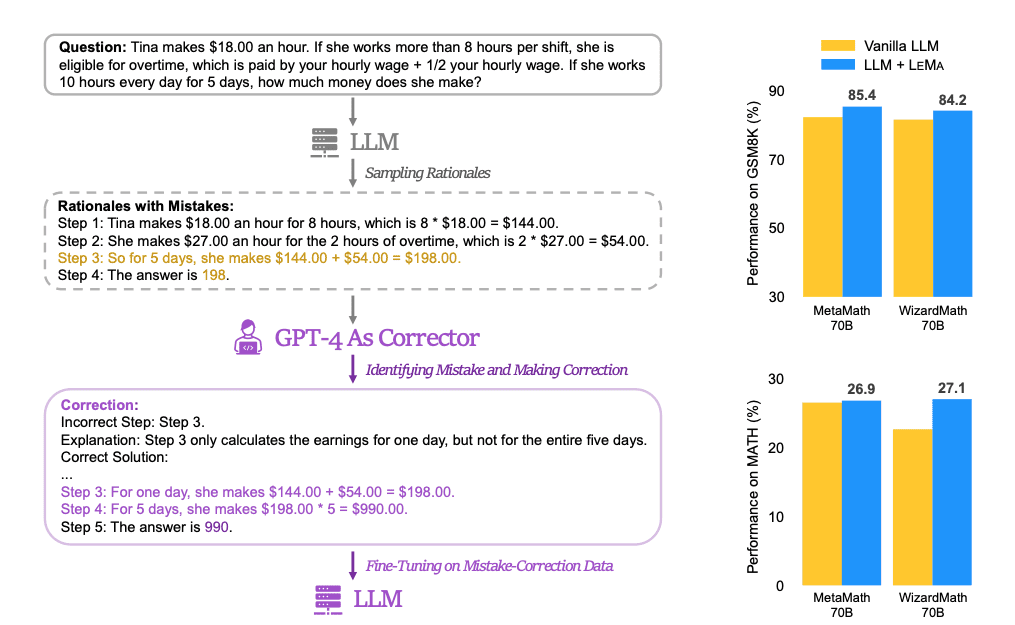

Microsoft researchers have introduced LeMa (Learning from Mistakes), an open-source algorithm designed to enhance the ability of large language models to solve mathematical problems. LeMa encourages models to learn from their errors, mimicking the human learning process.

The approach is based on the observation that people solve problems more effectively when they examine each step of their reasoning and fix errors as soon as they find them.

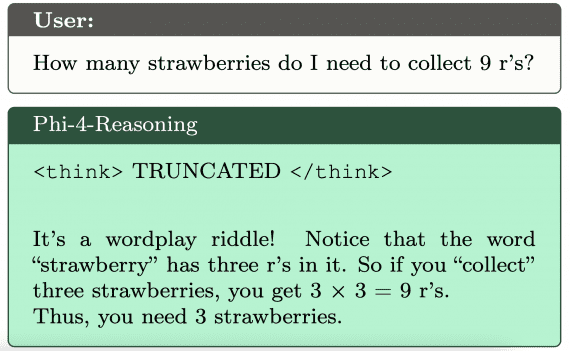

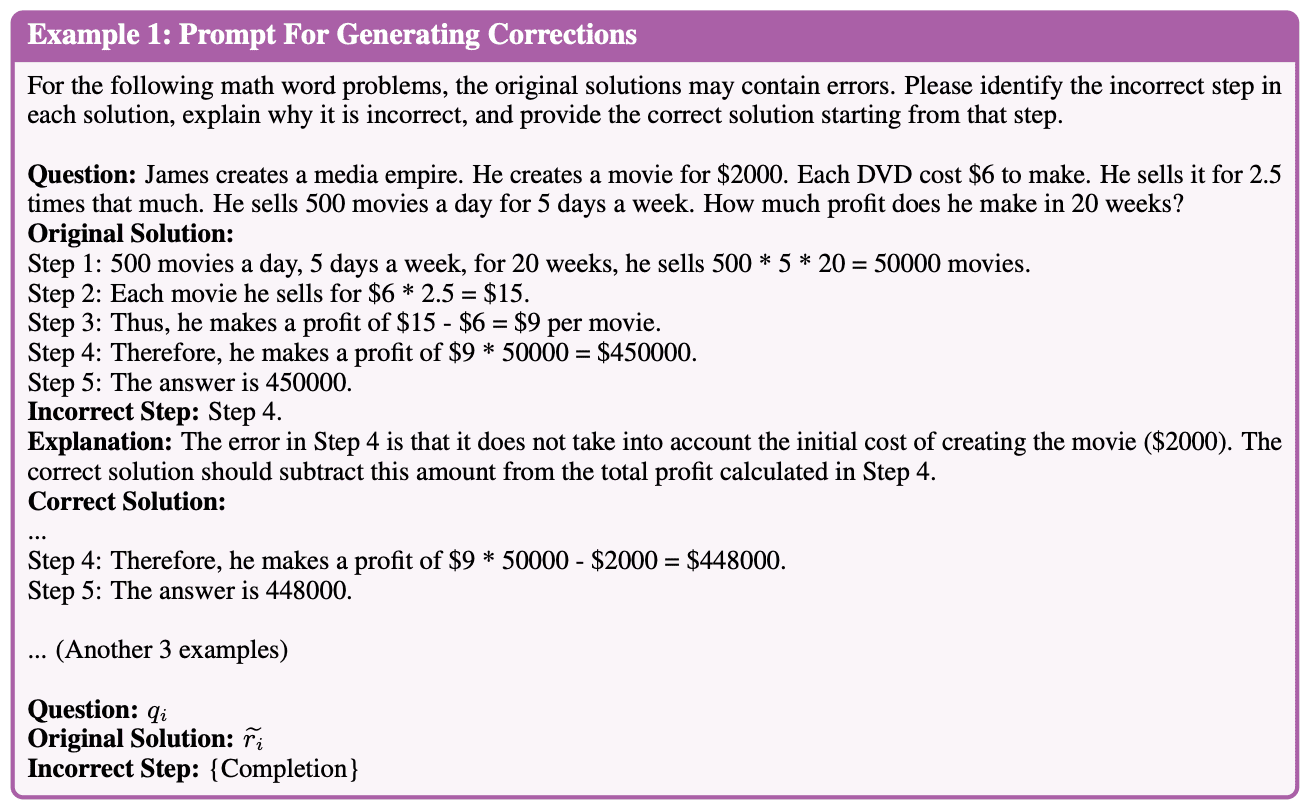

The researchers built a training dataset from the responses of several language models that produced incorrect reasoning paths on mathematical problems. These responses were then passed to GPT-4. Each request contained the problem statement and the model's answer, and asked GPT-4 to identify the incorrect step, explain the error, and give a corrected solution starting from the first mistake.
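A minimal sketch of how such a correction request could be assembled is shown below. The template text, the `FlawedSample` type and the `build_correction_prompt` helper are hypothetical: they paraphrase the three tasks described above and are not the exact prompt used by the LeMa authors.

```python
from dataclasses import dataclass


@dataclass
class FlawedSample:
    question: str
    model_solution: str  # an incorrect reasoning path produced by a source model


# Hypothetical template paraphrasing the tasks given to GPT-4;
# NOT the authors' exact wording.
CORRECTION_TEMPLATE = """\
Question:
{question}

Original solution:
{solution}

Tasks:
1. Identify the first incorrect step in the original solution.
2. Explain why that step is incorrect.
3. Correct the step and continue the solution from there to the final answer.
"""


def build_correction_prompt(sample: FlawedSample) -> str:
    """Fill the template with one problem and one flawed reasoning path."""
    return CORRECTION_TEMPLATE.format(
        question=sample.question.strip(),
        solution=sample.model_solution.strip(),
    )


if __name__ == "__main__":
    sample = FlawedSample(
        question="Tom has 3 boxes with 4 apples each. He eats 2. How many are left?",
        model_solution="Step 1: 3 + 4 = 7 apples.\nStep 2: 7 - 2 = 5.\nAnswer: 5",
    )
    print(build_correction_prompt(sample))
```

The resulting string would be sent to the corrector model; keeping the question and the flawed solution as separate, labeled sections makes it easy for the corrector to point at the first wrong step.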

GPT-4 was chosen as the corrector because it performed best on this task in manual testing. Depending on the model being trained, between 26,000 and 45,000 "model solution – corrected solution" pairs were collected, and this dataset was then used to fine-tune the models further.
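The sketch below shows one plausible way to turn such pairs into supervised fine-tuning records. It rests on several assumptions not stated in the article: the `prompt`/`completion` field names, the "Answer: <value>" convention on the last line, and the filter that drops corrections whose final answer disagrees with the reference answer are all hypothetical illustrations, not LeMa's published pipeline.

```python
import json
from dataclasses import dataclass


@dataclass
class CorrectionPair:
    question: str
    model_solution: str      # flawed reasoning path from the source model
    corrected_solution: str  # corrector output: error location, explanation, fix


def extract_final_answer(text: str) -> str:
    """Hypothetical convention: the last line reads 'Answer: <value>'."""
    last_line = text.strip().splitlines()[-1]
    return last_line.split("Answer:", 1)[-1].strip()


def to_training_record(pair: CorrectionPair) -> dict:
    """Input = question + flawed solution; target = the correction."""
    prompt = (
        f"Question:\n{pair.question}\n\n"
        f"Original solution:\n{pair.model_solution}\n\n"
        "Correction:\n"
    )
    return {"prompt": prompt, "completion": pair.corrected_solution}


def build_dataset(pairs: list[CorrectionPair], gold_answers: dict[str, str]) -> list[dict]:
    records = []
    for pair in pairs:
        # Assumed quality filter: keep only corrections that reach the reference answer.
        if extract_final_answer(pair.corrected_solution) != gold_answers.get(pair.question):
            continue
        records.append(to_training_record(pair))
    return records


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, a common format for fine-tuning data."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


if __name__ == "__main__":
    pairs = [
        CorrectionPair(
            "Tom has 3 boxes with 4 apples each. He eats 2. How many are left?",
            "Step 1: 3 + 4 = 7 apples.\nStep 2: 7 - 2 = 5.\nAnswer: 5",
            "Step 1 is wrong: the boxes must be multiplied, 3 * 4 = 12.\n"
            "Step 2: 12 - 2 = 10.\nAnswer: 10",
        )
    ]
    gold = {pairs[0].question: "10"}
    print(to_jsonl(build_dataset(pairs, gold)), end="")
```

Training on the question plus the flawed solution as input, with the correction as the target, is what makes the model practice locating and repairing errors rather than only producing fresh solutions.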

According to Microsoft, LeMa improved accuracy on mathematical problems for each of the five base language models in the study. In addition, after fine-tuning with LeMa, the specialized math models WizardMath and MetaMath reached accuracies of 85% on GSM8K and 27% on MATH, which the researchers report as the best results among open-source models that do not rely on code execution.

The algorithm’s code and all research data are published on GitHub.