Researchers from MIT University have conducted a research project where their goal was to understand what makes deepfakes detectable.

With the numerous advances in image generation in the past decade, we have also seen a large number of techniques for generating fake data or fake images, which got the popular name “deepfakes” in the community. It has been a “cat vs. mouse” game since then between people working to improve image generation models and people who were developing deepfake detectors whose goal was to detect if a sample comes from a generative model.

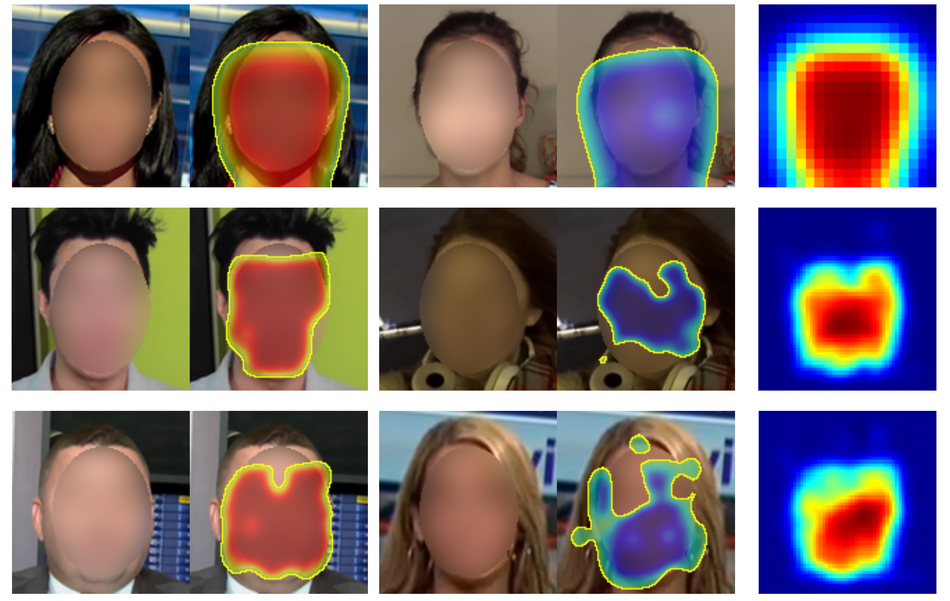

In their latest paper, researchers tried to answer the question: “What makes deepfakes detectable? They tried to identify a subset of properties of deepfake images that give clues for detecting its origin. This identification was done using a patch-based classifier model with a limited receptive field from which researchers extracted the regions in the fake images that are more detectable.

Another important finding in this project was the fact that adversarial training of the generative model provides still imperfections in the generated samples and these can be considered as detectable artifacts.

Experiments were conducted using several existing image generation models such as PGAN, SGAN, SGAN2, and the CelebA and FFHQ datasets. Results confirmed the hypothesis that deepfake generated images contain artifacts that make them detectable.

More in detail about the project can be read on the project’s website. The implementation was open-sourced and it is available on Github.