Researchers from the University of North Carolina and the University of Maryland have proposed a new method for detecting deception from walking patterns and gestures.

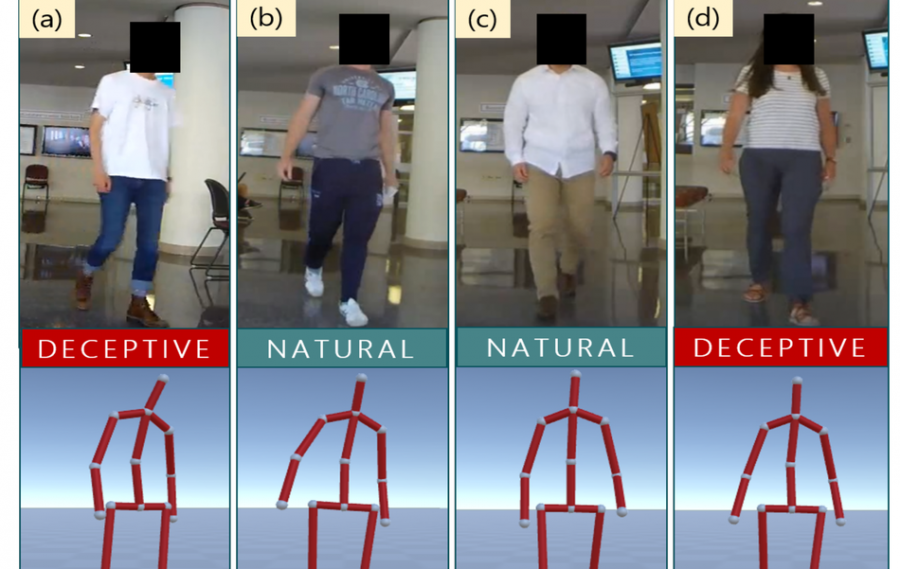

In their paper, “The Liar’s Walk: Detecting Deception with Gait and Gesture”, they present a method that is based on recurrent neural networks and learns to detect deception directly from data. The network first extracts walking gaits from an input video as a series of 3D poses. This data is then separated into gait and gesture features, which are fed to an LSTM network that performs the actual classification.
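To make the data flow concrete, here is a minimal sketch of such a pipeline: per-frame 3D poses and gesture features go into an LSTM, and the final hidden state is mapped to a probability. It is not the authors' implementation. The joint count, the per-frame gesture flags, the concatenation of gait and gesture inputs, the layer sizes, and the names `TinyLSTM` and `deception_score` are all assumptions made for illustration, and the weights are random and untrained, so the output carries no meaning.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(matrix, vector):
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def rand_matrix(rows, cols, rng, scale=0.1):
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class TinyLSTM:
    """Single-layer LSTM, forward pass only, with random (untrained) weights."""

    GATES = ("i", "f", "g", "o")  # input, forget, cell candidate, output

    def __init__(self, input_size, hidden_size, rng):
        self.hidden_size = hidden_size
        cols = input_size + hidden_size
        self.W = {gate: rand_matrix(hidden_size, cols, rng) for gate in self.GATES}
        self.b = {gate: [0.0] * hidden_size for gate in self.GATES}

    def forward(self, sequence):
        h = [0.0] * self.hidden_size  # hidden state
        c = [0.0] * self.hidden_size  # cell state
        for x in sequence:
            z = list(x) + h  # current input concatenated with previous hidden state
            pre = {
                gate: [a + b for a, b in zip(matvec(self.W[gate], z), self.b[gate])]
                for gate in self.GATES
            }
            i = [sigmoid(v) for v in pre["i"]]
            f = [sigmoid(v) for v in pre["f"]]
            g = [math.tanh(v) for v in pre["g"]]
            o = [sigmoid(v) for v in pre["o"]]
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return h  # final hidden state summarizes the whole walk


def deception_score(poses, gestures, lstm, w_out, b_out):
    """Return a probability-like score in (0, 1) for one walking sequence.

    poses:    one list per frame of (x, y, z) joint positions
    gestures: one list per frame of hypothetical gesture flags (0.0 or 1.0)
    """
    # Assumption: gait and gesture inputs are fused by per-frame concatenation.
    frames = [
        [coord for joint in frame for coord in joint] + list(flags)
        for frame, flags in zip(poses, gestures)
    ]
    features = lstm.forward(frames)
    logit = sum(w * v for w, v in zip(w_out, features)) + b_out
    return sigmoid(logit)


# Toy input: all sizes below are illustrative assumptions.
rng = random.Random(0)
NUM_JOINTS, NUM_GESTURES, HIDDEN, FRAMES = 16, 4, 8, 10
poses = [
    [(rng.random(), rng.random(), rng.random()) for _ in range(NUM_JOINTS)]
    for _ in range(FRAMES)
]
gestures = [
    [float(rng.random() < 0.2) for _ in range(NUM_GESTURES)] for _ in range(FRAMES)
]
lstm = TinyLSTM(NUM_JOINTS * 3 + NUM_GESTURES, HIDDEN, rng)
w_out = [rng.uniform(-0.1, 0.1) for _ in range(HIDDEN)]
score = deception_score(poses, gestures, lstm, w_out, 0.0)
print(f"score from untrained weights: {score:.3f}")
```

In practice the weights would be learned from labeled sequences, and a deep learning framework would replace the hand-written LSTM cell. The pure-Python version is used here only to keep the example dependency-free.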

To develop the method, the researchers built a new dataset called DeceptiveWalk, which contains gaits and gestures together with their corresponding deception labels.

The proposed method achieves 93.4% classification accuracy on the DeceptiveWalk dataset. According to the researchers, this is the first method able to detect deception from the non-verbal cues of gait and gesture. To compare it with existing methods, they ran a separate experiment on emotion and action recognition from gaits, since the underlying task is the same: classifying gaits. The evaluation showed that the method outperforms state-of-the-art methods for emotion and action recognition by a significant margin.

More details about the method can be found in the paper.