A new method proposed by researchers at Google AI is able to identify “magic” moments in videos and create animations from them.

Deployed as a new feature in Google Photos, the algorithm detects important actions within videos so that they can be highlighted and extracted as animations. In the paper “Rethinking the Faster R-CNN Architecture for Temporal Action Localization”, the researchers tackle exactly this problem: identifying “important” actions in natural videos.

To do so, the researchers crowdsourced the collection of labeled data. They asked people to find and annotate all moments within videos that might be considered important or “special”. The resulting large labeled dataset was used to train models that identify those moments in videos.

Action identification can be seen as a problem similar to object detection. However, the output here is itself a sequence of frames (a video segment), and the model must predict the starting and ending frames along with the label of the action, which makes the task more complex than simple object detection.
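
To make the analogy with object detection concrete, the sketch below represents a detected action as a labeled frame range and compares two ranges with a one-dimensional intersection-over-union, the temporal counterpart of the box overlap used in object detection. The `Segment` class, the frame numbers, and the label are illustrative assumptions, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A labeled action: frames [start, end) of a video (hypothetical structure)."""
    start: int  # index of the first frame, inclusive
    end: int    # index one past the last frame, exclusive
    label: str


def temporal_iou(a: Segment, b: Segment) -> float:
    """Overlap of two frame ranges divided by the length of their union."""
    intersection = max(0, min(a.end, b.end) - max(a.start, b.start))
    union = (a.end - a.start) + (b.end - b.start) - intersection
    return intersection / union if union > 0 else 0.0


# Made-up example: a predicted segment versus a human annotation.
predicted = Segment(start=30, end=90, label="jump")
annotated = Segment(start=45, end=105, label="jump")
overlap = temporal_iou(predicted, annotated)
print(f"temporal IoU: {overlap:.2f}")
```

Here the two segments share 45 frames out of a combined span of 75, so the overlap is 0.6. A localization benchmark would typically count a prediction as correct when this value exceeds a chosen threshold and the labels match.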

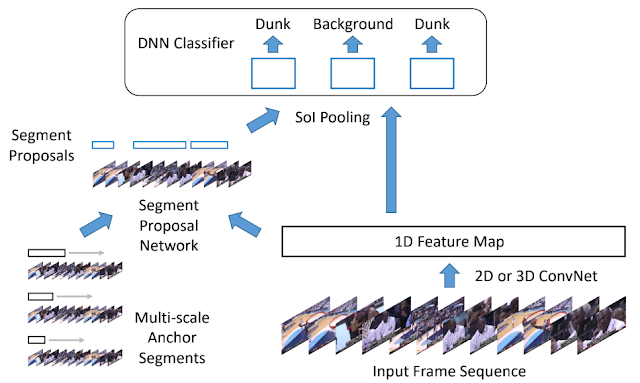

The solution proposed by the researchers is inspired by Faster R-CNN: instead of a region proposal module, they employ a segment proposal network that passes a number of candidate segments to the classifier in the next step. The whole architecture can be seen in the diagram.
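
As a rough illustration of what "segment proposals" means, the sketch below enumerates candidate frame ranges of several lengths centred at regular positions along the video, by analogy with anchor boxes in Faster R-CNN. It is not TALNet's implementation: the function name, stride, and scales are assumptions chosen for the example, and the learned scoring and boundary refinement that a real proposal network performs are omitted.

```python
from typing import List, Sequence, Tuple


def anchor_segments(
    num_frames: int, stride: int, scales: Sequence[int]
) -> List[Tuple[int, int]]:
    """Enumerate candidate (start, end) frame ranges, clipped to the video.

    One candidate per scale is centred every `stride` frames. All parameter
    values are hypothetical; a trained network would score and refine them.
    """
    segments = []
    for center in range(0, num_frames, stride):
        for length in scales:
            start = max(0, center - length // 2)
            end = min(num_frames, center + length // 2)
            if end > start:  # skip candidates clipped down to nothing
                segments.append((start, end))
    return segments


# Made-up settings: a 64-frame clip, centres every 16 frames, two lengths.
candidates = anchor_segments(num_frames=64, stride=16, scales=(8, 32))
for start, end in candidates:
    print(start, end)
```

Using several scales at each position lets short and long actions both be covered by some candidate, which is the same motivation as multi-scale anchors in object detection.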

The new model, named TALNet, achieves state-of-the-art performance on both the action proposal and action localization tasks on the THUMOS’14 benchmark. The model is now part of a new feature in Google Photos that creates animations of interesting moments from users’ videos.