In a new paper, researchers from Google DeepMind, led by Ang Li, have introduced a generalized framework for population-based training of neural network models.

Population-based training (PBT) is an approach that jointly optimizes a neural network's weights and its hyperparameters. The approach was also introduced by DeepMind, back in 2017.

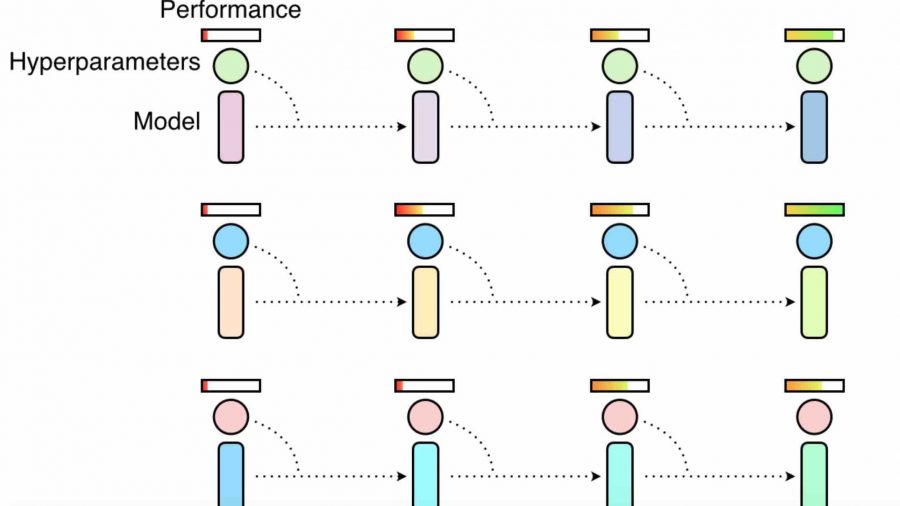

Population-based training is a simple technique, and it is in effect a hybrid of the two most commonly used methods for hyperparameter optimization: random search and hand-tuning. Instead of fixing hyperparameters before training starts, PBT changes both hyperparameters and model weights dynamically: a population of models is trained in parallel, and PBT periodically copies the weights of the best performers into weaker members and mutates their hyperparameters while training continues.
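The copy-and-mutate loop described above can be sketched on a toy problem. This is a minimal illustration, not DeepMind's implementation: the quadratic loss, the function names (`train_step`, `evaluate`, `pbt`), the top/bottom quarter split, the 0.8/1.2 perturbation factors, and the learning-rate cap of 0.4 are all assumptions chosen to keep the example small and stable.

```python
import random

def train_step(theta, lr):
    # One gradient-descent step on the toy loss f(theta) = theta**2.
    return theta - lr * 2.0 * theta

def evaluate(theta):
    # Higher is better, so return the negative loss.
    return -theta ** 2

def pbt(population_size=8, rounds=20, steps_per_round=5, seed=0):
    rng = random.Random(seed)
    # Each member holds its own "weights" (theta) and hyperparameter (lr).
    population = [
        {"theta": 5.0, "lr": 10 ** rng.uniform(-4, -1)}
        for _ in range(population_size)
    ]
    cutoff = max(1, population_size // 4)
    for _ in range(rounds):
        # Train every member for a short interval, then score it.
        for member in population:
            for _ in range(steps_per_round):
                member["theta"] = train_step(member["theta"], member["lr"])
            member["score"] = evaluate(member["theta"])
        population.sort(key=lambda m: m["score"], reverse=True)
        top, bottom = population[:cutoff], population[-cutoff:]
        for loser in bottom:
            winner = rng.choice(top)
            # Exploit: copy the weights of a top performer.
            loser["theta"] = winner["theta"]
            # Explore: perturb the copied hyperparameter (capped for stability).
            loser["lr"] = min(0.4, winner["lr"] * rng.choice([0.8, 1.2]))
    return max(population, key=lambda m: evaluate(m["theta"]))
```

Calling `pbt()` returns the best member as a dictionary holding its final `theta` and the learning rate it ended up with. Note that the hyperparameter is never fixed in advance: it drifts over the course of training as weaker members inherit and perturb the settings of stronger ones.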

Arguing that population-based training has so far always been implemented as a synchronized glass-box system, the team at DeepMind proposes a new generalized black-box framework for population-based training.

The core idea behind the proposed framework is to decompose the whole model training into multiple trials, where in each trial a worker trains for only a limited amount of time.

Each of these trials depends on one or more other trials, and a controller is introduced to manage the whole population of trials.
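To make the trial and controller decomposition more concrete, here is a rough sketch under stated assumptions. The `Trial`, `worker`, and `Controller` names, the `suggest`/`report` interface, the tournament-style parent selection, and the toy quadratic objective are all hypothetical and are not taken from the paper's API. The point is only that the controller sees each worker as a black box: it picks a parent trial and hyperparameters, and receives back a checkpoint and a score.

```python
import random
from dataclasses import dataclass
from typing import Optional

@dataclass
class Trial:
    trial_id: int
    parent_id: Optional[int]  # trial whose checkpoint this one starts from
    lr: float                 # hyperparameter chosen by the controller
    theta: float = 0.0        # "checkpoint" produced by the worker
    score: float = float("-inf")

def worker(trial, start_theta, steps=5):
    # Black box to the controller: train briefly on f(theta) = theta**2,
    # then return the new checkpoint and its score (negative loss).
    theta = start_theta
    for _ in range(steps):
        theta -= trial.lr * 2.0 * theta
    return theta, -theta ** 2

class Controller:
    def __init__(self, warmup=4, seed=0):
        self.rng = random.Random(seed)
        self.warmup = warmup
        self.trials = []  # finished trials, indexed by trial_id

    def suggest(self):
        trial_id = len(self.trials)
        if trial_id < self.warmup:
            # Initial trials have no parent and use random hyperparameters.
            return Trial(trial_id, None, 10 ** self.rng.uniform(-3, -1))
        # Pick a parent by a small tournament, then perturb its hyperparameter.
        parent = max(self.rng.sample(self.trials, 3), key=lambda t: t.score)
        lr = min(0.4, parent.lr * self.rng.choice([0.8, 1.2]))
        return Trial(trial_id, parent.trial_id, lr)

    def report(self, trial, theta, score):
        trial.theta, trial.score = theta, score
        self.trials.append(trial)

def run(num_trials=40, init_theta=5.0):
    controller = Controller()
    for _ in range(num_trials):
        trial = controller.suggest()
        if trial.parent_id is None:
            start = init_theta
        else:
            # Warm-start from the parent trial's checkpoint.
            start = controller.trials[trial.parent_id].theta
        theta, score = worker(trial, start)
        controller.report(trial, theta, score)
    return controller.trials
```

The loop in `run` is sequential for simplicity. Because each trial only needs its parent's checkpoint and a set of hyperparameters, the same `suggest`/`report` exchange could in principle be served to many workers at once without synchronizing them.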

According to DeepMind, this framework will drastically improve the extensibility and scalability of training. It allows hyperparameters to be tuned regardless of whether they are defined in the computation graph, supports training with non-differentiable objectives, and permits hyperparameters that change dynamically during training.

Together, these capabilities are expected to help deep learning practitioners and researchers train and tune models faster. More about the new framework can be read in the paper, which is available on arXiv.