A group of researchers from the University of Oxford, Adobe Research, and UC Berkeley has proposed an interactive method for sketch-to-image translation based on Generative Adversarial Networks. The approach rests on an interesting idea: a neural network model works together with the user to create the desired result.

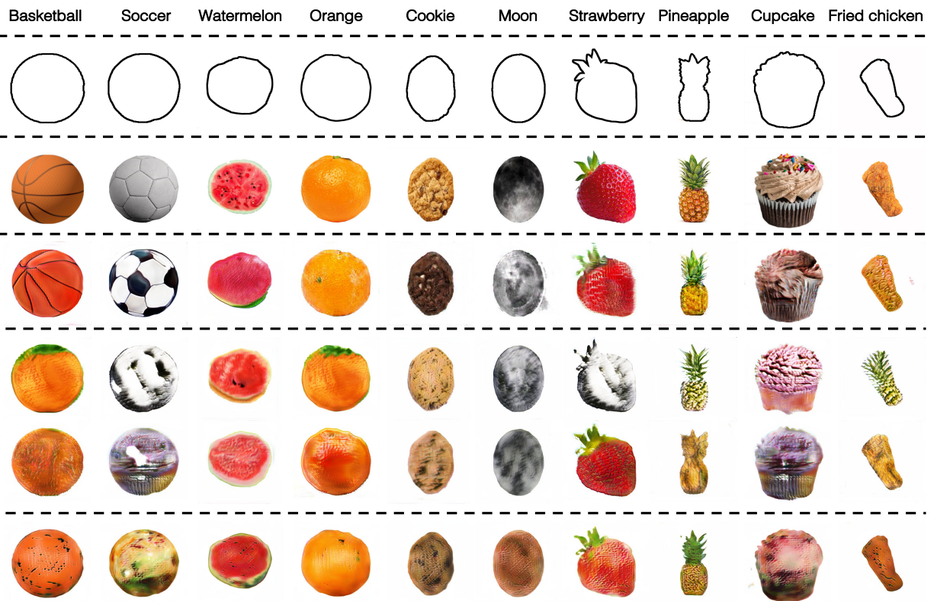

The proposed method incorporates a feedback loop in which the network interactively recommends plausible completions as the user starts to draw a sketch. The researchers leveraged generative models, specifically Generative Adversarial Networks (GANs), to build a multi-class sketch-and-fill method. They tackle the problem by dividing it into two smaller sub-problems: object shape completion and appearance synthesis. A shape generator automatically produces a complete shape, or outline, from the user's partial drawing. The appearance generator, which handles appearance synthesis, takes this shape as input and synthesizes the final image, which is then “judged” by a discriminator.
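The two-stage data flow described above can be sketched in a few lines of Python. This is only an illustration of how the stages chain together, not the researchers' implementation: the function names are hypothetical, the trained generators are replaced by trivial hand-written rules (mirroring strokes and filling between them), and the discriminator, which is only needed during training, is left out.

```python
from typing import List, Tuple

Grid = List[List[int]]  # toy raster: 0 = empty, 1 = stroke, 2 = filled interior


def complete_shape(partial: Grid) -> Grid:
    """Hypothetical stand-in for the shape generator.

    A real model would predict a plausible outline; here each row of
    strokes is simply mirrored left-to-right.
    """
    return [[max(row[c], row[-1 - c]) for c in range(len(row))] for row in partial]


def synthesize_appearance(outline: Grid, fill: int = 2) -> Grid:
    """Hypothetical stand-in for the appearance generator.

    A real model would synthesize colour and texture; here the pixels
    between the first and last stroke of each row are marked as filled.
    """
    image = []
    for row in outline:
        stroke_cols = [c for c, value in enumerate(row) if value]
        if not stroke_cols:
            image.append([0] * len(row))
            continue
        lo, hi = stroke_cols[0], stroke_cols[-1]
        image.append(
            [(1 if row[c] else fill) if lo <= c <= hi else 0 for c in range(len(row))]
        )
    return image


def sketch_to_image(partial: Grid) -> Tuple[Grid, Grid]:
    """Stage 1 completes the shape; stage 2 consumes that shape."""
    outline = complete_shape(partial)
    return outline, synthesize_appearance(outline)
```

Calling `sketch_to_image` again after every new stroke would mimic the feedback loop: the user sees an updated completion each time the partial sketch changes.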

To make this approach work for a wide variety of objects with a single model, the researchers introduced a gating-based approach for class conditioning. The method was evaluated on both single-class and multi-class image generation using a new dataset consisting of several hundred images of 10 object classes.
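As a rough illustration of what gating-based class conditioning can look like, the sketch below scales the output of a residual block by per-channel gates that depend on the object class. This is one common form of gating and an assumption on our part; the paper's exact formulation may differ. The class names, gate values, and function names are all hypothetical, and in a trained model the gate logits would be learned rather than hard-coded.

```python
import math
from typing import Dict, List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical gate logits, one per feature channel and per class.
# In a real network these would be learned parameters.
GATE_LOGITS: Dict[str, List[float]] = {
    "class_a": [4.0, -4.0, 0.0],
    "class_b": [-4.0, 4.0, 0.0],
}


def gated_residual(
    features: List[float], block_output: List[float], class_name: str
) -> List[float]:
    """Return features + gate * block_output, channel by channel.

    A gate near 1 lets the block's output through for this class;
    a gate near 0 effectively switches that channel off.
    """
    gates = [sigmoid(z) for z in GATE_LOGITS[class_name]]
    return [x + g * f for x, g, f in zip(features, gates, block_output)]
```

With the same input features and block output, `class_a` mostly passes the first channel and suppresses the second, while `class_b` does the opposite, so one set of shared weights can behave differently for each class.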

The implementation of the method was open-sourced and is available on the project’s website along with the paper and sample results from the method.