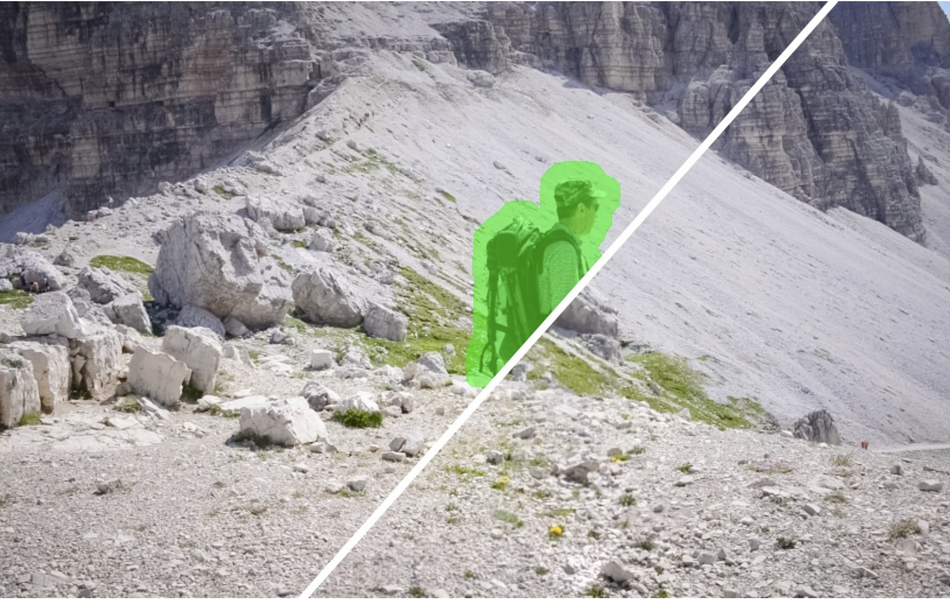

Researchers from Virginia Tech and Facebook have developed a video completion method that can smoothly remove objects from videos.

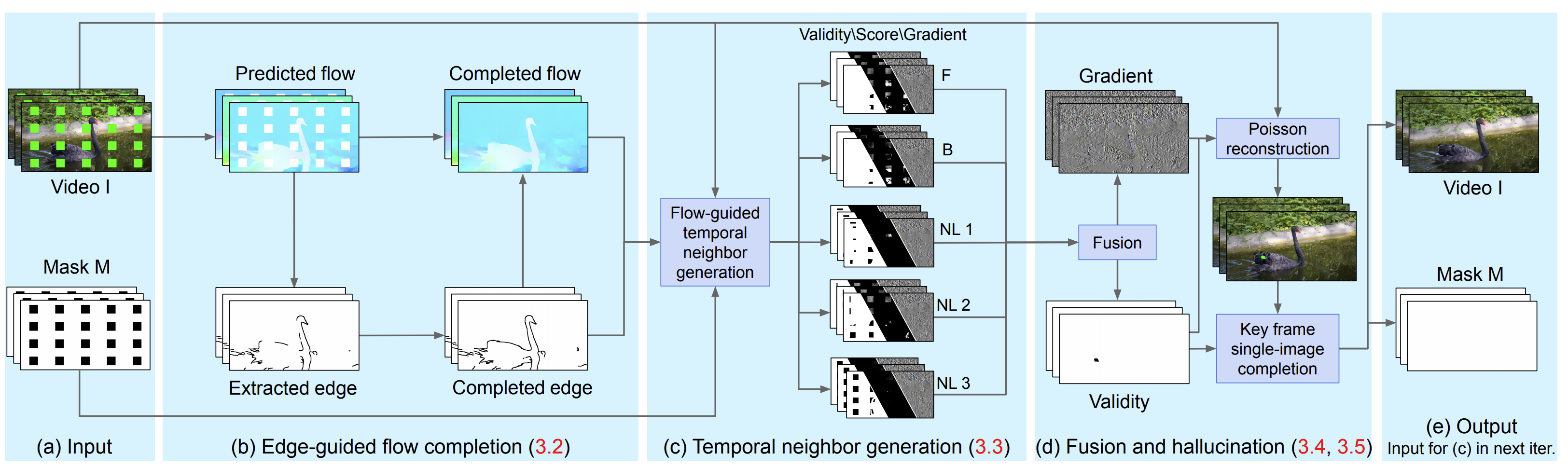

In their paper, “Flow-edge Guided Video Completion”, the group describes a novel method that uses completed flow edges as a guide for piecewise-smooth flow completion. Unlike existing methods, the proposed method does not follow the standard practice of propagating color between adjacent frames. Another contribution of the paper is the introduction of non-local flow connections, which link temporally distant frames.
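To make the idea of non-local connections concrete, here is a minimal sketch of how the frame pairs for flow computation could be enumerated. The function name `flow_pairs` and the choice of the first, middle, and last frames as distant targets are assumptions made for illustration, not details taken from this article.

```python
def flow_pairs(num_frames, anchors=None):
    """Return (source, target) frame index pairs for which flow would be computed."""
    if anchors is None:
        # Assumption: use the first, middle and last frames as distant targets.
        anchors = sorted({0, num_frames // 2, num_frames - 1})
    pairs = []
    for i in range(num_frames):
        # Local connections: forward and backward flow between adjacent frames.
        if i + 1 < num_frames:
            pairs.append((i, i + 1))
        if i - 1 >= 0:
            pairs.append((i, i - 1))
        # Non-local connections: skip the frame itself and its direct neighbors,
        # which are already covered by the local connections above.
        for a in anchors:
            if abs(a - i) > 1:
                pairs.append((i, a))
    return pairs


if __name__ == "__main__":
    for source, target in flow_pairs(5):
        print(source, "->", target)
```

With non-local pairs in the list, a pixel that is hidden in all nearby frames can still borrow content from a distant frame in which it is visible.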

Given an input video and a binary mask, the proposed method computes forward and backward flow for each pair of adjacent frames as well as for a set of non-adjacent frames. It then extracts motion edges from the flow and completes them inside the masked region. These completed edges guide the piecewise-smooth flow completion. From the resulting flow trajectories, the method computes a set of candidate pixels for each missing pixel, each accompanied by a confidence score. In the “Fusion and hallucination” step, the method chooses the frame with the most missing pixels and applies an image inpainting method to fill them. The result is passed to the next iteration, and the procedure repeats until all missing pixels are filled.
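The iterative loop described above can be sketched on toy data. This is a simplified illustration under several loud assumptions, not the authors' implementation: frames are 1-D lists with `None` marking missing pixels, the flow is assumed to be zero (so a pixel's candidates are the same position in other frames), confidence is a hypothetical `1 / (1 + temporal distance)`, candidates are fused by a confidence-weighted average, and the `inpaint` helper is a stand-in for a real single-image inpainting model.

```python
def fuse(candidates):
    """Confidence-weighted average of (value, confidence) pairs, or None if empty."""
    total = sum(conf for _, conf in candidates)
    if total <= 0:
        return None
    return sum(value * conf for value, conf in candidates) / total


def propagate(frames):
    """Fill missing pixels from other frames (toy case: zero flow)."""
    # Read from a snapshot so the result does not depend on frame order.
    snapshot = [list(f) for f in frames]
    for t, frame in enumerate(frames):
        for p, value in enumerate(frame):
            if value is None:
                candidates = [
                    # Hypothetical confidence: closer frames are trusted more.
                    (other[p], 1.0 / (1 + abs(t - s)))
                    for s, other in enumerate(snapshot)
                    if other[p] is not None
                ]
                frame[p] = fuse(candidates)


def inpaint(frame):
    """Placeholder for an image inpainting model: fill with the mean of known pixels."""
    known = [v for v in frame if v is not None]
    fill = sum(known) / len(known) if known else 0.0
    return [fill if v is None else v for v in frame]


def complete_video(frames):
    frames = [list(f) for f in frames]
    while True:
        propagate(frames)
        missing = [sum(v is None for v in f) for f in frames]
        if not any(missing):
            return frames
        # Pick the frame with the most missing pixels and hallucinate its content;
        # the next iteration propagates that content to the other frames.
        key = max(range(len(frames)), key=missing.__getitem__)
        frames[key] = inpaint(frames[key])


if __name__ == "__main__":
    video = [[1.0, None, 3.0], [1.0, None, None], [1.0, None, 5.0]]
    print(complete_video(video))
```

In the toy input, the middle pixel is missing in every frame, so propagation alone cannot fill it. One frame is inpainted first, and the following iteration spreads that value to the remaining frames, which mirrors the alternation between propagation and inpainting described in the text.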

The researchers evaluated the method on the DAVIS dataset, which contains 150 video sequences. Both qualitative and quantitative evaluations showed that the method outperforms or performs on par with existing state-of-the-art methods. The researchers reported a processing speed of approximately 7.2 frames per second for their method.

More details about the architecture, the training experiments, and the evaluation of the new method can be found in the paper or on the project website. The implementation is open source and available on GitHub.