Researchers from Shanghai Jiao Tong University, the University of California and Google have proposed a novel method for video frame interpolation based on depth information.

Video frame interpolation has been a difficult problem in computer vision for the past few decades. Most recent approaches address it with convolutional neural networks. However, the task remains non-trivial because of object motion and occlusions in videos.

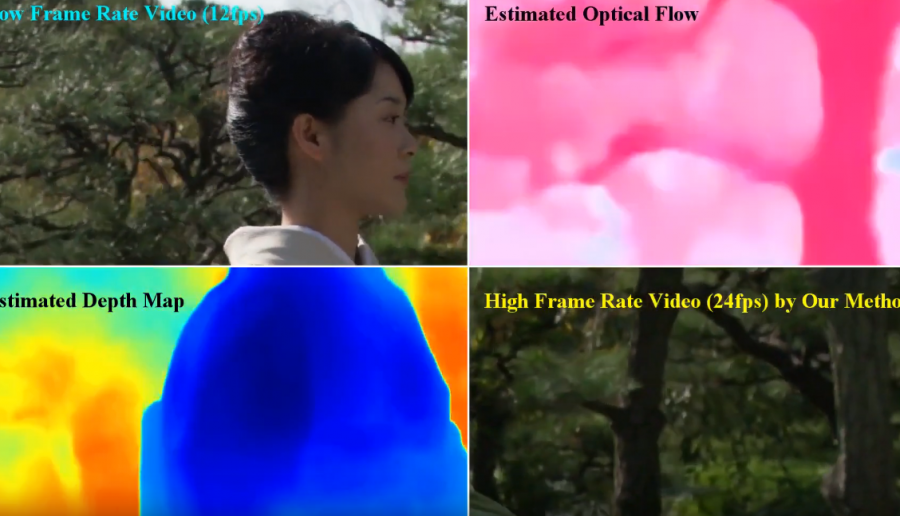

To overcome these problems, the researchers proposed a video frame interpolation method that exploits depth information to detect partially or fully occluded objects.

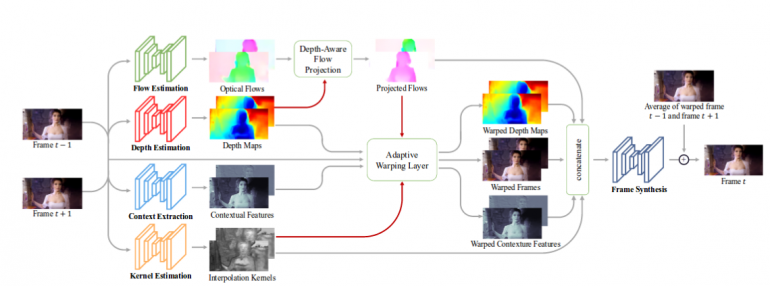

In the new method, the researchers propose a depth-aware flow projection layer that takes the optical flows and depth maps extracted from the video frames and outputs projected flows. An adaptive warping layer then takes the projected flows, together with the depth maps, encoded contextual features and interpolation kernels, and produces warped depth maps, warped frames and warped contextual features. The warped features are concatenated, and a frame synthesis module generates the output frame. The architecture is shown in the accompanying diagram.
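To make the flow projection step more concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. It assumes that each pixel of the first frame is moved along its flow to the intermediate time `t`, and that when several pixels land on the same location their flows are averaged with weights equal to inverse depth, so that closer objects dominate. The function name `project_flow`, the grid-of-tuples data layout and the nearest-pixel rounding are illustrative choices, and hole filling is left out.

```python
import math


def project_flow(flow, depth, t):
    """Depth-weighted projection of a flow field to an intermediate time t.

    flow:  H x W grid of (dx, dy) tuples, flow from frame 0 to frame 1.
    depth: H x W grid of positive depth values for frame 0.
    t:     intermediate time, 0 < t < 1.

    Returns an H x W grid holding the flow from time t back to frame 0
    as (dx, dy), or None where no source pixel lands (a hole).
    """
    h, w = len(flow), len(flow[0])
    # Per target pixel: [sum of w*dx, sum of w*dy, sum of w].
    acc = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]

    for y in range(h):
        for x in range(w):
            dx, dy = flow[y][x]
            # Position of this pixel at time t, rounded to the nearest pixel.
            tx = math.floor(x + t * dx + 0.5)
            ty = math.floor(y + t * dy + 0.5)
            if 0 <= tx < w and 0 <= ty < h:
                weight = 1.0 / depth[y][x]  # assumed: inverse-depth weight
                cell = acc[ty][tx]
                cell[0] += weight * dx
                cell[1] += weight * dy
                cell[2] += weight

    projected = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sum_dx, sum_dy, sum_w = acc[y][x]
            if sum_w > 0:
                # Reverse the direction and scale by t to point back to frame 0.
                projected[y][x] = (-t * sum_dx / sum_w, -t * sum_dy / sum_w)
    return projected


# One row of four pixels: a near object at x=0 moves 4 pixels to the right,
# while the far background stays still.
flow = [[(4.0, 0.0), (0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]]
depth = [[1.0, 4.0, 4.0, 4.0]]
result = project_flow(flow, depth, t=0.5)
```

In this toy example the near object and a background pixel both land on `x=2` at `t=0.5`. The near pixel has weight 1 and the background pixel has weight 0.25, so the projected flow there is pulled strongly toward the foreground motion instead of being a plain average. The location the object left behind receives no flow at all, which is the kind of hole a full implementation would have to fill.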

Quantitative and qualitative evaluation showed that the method achieves favorable results against state-of-the-art methods in video frame interpolation. The source code, along with a pre-trained model, is available on GitHub.