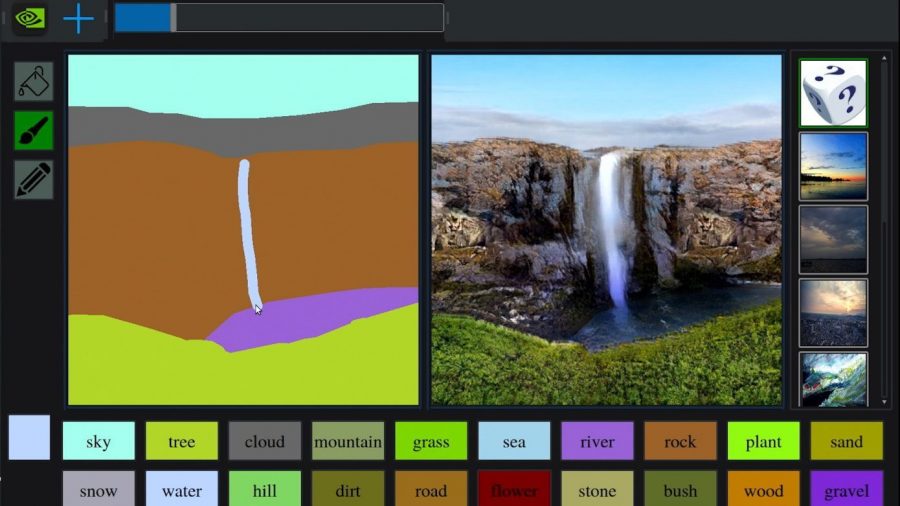

Today, at its annual GPU Technology Conference (GTC), NVIDIA introduced GauGAN, an AI-based tool that turns sketches into photorealistic images.

The new image creation tool uses generative adversarial networks (GANs) to convert a segmentation map, in practice a user’s rough sketch in which each region is labeled with what it should contain, into a lifelike image. The aim is to render the scene much as a person would imagine it.
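To make the idea of a segmentation map concrete, here is a minimal sketch in Python with NumPy. The class names, the tiny 3×4 grid, and the helper `to_one_hot` are illustrative assumptions, not part of GauGAN itself; the one-hot layout is simply a common way to feed such a map to a neural network.

```python
import numpy as np

# Hypothetical label set, chosen only for illustration.
CLASSES = ["sky", "mountain", "water"]

def to_one_hot(seg_map, num_classes):
    """Turn an (H, W) map of integer class labels into a
    (num_classes, H, W) stack with one binary mask per class."""
    class_ids = np.arange(num_classes)[:, None, None]
    return (class_ids == seg_map[None]).astype(np.float32)

# A tiny "sketch": sky on top, a mountain in the middle, water below.
seg_map = np.array([
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [2, 2, 2, 2],
])

one_hot = to_one_hot(seg_map, len(CLASSES))
print(one_hot.shape)  # (3, 3, 4): one mask per class
```

Every pixel belongs to exactly one class, so the masks sum to one at each location.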

![image 2](https://cdn.vox-cdn.com/uploads/chorus_asset/file/15972196/nvidia_gaugan_gif.gif)

A tool like GauGAN has a number of potential applications. Because it can output highly realistic images, thanks to recent advances in generative adversarial networks, it could be useful to anyone who needs to turn a rough idea into a convincing visual quickly.

“It’s much easier to brainstorm designs with simple sketches, and this technology is able to convert sketches into highly realistic images,” says Bryan Catanzaro, vice president of applied deep learning research at NVIDIA.

GauGAN’s generative neural network was trained on a million images so that it can synthesize photorealistic landscapes from a segmentation map alone. It learned a multi-modal distribution, which lets GauGAN produce different results for the same user input (or sketch). The underlying method, spatially-adaptive normalization (SPADE), is described in the paper “Semantic Image Synthesis with Spatially-Adaptive Normalization,” accepted at CVPR 2019.
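The core idea of spatially-adaptive normalization can be sketched in a few lines of NumPy: activations are first normalized per channel, then rescaled and shifted by values that depend on the class label at each pixel. This is a simplified illustration, not NVIDIA's implementation. In the paper the scale and shift are produced by small convolutional networks applied to the segmentation map and the statistics are computed over a batch; here, as a stated assumption, they are replaced by per-class lookup tables (`gamma_table`, `beta_table`) and single-image statistics. All names are hypothetical.

```python
import numpy as np

def spade_sketch(features, seg_map, gamma_table, beta_table, eps=1e-5):
    """Simplified spatially-adaptive normalization.

    features:    (C, H, W) activations of one image
    seg_map:     (H, W) integer class labels
    gamma_table: (num_classes, C) per-class scale (stand-in for a conv net)
    beta_table:  (num_classes, C) per-class shift (stand-in for a conv net)
    """
    # Parameter-free normalization: zero mean, unit variance per channel.
    mean = features.mean(axis=(1, 2), keepdims=True)
    var = features.var(axis=(1, 2), keepdims=True)
    normalized = (features - mean) / np.sqrt(var + eps)

    # Look up a scale and shift for every pixel from its class label.
    # Indexing gives (H, W, C); move channels to the front to get (C, H, W).
    gamma = np.moveaxis(gamma_table[seg_map], -1, 0)
    beta = np.moveaxis(beta_table[seg_map], -1, 0)

    # The modulation varies across the image, following the sketch layout.
    return gamma * normalized + beta

rng = np.random.default_rng(0)
features = rng.normal(size=(2, 4, 4))
seg_map = np.zeros((4, 4), dtype=int)
seg_map[2:, :] = 1  # top half is class 0, bottom half is class 1
gamma_table = np.array([[1.0, 1.0], [2.0, 0.5]])
beta_table = np.array([[0.0, 0.0], [1.0, -1.0]])

out = spade_sketch(features, seg_map, gamma_table, beta_table)
print(out.shape)  # (2, 4, 4)
```

Because the scale and shift follow the segmentation map, the label information is reinjected at every normalization layer instead of being washed out by it, which is the motivation the paper gives for the design.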

The tool produces results in real time when running on a Tensor computing platform. More about NVIDIA’s new image creator can be found in the official blog post.