A group of researchers from Columbia University has proposed a new dataset and method for prediction unintentional action in video.

In their paper – “Oops Predicting Unintentional Action in Video”, researchers have described their new dataset called OOPS together with a baseline method for predicting unintentional action.

Humans have the ability to instantly tell whether a person’s action is intentional or not, but is this the case with machines? The group actually tried to give an answer to this question and it served as motivation to create a dataset and explore possible solutions to this problem.

They proposed a dataset that consists of more than 20 000 videos from YouYube, collected from video compilations of people’s failures. The source and the amount of data actually provide a large variety of actions, intentions, and scenes which researchers exploited in order to create a diverse dataset. It contains more than 50 hours of data filmed by amateur videographers in the real world. The video clips were manually annotated with the temporal locations of failure using Amazon Mechanical Turk.

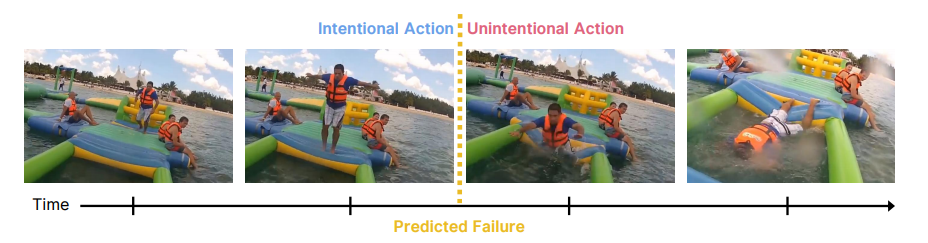

The dataset was then used by researchers to create a self-supervising method for classifying the action into three classes: intentional, unintentional and transitional. They showed that there exist perceptual clues in unlabeled video that can be leveraged to recognize “intentionality” in realistic videos. However, researchers note that there is still a big gap between human performance and the one from the models, meaning that recognizing intentional/unintentional actions in the video remains a fundamental challenge.

The OOPS dataset was open-sourced and is available here, together with extracted dataset statistics. The dataset creation and the proposed method are explained more in detail in the published paper as well as on the project’s website. According to the site, the implementation of the method will also be open-sourced.