Researchers from OpenAI have proposed new implicit data generation methods for Energy-Based Models.

Energy-Based Models (EBMs) capture dependencies in data by assigning a scalar measure of compatibility, called energy, to each configuration of the variables. Plausible configurations receive low energy and implausible ones receive high energy.

EBMs are flexible, but sampling from the distribution they define requires an iterative energy-minimization procedure.

New methods proposed by researchers at OpenAI combine Energy-Based Models with a refinement process called Langevin Dynamics.
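To make the idea of refinement concrete, here is a minimal sketch of Langevin dynamics on a toy problem. It is not the researchers' implementation: the quadratic `energy` function stands in for a learned neural network, and the names `langevin_sample`, `step_size` and `n_steps` are assumptions made for illustration. Each step moves the samples down the energy gradient and adds Gaussian noise.

```python
import numpy as np

def energy(x, center=2.0):
    """Toy energy (assumption): low near `center`, high elsewhere.
    In a real EBM this would be a learned neural network."""
    return 0.5 * np.sum((x - center) ** 2, axis=-1)

def energy_grad(x, center=2.0):
    """Analytic gradient of the toy energy with respect to x."""
    return x - center

def langevin_sample(x0, n_steps, step_size=0.1, rng=None):
    """Refine samples with Langevin dynamics: a gradient step on the
    energy plus Gaussian noise, repeated n_steps times."""
    rng = np.random.default_rng(0) if rng is None else rng
    x = np.array(x0, dtype=float)
    for _ in range(n_steps):
        noise = rng.normal(size=x.shape)
        x = x - 0.5 * step_size * energy_grad(x) + np.sqrt(step_size) * noise
    return x

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(2000, 2))   # start from random noise

coarse = langevin_sample(x0, n_steps=5, rng=rng)     # short refinement
refined = langevin_sample(x0, n_steps=200, rng=rng)  # long refinement

mean_energy_coarse = energy(coarse).mean()
mean_energy_refined = energy(refined).mean()
print(mean_energy_coarse, mean_energy_refined)
```

In this sketch the number of steps is the only knob that controls how much computation is spent per sample: the longer run ends with lower average energy, with samples concentrated around the low-energy region.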

The researchers cite several benefits of this combination. The first is adaptive computation time, which adds flexibility when using the generative model: sharp, diverse samples require long refinement, while coarser, less diverse samples can be obtained in much less time.

Second, the method does not rely on a generator network, which would have to learn a high-dimensional mapping from a latent space to a potentially disconnected data space containing multiple modes. EBMs can instead assign low energy to disjoint regions directly.

Lastly, the models are compositional, since each one simply defines an unnormalized probability distribution.
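The compositionality point can be illustrated with a small sketch. One common way to combine unnormalized distributions is to multiply them, which corresponds to adding their energies. The two toy energies below, `energy_a` and `energy_b`, are hypothetical stand-ins for separately trained models, not anything taken from the paper.

```python
import numpy as np

def energy_a(x):
    """Hypothetical model A: prefers values near 1."""
    return 0.5 * (x - 1.0) ** 2

def energy_b(x):
    """Hypothetical model B: prefers values near 3."""
    return 0.5 * (x - 3.0) ** 2

def composed_energy(x):
    """Adding energies multiplies the unnormalized densities."""
    return energy_a(x) + energy_b(x)

xs = np.linspace(-2.0, 6.0, 801)

# Unnormalized densities exp(-E(x)); no normalizing constant is needed.
p_a = np.exp(-energy_a(xs))
p_b = np.exp(-energy_b(xs))
p_composed = np.exp(-composed_energy(xs))

# The composed model favors points that both models find compatible.
best_x = xs[np.argmin(composed_energy(xs))]
print(best_x)
```

Because neither model has to be normalized, the combination needs no extra training in this toy setting: the composed energy is lowest at the point that is the best compromise between the two models.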

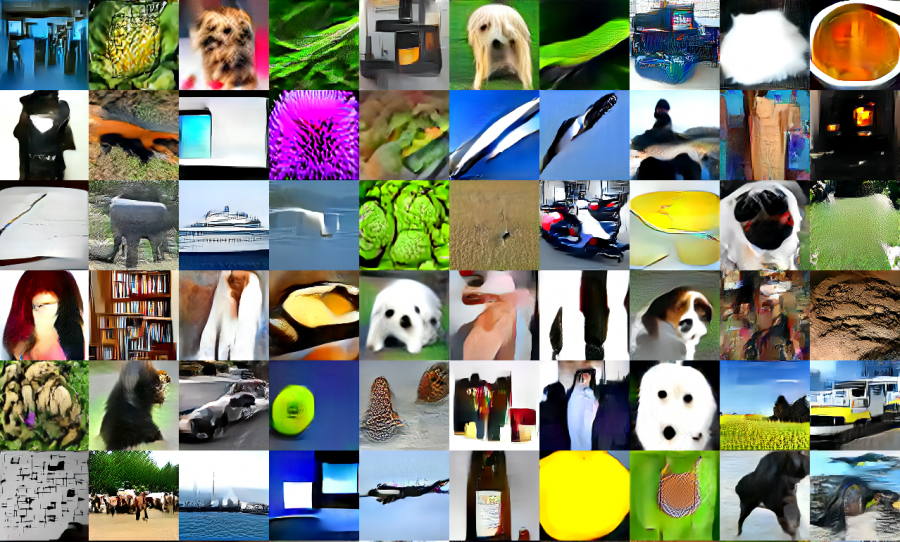

The researchers found that EBMs can generate high-quality images and remain competitive with GANs and Variational Autoencoders.

More about the new methods can be read at OpenAI’s blog. The paper is available on arXiv, and the code, along with pre-trained models, is available here.