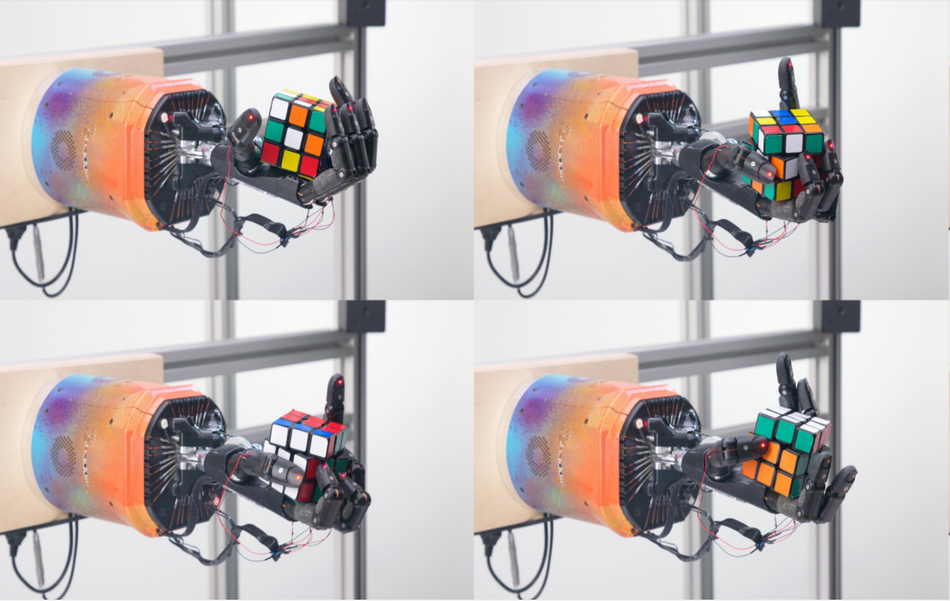

Researchers from OpenAI have announced a human-like robot hand that can solve a Rubik’s Cube. In OpenAI’s latest blog post, the researchers describe their efforts over the past two and a half years to build a robot that can perform a task as complex as solving the Rubik’s Cube.

A pair of neural networks was trained using the same method as the Dota-winning OpenAI Five, along with a new technique called Automatic Domain Randomization (ADR). The networks were trained entirely in a virtual environment (simulation) using reinforcement learning, with Kociemba’s algorithm choosing the sequence of manipulations. ADR generates many different variants of the training environment, which allows the networks to be trained solely on simulation data, without any exposure to the real world. It also allows iterative improvement by progressively changing the environment and its complexity as the networks get better at solving the Rubik’s Cube. The two networks, a vision network and a dynamics (control) network, work together to deliver each solution step. The vision network estimates the position and state of the cube, while the control network learns the dynamics needed to perform the actual finger movements.
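The idea behind progressively changing the environment can be illustrated with a minimal Python sketch. This is not OpenAI's implementation: the `ParamRange` class, the `adr_update` function, the friction parameter, and the thresholds are all hypothetical names and values chosen for illustration. The sketch only assumes that each simulator parameter has a randomization range that widens when the policy performs well and narrows when it performs poorly.

```python
import random
from dataclasses import dataclass


@dataclass
class ParamRange:
    """Randomization range for one simulator parameter (hypothetical)."""
    low: float
    high: float
    step: float  # amount by which the range grows or shrinks per update

    def sample(self, rng: random.Random) -> float:
        # Draw a parameter value for the next simulated episode.
        return rng.uniform(self.low, self.high)

    def widen(self) -> None:
        # Make the environment more varied, and therefore harder.
        self.low -= self.step
        self.high += self.step

    def narrow(self, nominal: float) -> None:
        # Make the environment easier, never shrinking past the nominal value.
        self.low = min(self.low + self.step, nominal)
        self.high = max(self.high - self.step, nominal)


def adr_update(param: ParamRange, nominal: float, success_rate: float,
               expand_at: float = 0.8, shrink_at: float = 0.4) -> ParamRange:
    """Adjust a range based on recent performance (assumed thresholds)."""
    if success_rate >= expand_at:
        param.widen()
    elif success_rate < shrink_at:
        param.narrow(nominal)
    return param


# Start with no randomization: friction is fixed at its nominal value.
friction = ParamRange(low=1.0, high=1.0, step=0.1)
adr_update(friction, nominal=1.0, success_rate=0.9)  # policy does well: widen
value = friction.sample(random.Random(0))            # value for the next episode
```

Starting from a single fixed environment and widening the ranges only as performance allows gives the network a curriculum of gradually increasing difficulty, which is the behavior described above. A real system would also keep each range within physically meaningful bounds.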

According to the researchers, the networks have learned how to perform the challenging task of solving the Rubik’s Cube. They conclude that the networks seem to be surprisingly robust to perturbations even though they were never trained with them.

This week, another group of researchers from Google’s DeepMind also published their work on learning dexterous manipulation using deep learning. More details about OpenAI’s method and results can be found in the official blog post or in the paper.