Researchers from the University of Basilicata have proposed a deep-learning-based method that turns a regular flash selfie into a studio portrait.

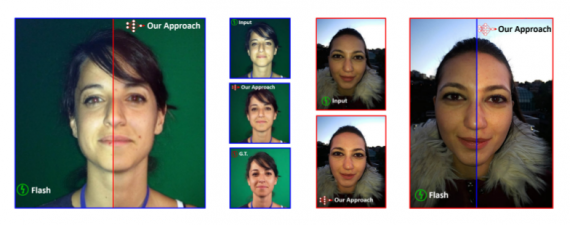

Their method is based on deep convolutional neural networks and transforms a selfie into a photograph that looks as if it had been taken in a studio setting. It does so by detecting and removing defects introduced by a close-up camera flash, such as highlights, shadows, and skin shine.

Arguing that smartphone cameras still perform poorly in low-light conditions despite the improvements of the last few years, the researchers present their correction technique in their paper.

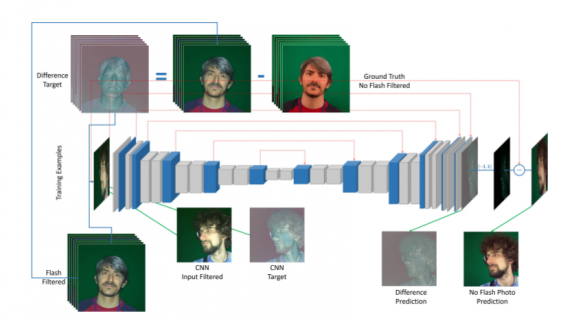

To tackle the problem, they trained a CNN on pairs of portraits, in which one photo is taken with the smartphone flash and the other with photographic studio illumination. Since the photos are taken simultaneously, the pose remains the same (or almost the same) in both photos.

The researchers collected a dedicated dataset of photo pairs for this task. It contains 495 pairs of photos of 101 people in different poses. Data augmentation was then used to expand the dataset to 9900 high-resolution images (3120×4160).
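The article does not say which augmentation techniques were used. As an illustration only, the sketch below assumes random crops and horizontal flips, and shows the one constraint any paired augmentation has to respect: the same transformation must be applied to the flash photo and to its studio counterpart, so that the two stay pixel-aligned. The function names (`hflip`, `crop`, `augment_pair`) and the list-of-rows image representation are hypothetical.

```python
import random

def hflip(img):
    """Mirror an image (a list of pixel rows) left to right."""
    return [row[::-1] for row in img]

def crop(img, top, left, height, width):
    """Cut a height x width window whose top-left corner is (top, left)."""
    return [row[left:left + width] for row in img[top:top + height]]

def augment_pair(flash, studio, crop_h, crop_w, rng):
    """Apply ONE randomly drawn crop and flip to BOTH images of a pair.

    The random parameters are drawn once and shared, so every output
    pixel of the flash patch still corresponds to the same scene point
    in the studio patch. The choice of crop + flip is an assumption.
    """
    h, w = len(flash), len(flash[0])
    top = rng.randint(0, h - crop_h)     # inclusive bounds
    left = rng.randint(0, w - crop_w)
    flip = rng.random() < 0.5
    out = []
    for img in (flash, studio):
        patch = crop(img, top, left, crop_h, crop_w)
        out.append(hflip(patch) if flip else patch)
    return out[0], out[1]

# Toy 8x6 "images" whose pixels record their own coordinates.
rng = random.Random(0)
flash = [[("flash", r, c) for c in range(6)] for r in range(8)]
studio = [[("studio", r, c) for c in range(6)] for r in range(8)]
flash_patch, studio_patch = augment_pair(flash, studio, 4, 3, rng)
```

Drawing the crop offset and the flip decision once per pair, rather than once per image, is what keeps the training target aligned with the input.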

The researchers employed an encoder-decoder CNN architecture. The encoder network maps the input flash image to a lower-dimensional latent representation. The decoder then takes the encoded output and transforms it into a full-resolution image, removing the unwanted artifacts introduced by the flash.

The encoder network is a VGG-16 network, while the decoder is custom-built by stacking different layers. Finally, they introduce skip connections from the encoder to the decoder to improve learning.
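To make the data flow concrete, the following sketch traces feature-map shapes through such a network. The encoder side uses the standard VGG-16 layout (five convolutional blocks with 64, 128, 256, 512, and 512 output channels, each followed by 2×2 max pooling). The decoder side is purely an assumption, since the article gives no details: it mirrors the encoder, doubles the resolution at each step, and concatenates the matching encoder output as a skip connection. The 256×256 input size and the function names are also hypothetical.

```python
# Output channels of the five convolutional blocks of standard VGG-16.
VGG16_BLOCK_CHANNELS = [64, 128, 256, 512, 512]

def encoder_shapes(height, width):
    """Return (channels, h, w) after each VGG-16 block and its 2x2 max pool."""
    shapes = []
    for channels in VGG16_BLOCK_CHANNELS:
        height, width = height // 2, width // 2   # pooling halves each side
        shapes.append((channels, height, width))
    return shapes

def decoder_shapes(enc, out_channels=3):
    """Trace a hypothetical mirrored decoder with skip connections.

    Each step upsamples by 2, concatenates the encoder feature map of
    the same resolution, and (by assumption) convolves the result back
    down to the skip's channel count.
    """
    channels, h, w = enc[-1]                      # latent representation
    steps = []
    for skip_c, skip_h, skip_w in reversed(enc[:-1]):
        h, w = h * 2, w * 2
        assert (h, w) == (skip_h, skip_w), "skip and upsampled maps must align"
        steps.append({"concat_channels": channels + skip_c,
                      "out": (skip_c, h, w)})
        channels = skip_c
    # Last upsampling step restores the input resolution as an RGB image.
    steps.append({"concat_channels": channels,
                  "out": (out_channels, h * 2, w * 2)})
    return steps

enc = encoder_shapes(256, 256)
dec = decoder_shapes(enc)
for shape in enc:
    print("encoder:", shape)
for step in dec:
    print("decoder:", step)
```

In this sketch the spatial sizes only line up when both input dimensions are divisible by 32, because the encoder halves the resolution five times. The skip connections give the decoder access to fine detail that the low-resolution latent representation alone would lose.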

The qualitative and quantitative analyses show that the method achieves its goal and successfully reconstructs flash selfie photos. The full paper can be read on arXiv.