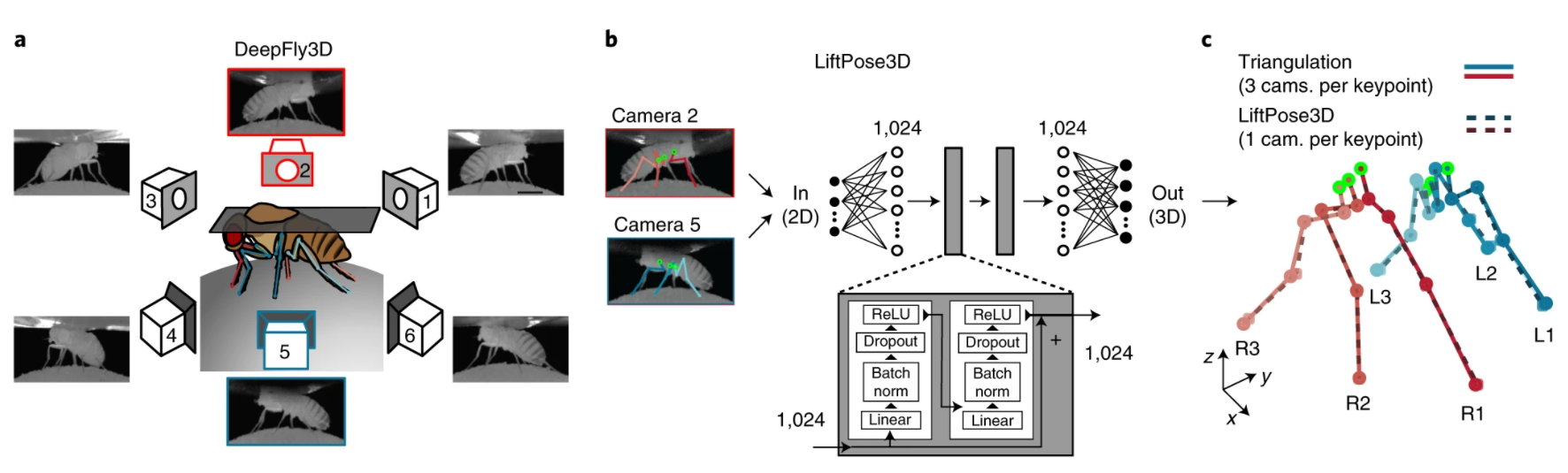

Researchers at the Swiss Federal Institute of Technology in Lausanne (EPFL) have presented LiftPose3D, a neural network that reconstructs a 3D pose from an image taken from a single camera angle. The model has been tested successfully on laboratory animals and makes it possible to estimate a 3D pose without an array of synchronized cameras.

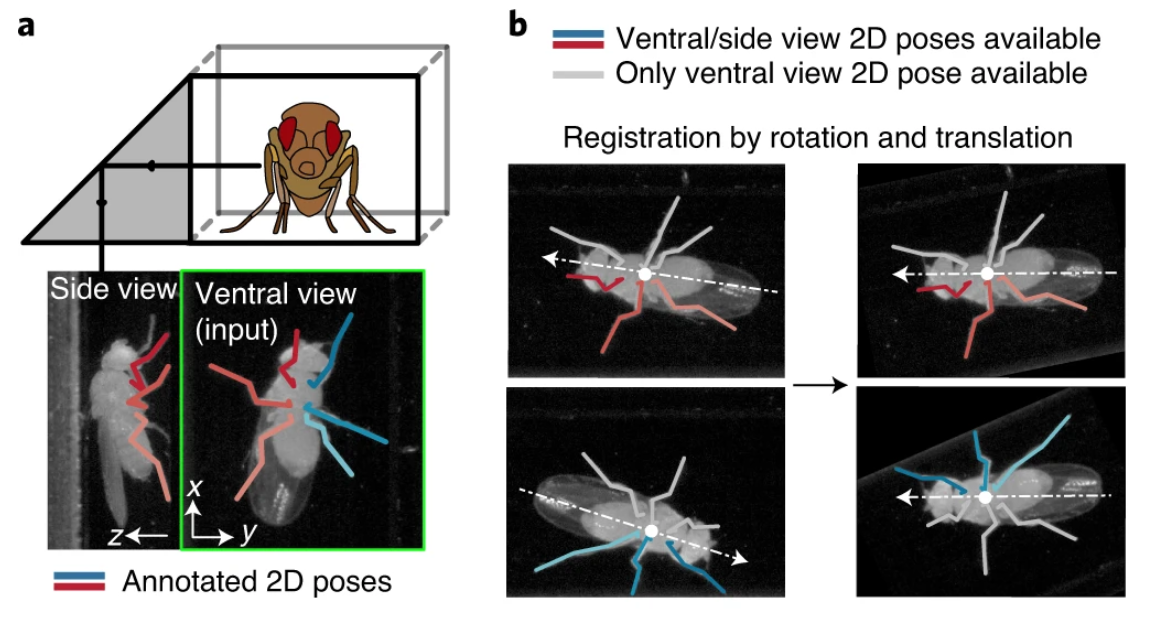

Markerless 3D pose estimation is a key tool for kinematic studies of laboratory animals. Most current methods obtain a 3D pose by triangulating, across multiple camera views, the 2D pose estimates produced by neural networks. However, triangulation requires several cameras and complex calibration protocols, which makes the method difficult to adopt widely in laboratory studies.
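To make the calibration requirement concrete, here is a minimal sketch of two-view triangulation by the midpoint method. It is an illustration only, not the procedure used by the LiftPose3D authors: the function names, camera centers, and ray directions are all hypothetical. Each camera contributes a ray from its center through the detected 2D keypoint, and the 3D joint is taken as the midpoint of the shortest segment between the two rays.

```python
def dot(u, v):
    """Dot product of two 3-vectors."""
    return sum(x * y for x, y in zip(u, v))


def triangulate_midpoint(c1, d1, c2, d2):
    """Estimate a 3D point from two camera rays (hypothetical helper).

    c1, c2: camera centers; d1, d2: ray directions through the detected
    2D keypoint. Both are only known if the cameras are calibrated.
    """
    w = [x - y for x, y in zip(c1, c2)]
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    # Ray parameters that minimize the distance between the two rays.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = [ci + s * di for ci, di in zip(c1, d1)]
    p2 = [ci + t * di for ci, di in zip(c2, d2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Two made-up cameras 4 units apart, both observing the same joint.
point = triangulate_midpoint((0, 0, 0), (1, 2, 5), (4, 0, 0), (-3, 2, 5))
print(point)
```

Every input to the function comes from camera calibration or from a second synchronized view, which is exactly the equipment a single-view lifting network is meant to avoid.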

To train the neural network, the researchers used images of laboratory animals (Drosophila) captured from several angles by a set of synchronized cameras. Seeing the same pose from multiple viewpoints lets the network learn the geometric relationships inherent in animal poses. After training, LiftPose3D can estimate an animal's 3D pose from a single image without additional information such as the camera orientation.

The LiftPose3D architecture consists of two linear layers of dimension 1024 with a ReLU activation function, dropout, and skip (residual) connections. The model contains 4 million trainable parameters, which are optimized with Adam, a variant of stochastic gradient descent. Batch normalization is also applied.
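A quick back-of-the-envelope count shows how 1024-unit linear layers add up to a figure of about 4 million parameters. The sketch below rests on several assumptions that the description above does not confirm: an input layer that maps 2D keypoints to 1024 features, the two-layer residual block stacked twice, an output layer that predicts three coordinates per joint, and a hypothetical 30 joints. The helper names are made up for illustration.

```python
def linear_params(n_in, n_out):
    """Weights plus biases of one fully connected layer."""
    return n_in * n_out + n_out


def batchnorm_params(n_features):
    """Learnable scale and shift of one batch-normalization layer."""
    return 2 * n_features


def lifter_params(n_joints, hidden=1024, n_blocks=2):
    """Trainable parameters of an assumed lifting network layout."""
    n_in = 2 * n_joints   # (x, y) per joint
    n_out = 3 * n_joints  # (x, y, z) per joint, an assumption
    # Assumed input layer: 2D keypoints -> hidden features.
    total = linear_params(n_in, hidden) + batchnorm_params(hidden)
    # Each residual block: two hidden-to-hidden linear layers with batch norm.
    per_block = 2 * (linear_params(hidden, hidden) + batchnorm_params(hidden))
    total += n_blocks * per_block
    # Assumed output layer: hidden features -> 3D coordinates.
    total += linear_params(hidden, n_out)
    return total


N_JOINTS = 30  # hypothetical keypoint count
total = lifter_params(N_JOINTS)
print(f"{total:,} trainable parameters")
```

ReLU, dropout, and the skip connections contribute no trainable parameters, so almost the entire budget sits in the 1024 x 1024 weight matrices. Under these assumptions a single block gives roughly 2.3 million parameters and two blocks roughly 4.4 million.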

To demonstrate the versatility of LiftPose3D, the researchers tested the algorithm on flies, mice, rats, and macaques, including in conditions where 3D triangulation is impractical or impossible. The network was implemented in PyTorch and run on a computer with an Intel Core i9-7900X CPU, 32 GB of RAM, and a GeForce RTX 2080 Ti Dual O11G GPU. Training took less than 10 minutes for each animal.