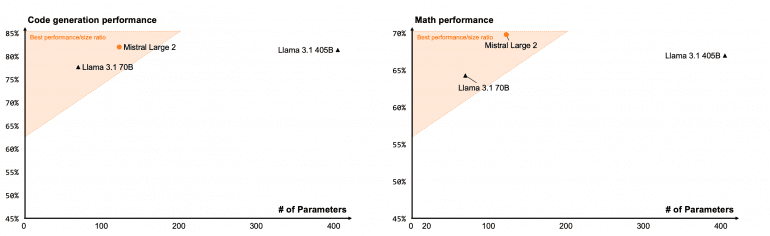

Mistral AI has announced Mistral Large 2, the latest version of its flagship model, which it positions as a new state of the art (SOTA) among open-weight models for code generation. Compared with its predecessor, the new model brings substantial improvements in code generation, mathematics, reasoning, and multilingual support.

Mistral Large 2 has 123 billion parameters and a 128k-token context window, and it is sized so that inference can run on a single node. The large context window makes it well suited to long-context applications, such as working over long documents or extended conversations.
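To give a rough sense of what "single-node inference" implies for a 123-billion-parameter model, the sketch below estimates the memory needed to hold the weights alone at a few common precisions. The precisions and the 8 x 80 GB node are illustrative assumptions, not figures from the announcement, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Back-of-the-envelope estimate of the memory needed just to store the weights.
PARAMS = 123e9  # parameter count stated in the article

# Bytes per parameter for common storage formats (assumed, not from the article).
BYTES_PER_PARAM = {"fp32": 4.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}

# Hypothetical node: 8 accelerators with 80 GB each (an assumption).
NODE_MEMORY_GB = 8 * 80


def weight_memory_gb(params: float, bytes_per_param: float) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return params * bytes_per_param / 1e9


for name, nbytes in BYTES_PER_PARAM.items():
    needed = weight_memory_gb(PARAMS, nbytes)
    verdict = "fits" if needed < NODE_MEMORY_GB else "does not fit"
    print(f"{name}: {needed:.1f} GB of weights, {verdict} in {NODE_MEMORY_GB} GB")
```

At 2 bytes per parameter the weights come to about 246 GB, which leaves headroom on the hypothetical node for the KV cache that long contexts require.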

Key Features

- Context Window: Supports up to 128k tokens, allowing for the processing and generation of extensive sequences.

- Multilingual Support: Includes support for 13 languages, such as French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean.

- Code Generation: Supports more than 80 programming languages, including Python, Java, C, C++, JavaScript, and Bash, making it versatile across a wide range of programming tasks.
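In practice, the context window is shared between the prompt and the generated output, so it can help to check the budget before sending a request. The following is a minimal sketch under two assumptions: that "128k" means exactly 128,000 tokens (it could also mean 131,072), and that token counts come from the model's own tokenizer. The function names are hypothetical.

```python
# Assumed exact limit; "128k" in the article could also mean 131,072 tokens.
CONTEXT_WINDOW = 128_000


def remaining_output_budget(prompt_tokens: int, context_window: int = CONTEXT_WINDOW) -> int:
    """Return how many tokens are left for generation after the prompt (never negative)."""
    if prompt_tokens < 0:
        raise ValueError("prompt_tokens must be non-negative")
    return max(context_window - prompt_tokens, 0)


def fits(prompt_tokens: int, max_new_tokens: int, context_window: int = CONTEXT_WINDOW) -> bool:
    """Return True if the prompt plus the requested completion fit in the window."""
    return max_new_tokens <= remaining_output_budget(prompt_tokens, context_window)
```

For example, a 120,000-token prompt leaves room for an 8,000-token completion under this assumed limit, while a 127,000-token prompt with a 4,000-token completion would not fit.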

Performance and Benchmarks

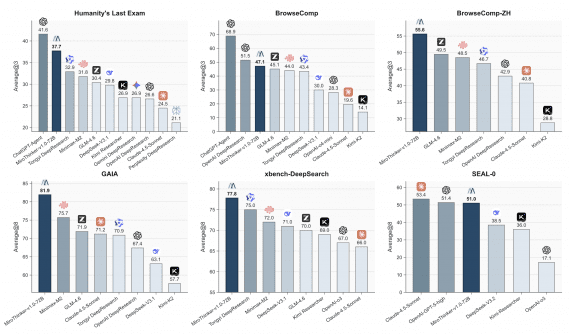

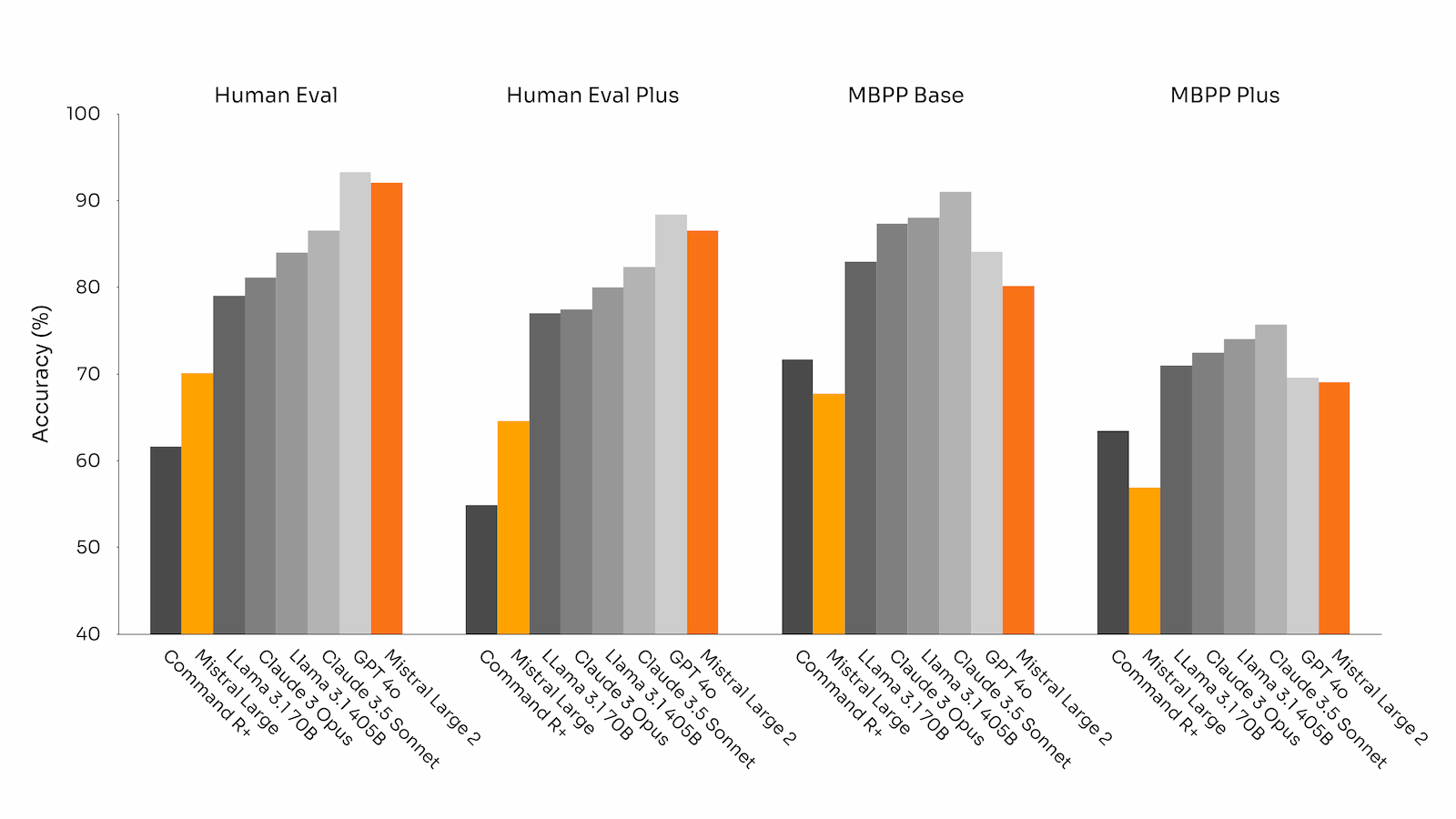

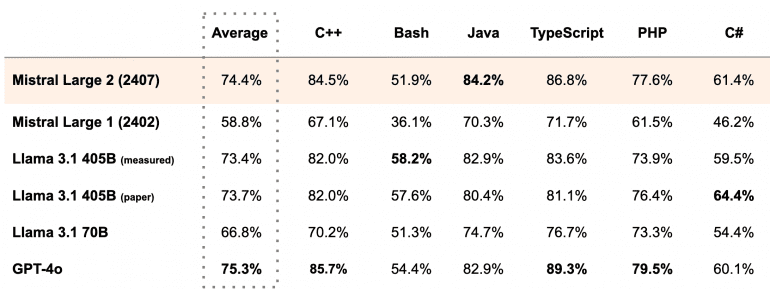

Mistral Large 2 sets a new frontier in performance and cost efficiency. The model reaches 84.0% accuracy on the MMLU benchmark, surpassing Mistral's previous models. On code generation benchmarks, it performs on par with leading models such as GPT-4o, Claude 3 Opus, and Llama 3 405B.

Enhanced Capabilities

- Reasoning and Accuracy: The model has been fine-tuned to reduce hallucinations and produce more reliable, accurate outputs. It is also trained to recognize when it lacks sufficient information to answer confidently.

- Instruction Following and Alignment: Significant improvements in instruction following make Mistral Large 2 more effective at handling long, multi-turn conversations.

Implementation and Availability

Mistral Large 2 is available on la Plateforme under the name mistral-large-2407, and the weights for the instruct model are hosted on Hugging Face. The model is also accessible through leading cloud service providers, including Google Cloud Platform, Azure AI Studio, Amazon Bedrock, and IBM watsonx.ai.
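As an illustration of how the hosted model might be called by name, the sketch below builds a chat completion request using only the Python standard library. The endpoint URL, the `MISTRAL_API_KEY` environment variable, and the helper names are assumptions that do not come from the announcement; check the current API documentation before relying on them.

```python
import json
import urllib.request

# Assumed chat completions endpoint for la Plateforme (not stated in the article).
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL = "mistral-large-2407"  # model name given in the article


def build_chat_request(prompt: str, api_key: str, model: str = MODEL) -> urllib.request.Request:
    """Build (but do not send) a chat completion request with a single user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's text (needs network access and a valid key)."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (MISTRAL_API_KEY is an assumed environment variable name):
# import os
# request = build_chat_request("Write a Bash one-liner that counts lines in a file.",
#                              os.environ["MISTRAL_API_KEY"])
# print(send(request))
```

Keeping request construction separate from sending makes the payload easy to inspect or test without network access.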

Licensing

- Research License: Allows for usage and modification for research and non-commercial purposes.

- Commercial License: Required for self-deployment in commercial applications, available upon contacting Mistral AI.

Conclusion

Mistral Large 2 sets a new standard among open-weight models for code generation, with strong capabilities in code generation, reasoning, and multilingual support. Its 123-billion-parameter architecture, sized for single-node inference, and its benchmark results make it a practical foundation for building AI applications.