OpenAI introduced over ten products and features for developers at DevDay 2023. Here’s a rundown of the new models and API updates:

- GPT-4 Turbo, trained on data up to April 2023, responds faster than the original GPT-4. Its 128K-token context window holds the equivalent of more than 300 pages of text in a single prompt;

- An updated GPT-3.5 Turbo, a smaller and cheaper model than GPT-4 Turbo, now supports a 16K-token context window by default;

- GPTs are customizable versions of ChatGPT tailored to specific tasks that can be shared with other users. Building a GPT requires no coding. OpenAI plans to launch the GPT Store by the end of 2023, where creators can publish their GPTs and earn money based on how many people use them;

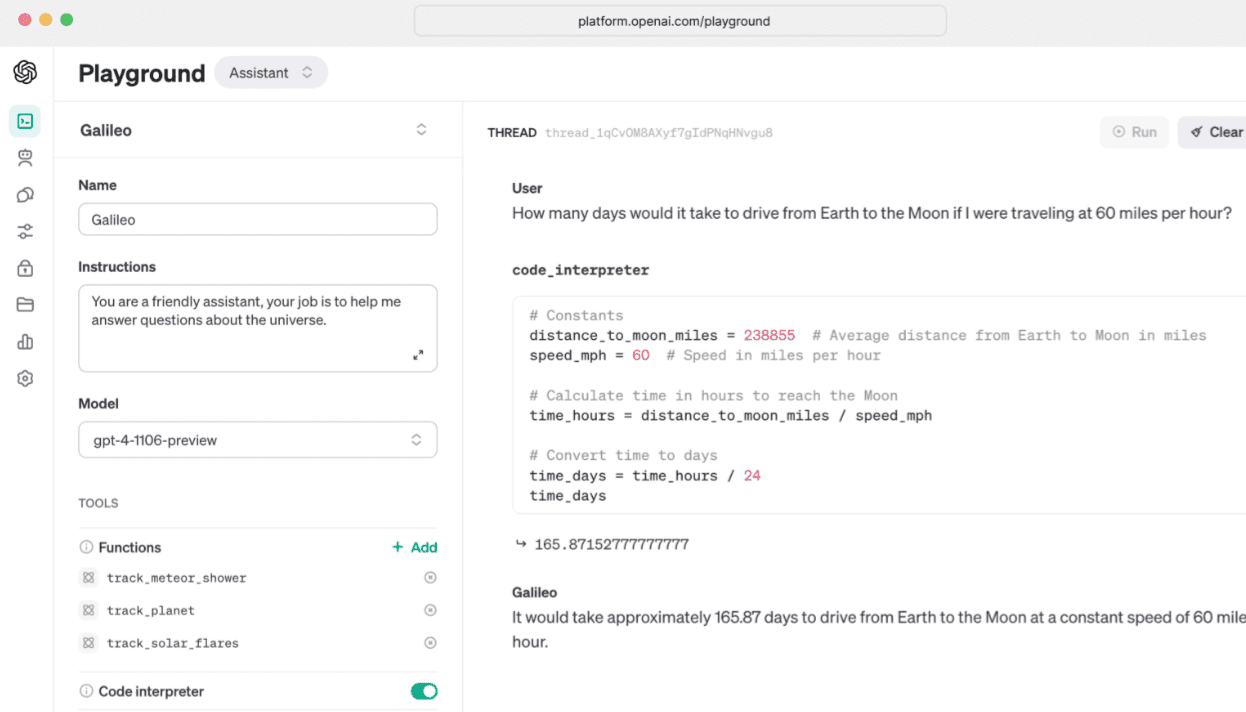
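The “300 pages” figure can be sanity-checked with simple arithmetic. This sketch assumes OpenAI’s rough rule of thumb that one token is about 0.75 English words, and it also assumes about 300 words per printed page. Both ratios vary with language and layout, so treat the result as an order-of-magnitude estimate.

```python
# Rough page estimate for a context window.
# Assumptions (not exact): ~0.75 English words per token,
# ~300 words per printed page.
WORDS_PER_TOKEN = 0.75
WORDS_PER_PAGE = 300


def approx_pages(context_tokens: int) -> float:
    """Convert a token budget into an approximate page count."""
    words = context_tokens * WORDS_PER_TOKEN
    return words / WORDS_PER_PAGE


for name, window in [("GPT-4 Turbo", 128_000), ("GPT-3.5 Turbo (16K)", 16_000)]:
    print(f"{name}: ~{approx_pages(window):.0f} pages")
```

Under these assumptions 128K tokens come out at roughly 320 pages and 16K tokens at roughly 40, consistent with the “more than 300 pages” figure.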

- Function calling lets developers describe their app’s functions or external APIs to the model and get back a JSON object with the arguments needed to call them. It now supports multiple function calls in a single request (e.g., “open the car window and turn off the air conditioner”) and returns the correct function parameters more accurately;

- GPT-4 Turbo follows instructions more precisely, for example when asked to respond in a specific format (e.g., “always respond in XML”). It also supports a new JSON mode, which ensures that the model responds with valid JSON;

- The new seed parameter makes outputs reproducible most of the time, which helps when debugging and when writing unit tests that send identical queries;

- GPT-4 Turbo and GPT-3.5 Turbo can return the log probabilities of the most likely output tokens, which helps developers build features such as autocomplete in search;

- The Assistants API helps developers build agent-like AI assistants with built-in tools: Code Interpreter (writes and runs Python code in a sandbox), Retrieval (searches developer-provided data), and function calling;

- GPT-4 Turbo with vision accepts images as input through the API, enabling caption generation, detailed analysis of real-world scenes, and reading documents that contain figures. OpenAI’s partner Be My Eyes already uses it to help blind and low-vision people with everyday tasks such as navigation;

- DALL-E 3 is now callable through the API;

- The API now includes text-to-speech. Developers can choose between two models, one optimized for real-time use and the other for audio quality, and both offer six preset voices.
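To illustrate parallel function calling, the sketch below dispatches several tool calls returned in one Chat Completions response. The response is a hand-written sample shaped like the API’s `tool_calls` field, where each call carries an `id`, a function `name`, and JSON-encoded `arguments`. The car functions `open_car_window` and `turn_off_air_conditioner` are hypothetical stand-ins for application code, and no network call is made.

```python
import json


# Hypothetical application functions the model is allowed to call.
def open_car_window(side: str) -> str:
    return f"{side} window opened"


def turn_off_air_conditioner() -> str:
    return "air conditioner off"


TOOLS = {
    "open_car_window": open_car_window,
    "turn_off_air_conditioner": turn_off_air_conditioner,
}

# Hand-written sample of an assistant message with two parallel
# tool calls (illustrative only, not real model output).
sample_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "open_car_window", "arguments": '{"side": "driver"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "turn_off_air_conditioner", "arguments": "{}"}},
    ],
}


def run_tool_calls(message: dict) -> list:
    """Execute every requested call and build one 'tool' message per call."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = TOOLS[call["function"]["name"]]
        kwargs = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # links each result to its request
            "content": fn(**kwargs),
        })
    return results


for msg in run_tool_calls(sample_message):
    print(msg)
```

The resulting `tool` messages would be appended to the conversation and sent back so the model can compose its final answer.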
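JSON mode is switched on per request with `response_format={"type": "json_object"}`. The sketch below only builds a request body and checks a reply locally. It assumes `gpt-4-1106-preview`, the GPT-4 Turbo preview name at launch, and it encodes the documented rule that the word “JSON” must appear somewhere in the messages, since the API rejects JSON-mode requests otherwise. `build_json_mode_request` and `parse_reply` are hypothetical helper names.

```python
import json


def build_json_mode_request(system_prompt: str, user_prompt: str) -> dict:
    """Chat Completions body with JSON mode switched on."""
    messages = [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]
    # The API rejects JSON-mode requests whose messages never mention JSON.
    if not any("json" in m["content"].lower() for m in messages):
        raise ValueError('JSON mode needs the word "JSON" in the messages')
    return {
        "model": "gpt-4-1106-preview",  # assumed: GPT-4 Turbo preview name at launch
        "response_format": {"type": "json_object"},
        "messages": messages,
    }


def parse_reply(content: str, required_keys: set) -> dict:
    """JSON mode guarantees valid JSON syntax, not a particular schema,
    so the caller still checks for the keys it needs."""
    data = json.loads(content)
    missing = required_keys - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


request = build_json_mode_request(
    "Reply in JSON with the keys city and country.",
    "Where is the Eiffel Tower?",
)
# Hand-written sample reply, not real model output.
print(parse_reply('{"city": "Paris", "country": "France"}', {"city", "country"}))
```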
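Reproducible outputs depend on sending the same `seed` with otherwise identical parameters. OpenAI describes the result as consistent most of the time rather than strictly deterministic, and each response carries a `system_fingerprint` that changes when the backend configuration changes. The sketch builds a seeded request and compares two responses in a unit-test style. The sample responses, fingerprints, and the `same_output` helper are hypothetical.

```python
from typing import Optional


def build_seeded_request(prompt: str, seed: int = 12345) -> dict:
    """Chat Completions body; reuse the same seed and parameters on every call."""
    return {
        "model": "gpt-4-1106-preview",  # assumed launch-era model name
        "seed": seed,
        "temperature": 0.7,  # any value works, but keep it identical across calls
        "messages": [{"role": "user", "content": prompt}],
    }


def same_output(resp_a: dict, resp_b: dict) -> Optional[bool]:
    """Compare two completions; return None when their backends differ."""
    if resp_a["system_fingerprint"] != resp_b["system_fingerprint"]:
        return None  # backend changed, so outputs may legitimately differ
    text_a = resp_a["choices"][0]["message"]["content"]
    text_b = resp_b["choices"][0]["message"]["content"]
    return text_a == text_b


# Hand-written sample responses (not real API output).
run_1 = {"system_fingerprint": "fp_sample_a", "choices": [{"message": {"content": "42"}}]}
run_2 = {"system_fingerprint": "fp_sample_a", "choices": [{"message": {"content": "42"}}]}
run_3 = {"system_fingerprint": "fp_sample_b", "choices": [{"message": {"content": "41"}}]}

print(same_output(run_1, run_2))  # True
print(same_output(run_1, run_3))  # None
```

Returning `None` rather than `False` lets a test suite skip the comparison when the fingerprint changed instead of reporting a spurious failure.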
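Log probabilities turn into ordinary probabilities with $p = e^{\log p}$, which is enough to rank autocomplete candidates. The sketch assumes the response shape OpenAI documents for Chat Completions requested with `logprobs=True` and `top_logprobs`, namely `logprobs.content[i].top_logprobs`, where each entry has a `token` and a `logprob`. The numbers in `sample_logprobs` are invented for illustration, and `suggestions` is a hypothetical helper.

```python
import math

# Invented sample shaped like choices[0]["logprobs"] from a Chat Completions
# response requested with logprobs=True, top_logprobs=3 (not real output).
sample_logprobs = {
    "content": [
        {
            "token": " weather",
            "logprob": -0.4,
            "top_logprobs": [
                {"token": " weather", "logprob": -0.4},
                {"token": " news", "logprob": -1.6},
                {"token": " time", "logprob": -2.3},
            ],
        }
    ]
}


def suggestions(logprobs: dict, prefix: str, min_prob: float = 0.05) -> list:
    """Turn top_logprobs for the first generated token into ranked completions."""
    first = logprobs["content"][0]
    ranked = []
    for cand in first["top_logprobs"]:
        p = math.exp(cand["logprob"])  # log probability -> probability
        if p >= min_prob:
            ranked.append((prefix + cand["token"], round(p, 3)))
    return sorted(ranked, key=lambda item: item[1], reverse=True)


for text, p in suggestions(sample_logprobs, "what is the"):
    print(f"{p:.3f}  {text}")
```

Raising `min_prob` trims unlikely completions, which keeps a search box from showing low-confidence suggestions.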
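At launch, an assistant was created once with its tools and files, and conversations then ran in threads. The sketch builds a creation request body in the shape of the November 2023 beta (v1), where the tools were typed `code_interpreter`, `retrieval`, and `function`, uploaded files were attached through `file_ids`, and calls required an `OpenAI-Beta: assistants=v1` header. Later API versions renamed some of these fields, so treat this as a launch-era illustration. The file ID, the assistant name, and the `get_order_status` function are placeholders, and no request is sent.

```python
def build_assistant_request(file_ids: list) -> dict:
    """Body for POST /v1/assistants in the launch-era (v1 beta) shape."""
    return {
        "model": "gpt-4-1106-preview",  # assumed launch-era model name
        "name": "Support helper",  # placeholder name
        "instructions": "Answer using the attached files; run Python for calculations.",
        "tools": [
            {"type": "code_interpreter"},  # sandboxed Python execution
            {"type": "retrieval"},         # search over developer-provided files
            {
                "type": "function",        # developer-defined function calling
                "function": {
                    "name": "get_order_status",  # hypothetical app function
                    "description": "Look up an order by its ID.",
                    "parameters": {
                        "type": "object",
                        "properties": {"order_id": {"type": "string"}},
                        "required": ["order_id"],
                    },
                },
            },
        ],
        "file_ids": file_ids,
    }


# The v1 beta required this opt-in header on Assistants endpoints.
HEADERS = {"OpenAI-Beta": "assistants=v1"}

body = build_assistant_request(["file-abc123"])  # placeholder file ID
print([tool["type"] for tool in body["tools"]])
```

An app would then create a thread, add the user’s messages to it, and start a run, during which the assistant decides which of these tools to call.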
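Images reach GPT-4 Turbo with vision as content parts inside an ordinary chat message, given either as a public URL or as a base64 data URL. The sketch assumes the launch model name `gpt-4-vision-preview` and only builds the request body. The image bytes, the `max_tokens` value, and the helper names are illustrative.

```python
import base64


def image_part_from_bytes(data: bytes, mime: str = "image/jpeg") -> dict:
    """Embed local image bytes as a base64 data URL content part."""
    encoded = base64.b64encode(data).decode("ascii")
    return {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}}


def build_vision_request(question: str, image_part: dict) -> dict:
    """Chat Completions body mixing a text part and an image part in one message."""
    return {
        "model": "gpt-4-vision-preview",  # assumed launch model name
        "max_tokens": 300,  # illustrative cap on the reply length
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    image_part,
                ],
            }
        ],
    }


fake_image = b"\xff\xd8\xff"  # stand-in bytes (a JPEG header), not a real photo
vision_request = build_vision_request(
    "Describe this scene for a blind user.", image_part_from_bytes(fake_image)
)
print(vision_request["messages"][0]["content"][1]["image_url"]["url"])
```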
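In the Images API, DALL-E 3 is selected with `model="dall-e-3"` on the image-generation endpoint. The sketch builds that request body and validates the options DALL-E 3 accepted at launch: one image per request, sizes `1024x1024`, `1792x1024`, or `1024x1792`, and `standard` or `hd` quality. `build_image_request` is a hypothetical helper, and no request is sent.

```python
DALLE3_SIZES = {"1024x1024", "1792x1024", "1024x1792"}
DALLE3_QUALITIES = {"standard", "hd"}


def build_image_request(prompt: str, size: str = "1024x1024", quality: str = "standard") -> dict:
    """Body for POST /v1/images/generations using DALL-E 3."""
    if size not in DALLE3_SIZES:
        raise ValueError(f"unsupported size for dall-e-3: {size}")
    if quality not in DALLE3_QUALITIES:
        raise ValueError(f"unsupported quality: {quality}")
    return {
        "model": "dall-e-3",
        "prompt": prompt,
        "n": 1,  # DALL-E 3 generates one image per request
        "size": size,
        "quality": quality,
    }


print(build_image_request("a watercolor lighthouse at dawn", size="1792x1024"))
```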
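The text-to-speech endpoint takes a model, the input text, and a voice, and responds with the audio file itself. At launch, the two models were `tts-1` (tuned for real-time use) and `tts-1-hd` (tuned for quality), and the six voices were `alloy`, `echo`, `fable`, `onyx`, `nova`, and `shimmer`. The sketch only builds and validates the request body, and `build_speech_request` is a hypothetical helper.

```python
TTS_MODELS = {"realtime": "tts-1", "quality": "tts-1-hd"}
TTS_VOICES = ("alloy", "echo", "fable", "onyx", "nova", "shimmer")


def build_speech_request(text: str, voice: str = "alloy", prefer: str = "realtime") -> dict:
    """Body for POST /v1/audio/speech; the response body is the audio file."""
    if voice not in TTS_VOICES:
        raise ValueError(f"unknown voice: {voice}")
    return {
        "model": TTS_MODELS[prefer],  # pick the latency- or quality-tuned model
        "input": text,
        "voice": voice,
        "response_format": "mp3",  # mp3 is the default output format
    }


print(build_speech_request("Your order has shipped.", voice="nova", prefer="quality"))
```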

All features and products, except for the GPT Store, are already available to users.