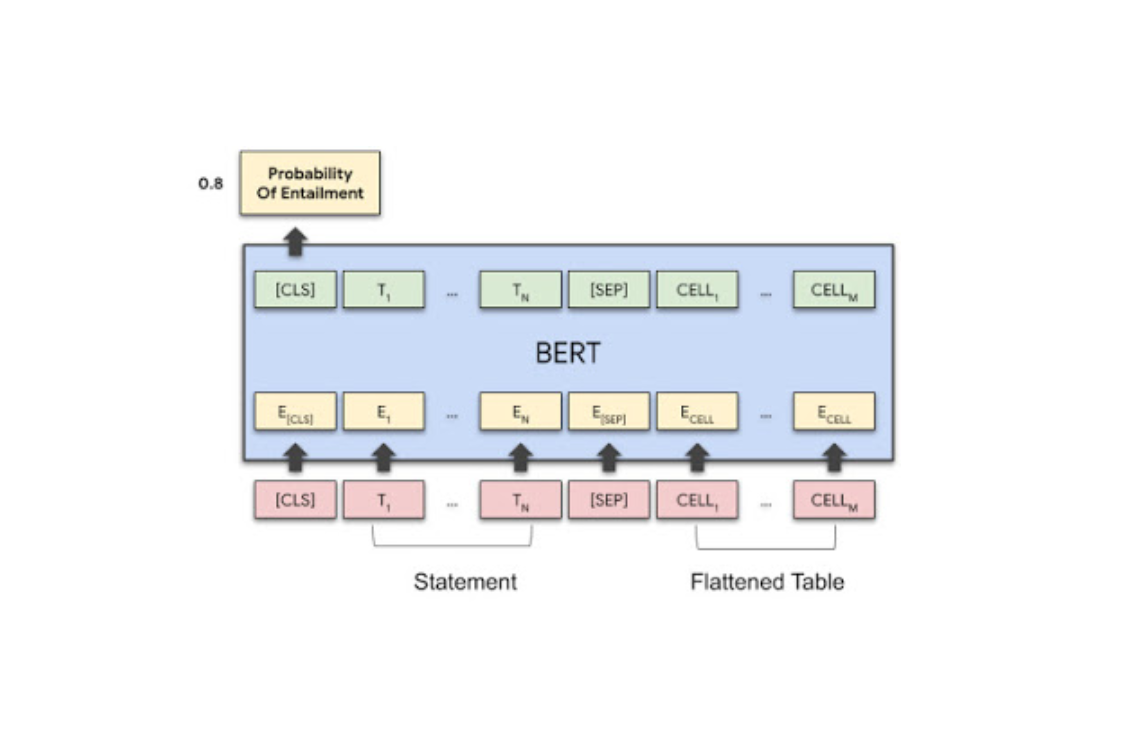

TAPAS is a neural network model for answering questions over tabular data, developed at Google AI. It extends BERT, a bidirectional Transformer model, with additional embeddings that encode the structure of the table. The researchers also introduced a new objective function, and experiments show that TAPAS outperforms previous state-of-the-art models for analyzing tabular data. The researchers have released model variants of different sizes in a GitHub repository.
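To make the idea of table-aware embeddings concrete, here is a minimal sketch. In TAPAS, each token's input vector adds embeddings for its column and row (among others) to BERT's usual token, position and segment embeddings. The sketch assumes a heavily simplified input. It uses whitespace tokenization instead of WordPiece, omits the [CLS]/[SEP] tokens, omits rank embeddings, and fills the embedding tables with random toy vectors. The function names `flatten_table` and `embed` are hypothetical and are not part of the TAPAS code.

```python
import random


def flatten_table(question, header, rows):
    """Flatten a question and a table into tokens plus TAPAS-style ids.

    Simplified for illustration: whitespace tokenization instead of
    WordPiece, no [CLS]/[SEP] tokens, and no rank embeddings.
    """
    tokens, segment_ids, column_ids, row_ids = [], [], [], []
    # Question tokens: segment 0, column 0, row 0.
    for tok in question.split():
        tokens.append(tok)
        segment_ids.append(0)
        column_ids.append(0)
        row_ids.append(0)
    # Table tokens: segment 1. The header is row 0 and data rows start at 1;
    # columns are numbered from 1 so that 0 can mean "not in the table".
    for row_idx, row in enumerate([header] + rows):
        for col_idx, cell in enumerate(row, start=1):
            for tok in str(cell).split():
                tokens.append(tok)
                segment_ids.append(1)
                column_ids.append(col_idx)
                row_ids.append(row_idx)
    return tokens, segment_ids, column_ids, row_ids


def embed(tokens, segment_ids, column_ids, row_ids, dim=4, seed=0):
    """Sum one vector per id type for every token (random toy tables)."""
    rng = random.Random(seed)

    def table(size):
        # A toy embedding table: `size` random vectors of length `dim`.
        return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(size)]

    vocab = {tok: i for i, tok in enumerate(sorted(set(tokens)))}
    word_emb = table(len(vocab))
    pos_emb = table(len(tokens))
    seg_emb = table(2)
    col_emb = table(max(column_ids) + 1)
    row_emb = table(max(row_ids) + 1)

    vectors = []
    for i, tok in enumerate(tokens):
        parts = (
            word_emb[vocab[tok]],
            pos_emb[i],
            seg_emb[segment_ids[i]],
            col_emb[column_ids[i]],
            row_emb[row_ids[i]],
        )
        # The Transformer input is the element-wise sum of all parts.
        vectors.append([sum(p[d] for p in parts) for d in range(dim)])
    return vectors


tokens, seg, col, row = flatten_table(
    "who scored most", ["Player", "Goals"], [["Ann", "12"], ["Bob", "7"]]
)
print(list(zip(tokens, col, row)))
vectors = embed(tokens, seg, col, row)
```

Every token of a cell shares that cell's row and column ids. As a result, the model can still tell which tokens belong to the same row or column after the table has been flattened into a single sequence.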

Why it is important

Natural language inference is the task of determining whether one piece of text (the premise) supports or refutes another (the hypothesis). While this problem is well studied for text, much less attention has been paid to verifying statements against structured data such as tables. Models that can do this could be used in question-answering systems and virtual assistants.

Model testing

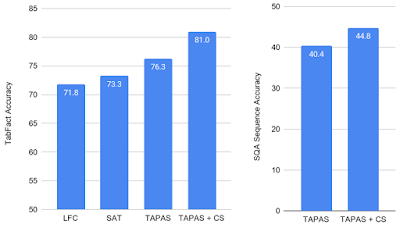

On the TabFact dataset, TAPAS closes about half of the gap between the previous best model's accuracy and human accuracy. The researchers also tested approaches for improving computational efficiency. With these approaches, TAPAS trained four times faster and required less memory while retaining 92% of its accuracy.
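The relative figures in the results can be read as simple ratios. The following is one plausible formalization, not one taken from the paper. It assumes that $A$ denotes accuracy on TabFact and $T$ denotes training time. The symbols are our own, and the second and third ratios assume that the 92% and "four times" figures are relative to the standard, unoptimized setup.

$$
\text{gap closed} = \frac{A_{\text{TAPAS}} - A_{\text{prev}}}{A_{\text{human}} - A_{\text{prev}}} \approx 0.5,
\qquad
\text{accuracy retained} = \frac{A_{\text{efficient}}}{A_{\text{full}}} \approx 0.92,
\qquad
\text{speedup} = \frac{T_{\text{full}}}{T_{\text{efficient}}} \approx 4
$$

Here $A_{\text{prev}}$ is the accuracy of the previous best model and $A_{\text{human}}$ is human accuracy. The subscripts "full" and "efficient" refer to the standard setup and the faster, lower-memory setup.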