Google has developed a neural network that separates the object from the background in the image with high accuracy. The model is used in portrait shooting mode on Pixel 6.

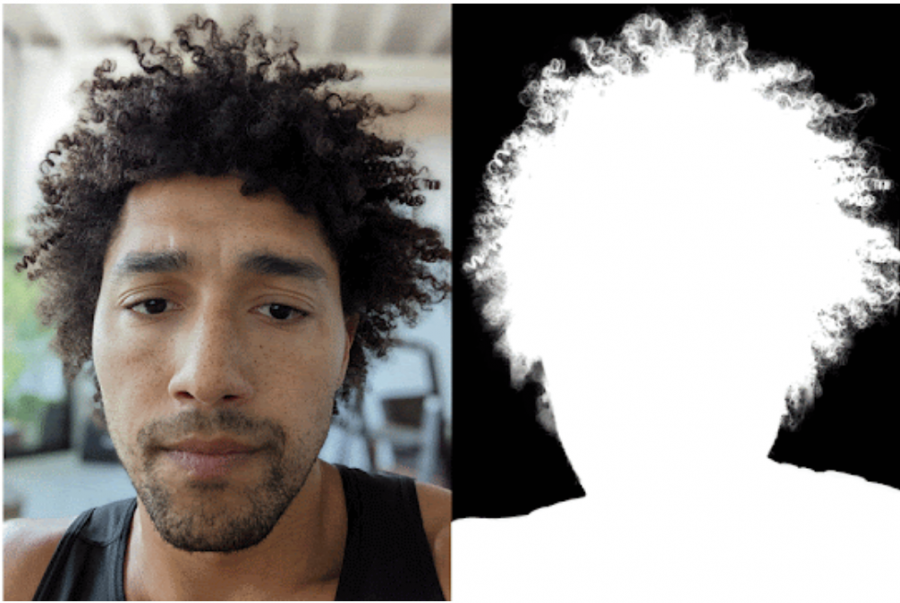

In classical image segmentation, each pixel belongs either to the foreground or to the background. This type of segmentation does not work well for small details, such as hair. In Google’s approach, the intensity of each pixel is a linear combination of foreground and background, which allows you to accurately determine the boundaries of the object and, consequently, improve the quality of selfies in portrait mode.

The developers have trained a convolutional neural network consisting of a sequence of encoder-decoder blocks. First, using MobileNetV3 and a shallow (i.e. consisting of a small number of layers) decoder, an approximate estimate of the linear combination is formed. Then a shallow encoder-decoder and a series of residual blocks refine the linear combination.

The model was trained on a Light Stage dataset consisting of high-quality pairs of background/background+person images.

The neural network works in real time on Pixel 6 using Tensorflow Lite.