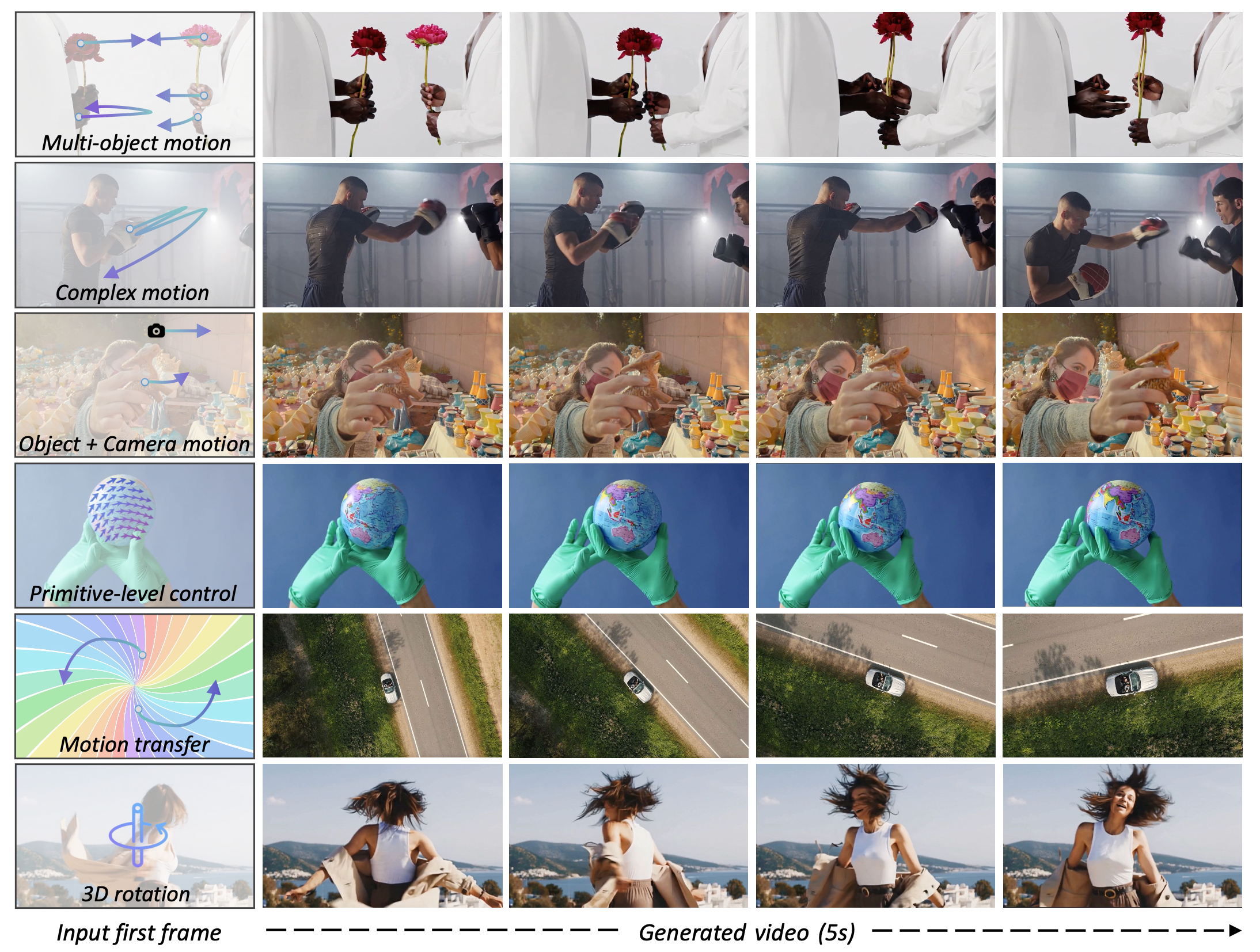

A team of researchers from Tongyi Lab (Alibaba Group), Tsinghua University, and the University of Hong Kong has presented Wan-Move, a new approach to precise motion control in generative video models. Unlike existing methods that require additional motion encoders, Wan-Move directly edits condition features, embedding motion information without modifying the base model's architecture. The method generates 5-second 480p videos with motion control accuracy comparable to the commercial Motion Brush tool in Kling 1.5 Pro. The Wan-Move-14B-480P model is available on Hugging Face, GitHub, and ModelScope under the open Apache 2.0 license.

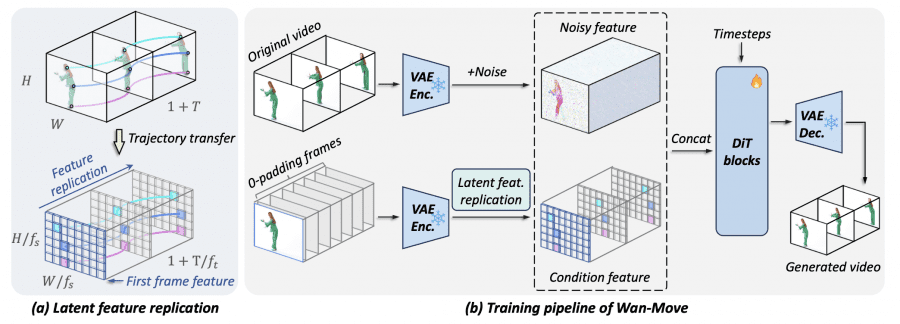

How Wan-Move Works

Wan-Move builds on top of the existing image-to-video model Wan-I2V-14B without adding auxiliary modules. The core idea is to embed motion information by directly editing the condition features of the first frame. These updated features become a latent guidance signal that contains information about both the visual content of the first frame (objects, textures, colors) and how these objects should move in subsequent frames.

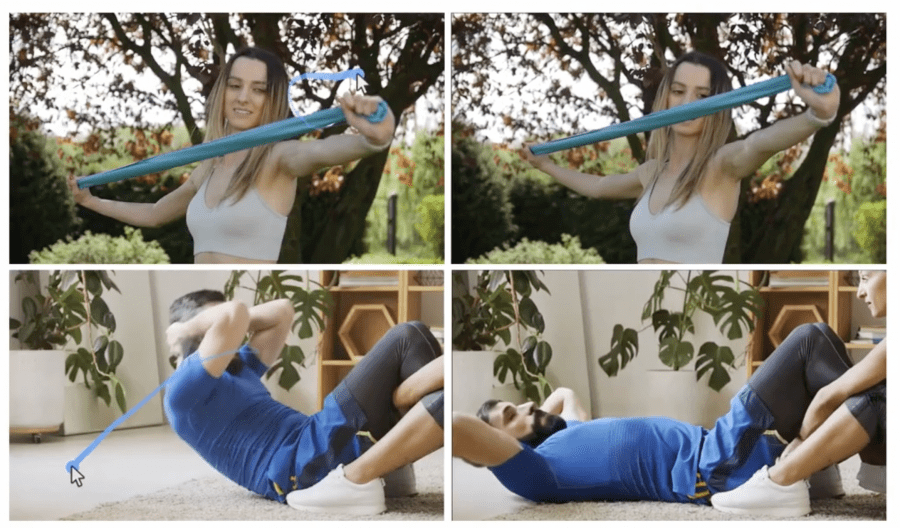

Motion is represented by point trajectories. Unlike previous work, the researchers map each trajectory from pixel space into latent coordinates. For each trajectory, the model takes the latent feature at the trajectory's starting point in the first frame and copies it to the corresponding position in every subsequent frame along that trajectory. Because each copied feature carries rich local context, the resulting local motion looks more natural.
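The trajectory-to-latent mapping and feature copying described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' code. It assumes a VAE with 8x spatial and 4x temporal compression (so an 832×480, 81-frame clip maps to a 104×60, 21-frame latent grid). It also assumes that latent frame k takes the trajectory position at pixel frame 4k, and that later trajectories simply overwrite earlier ones when they land on the same latent cell. The function names are hypothetical.

```python
import numpy as np

# Assumed compression factors of the video VAE (hypothetical for this sketch).
SPATIAL_STRIDE = 8
TEMPORAL_STRIDE = 4


def to_latent_tracks(tracks_px, visible_px, t_lat, h_lat, w_lat):
    """Map pixel tracks (N, T_px, 2) in (x, y) order to integer latent coords (N, t_lat, 2).

    Assumption: latent frame k is represented by pixel frame k * TEMPORAL_STRIDE.
    Returns the latent coordinates and a (N, t_lat) mask of usable points.
    """
    frame_idx = np.minimum(np.arange(t_lat) * TEMPORAL_STRIDE, tracks_px.shape[1] - 1)
    sampled = tracks_px[:, frame_idx]          # (N, t_lat, 2) pixel positions
    vis = visible_px[:, frame_idx]             # (N, t_lat) visibility flags
    lat = np.floor(sampled / SPATIAL_STRIDE).astype(int)
    in_bounds = (
        (lat[..., 0] >= 0) & (lat[..., 0] < w_lat)
        & (lat[..., 1] >= 0) & (lat[..., 1] < h_lat)
    )
    return lat, vis & in_bounds


def replicate_first_frame_features(cond, lat_tracks, lat_vis):
    """Copy the first-frame feature at each track's start to its later latent positions.

    cond: (C, T, H, W) condition latent whose frame 0 holds the encoded first frame.
    """
    out = cond.copy()
    for track, vis in zip(lat_tracks, lat_vis):
        if not vis[0]:
            continue  # start point occluded or off-screen: nothing to propagate
        x0, y0 = track[0]
        feat = cond[:, 0, y0, x0]  # (C,) feature vector at the starting point
        for k in range(1, cond.shape[1]):
            if vis[k]:
                x, y = track[k]
                out[:, k, y, x] = feat  # later tracks overwrite earlier ones on collisions
    return out
```

For example, a single point moving right at 2 px per frame from (12, 12) lands in latent cells (1, 1), (2, 1), and (3, 1) for latent frames 0 to 2, so the feature at cell (1, 1) of frame 0 is copied into the other two cells. Only the condition tensor changes; the network itself is untouched, which matches the "no auxiliary modules" design.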

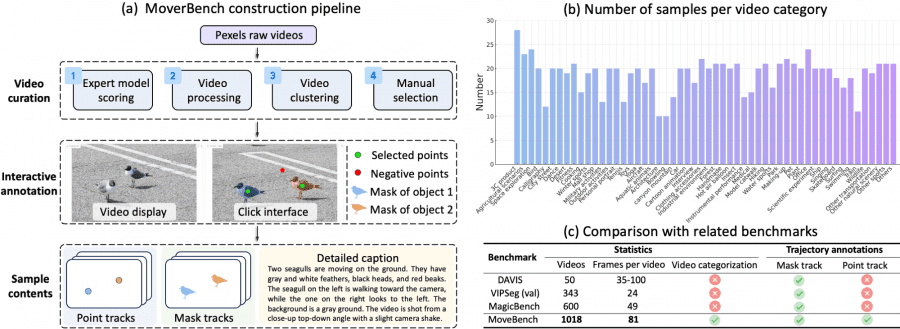

MoveBench: A New Benchmark

The researchers also created MoveBench, a new benchmark of 1,018 videos (832×480 resolution, 5 seconds long) with detailed motion annotations. It is designed to test how accurately models control object motion over longer time spans and across diverse scenarios.

Videos go through a four-stage preparation pipeline: quality assessment with an expert model; cropping to 480p and sampling 81 frames; clustering into 54 content categories; and manual selection of 15 to 25 representative clips per category.
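As a sanity check on the numbers, 54 categories with 15 to 25 clips each gives between 810 and 1,350 videos, which is consistent with the final count of 1,018. Only the second stage is mechanical enough to sketch; the expert quality model, the clustering method, and the manual selection are not reproduced here. The sketch below assumes a centered crop to the 832:480 aspect ratio and evenly spaced frame sampling, both of which are our assumptions rather than documented details. The helper names are hypothetical.

```python
import numpy as np

TARGET_W, TARGET_H, TARGET_FRAMES = 832, 480, 81


def center_crop_box(width, height, target_w=TARGET_W, target_h=TARGET_H):
    """Largest centered box with the target aspect ratio, as (left, top, right, bottom)."""
    if width * target_h > height * target_w:   # source is wider: trim left and right
        crop_w, crop_h = height * target_w // target_h, height
    else:                                      # source is taller: trim top and bottom
        crop_w, crop_h = width, width * target_h // target_w
    left, top = (width - crop_w) // 2, (height - crop_h) // 2
    return left, top, left + crop_w, top + crop_h


def sample_frame_indices(num_frames, k=TARGET_FRAMES):
    """Evenly spaced frame indices from the first to the last frame of the clip."""
    if num_frames < k:
        raise ValueError(f"clip has {num_frames} frames, need at least {k}")
    return np.linspace(0, num_frames - 1, k).round().astype(int)
```

The cropped region would then be resized to 832×480 with any image library. For a 1920×1080 source, the box trims 24 px from each side before resizing.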

Each video comes with two types of annotations, point trajectories and segmentation masks, so methods using either form of control can be evaluated. Annotation was done through an interactive interface: annotators marked the target region in the first frame, and SAM automatically generated a segmentation mask. Every video contains at least one annotated trajectory, and 192 videos include simultaneous motion of multiple objects.
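One way to hold both annotation types in memory is a small record per clip that links each trajectory to the first-frame mask of the object it belongs to. The schema below is hypothetical and is not the released MoveBench file format. It shows how the "at least one trajectory" rule and the multi-object count described above could be checked.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class MoveBenchSample:
    """Hypothetical in-memory record for one annotated clip (not the released format)."""

    video_id: str
    tracks: np.ndarray        # (N, T, 2) float pixel coordinates, (x, y) order
    visibility: np.ndarray    # (N, T) bool, False where the point is occluded or off-screen
    masks: np.ndarray         # (M, H, W) bool first-frame masks, one per annotated object
    track_object: np.ndarray  # (N,) int, index of the mask each track belongs to

    def __post_init__(self):
        n, t, _ = self.tracks.shape
        if n < 1:
            raise ValueError("each video needs at least one trajectory")
        if self.visibility.shape != (n, t) or self.track_object.shape != (n,):
            raise ValueError("visibility and track_object must align with tracks")
        if self.track_object.max() >= len(self.masks):
            raise ValueError("a track refers to a missing mask")

    @property
    def num_objects(self) -> int:
        return len(np.unique(self.track_object))

    @property
    def is_multi_object(self) -> bool:
        return self.num_objects > 1


def count_multi_object(samples):
    """Number of clips whose trajectories cover more than one object."""
    return sum(s.is_multi_object for s in samples)
```

Applied to the full benchmark, `count_multi_object` would be expected to return 192 under this schema.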

Experimental Results

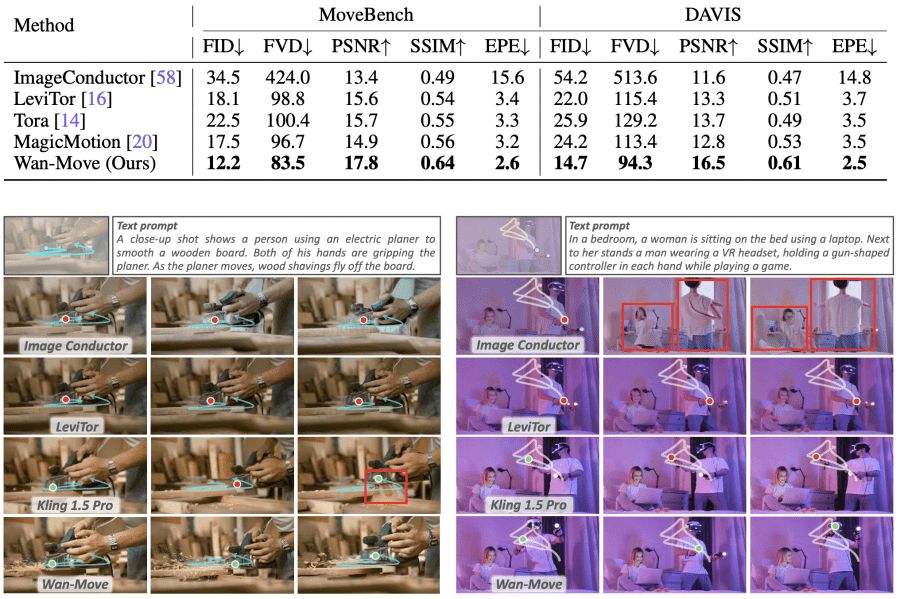

Wan-Move achieved the best results among the academic methods evaluated on both MoveBench and DAVIS, significantly outperforming competing methods in multi-object scenarios.

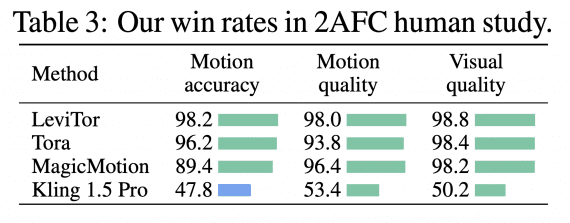

To compare against a commercial system, the researchers ran a 2AFC (two-alternative forced-choice) user study pitting Wan-Move against Kling 1.5 Pro. Participants judged motion accuracy, motion quality, and visual quality. Wan-Move was competitive: it won 52.2% of comparisons on motion accuracy and 53.4% on motion quality, and was on par in visual quality (50.2%). Against academic methods, Wan-Move's win rate exceeded 96% in every category.
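In a 2AFC study, a win rate is simply the fraction of trials in which raters picked one system over the other, so 50% means parity. Whether a 52.2% rate differs from parity depends on how many judgments were collected, and that number is not given here. The sketch below computes a win rate and a Wilson score interval. The interval is our addition for interpretation and is not part of the reported study.

```python
import math


def win_rate(votes):
    """Fraction of 2AFC trials in which raters preferred system A (votes are booleans)."""
    votes = list(votes)
    if not votes:
        raise ValueError("need at least one vote")
    return sum(votes) / len(votes)


def wilson_interval(wins, n, z=1.96):
    """Approximate 95% Wilson score interval for a binomial proportion wins / n."""
    p = wins / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half
```

With a purely hypothetical 1,000 judgments, a 52.2% win rate yields an interval of roughly 49% to 55%, which still includes 50%. This is why "competitive" is a better summary than "better" for the comparison with Kling 1.5 Pro, whereas win rates above 96% against academic methods leave no such ambiguity.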

Ablation Studies

Injecting the motion condition through ControlNet or through direct concatenation yielded comparable quality (FID 12.2 vs. 12.4), but ControlNet added 225 seconds to inference time, whereas direct concatenation, the approach Wan-Move uses, added only 3 seconds.
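Direct concatenation is cheap because it runs no extra network branch. The motion-edited condition latent is stacked onto the noisy video latent along the channel axis, and the model's existing input layer consumes it. The sketch below assumes a channel-first (C, T, H, W) layout and uses a per-position linear map as a hypothetical stand-in for the DiT's patch embedding. Any mask channels the real base model may also take are omitted.

```python
import numpy as np


def concat_condition(noisy, cond):
    """Stack along channels: (C, T, H, W) + (C_c, T, H, W) -> (C + C_c, T, H, W)."""
    if noisy.shape[1:] != cond.shape[1:]:
        raise ValueError("noisy and condition latents must share T, H, W")
    return np.concatenate([noisy, cond], axis=0)


def input_projection(x, weight):
    """Per-position linear map over channels (stand-in for patch embedding).

    x: (C_in, T, H, W), weight: (D, C_in) -> (D, T, H, W)
    """
    return np.einsum("dc,cthw->dthw", weight, x)
```

A ControlNet, by contrast, runs a trainable copy of part of the backbone at every denoising step, which is consistent with the much larger inference overhead reported above.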

Performance peaks when the model is trained with 200 trajectories. At inference, the model generalizes well beyond this: it reaches its lowest EPE (end-point error) of 1.1 with 1,024 trajectories, even though it saw at most 200 during training.
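EPE measures how far the generated motion strays from the requested trajectories. Points are tracked in the generated video and compared with the target positions, frame by frame. A minimal version is the mean Euclidean distance over visible points. The sketch assumes both trajectory sets are already available as (N, T, 2) pixel arrays; the point tracker used to obtain the predicted tracks is not specified here.

```python
import numpy as np


def end_point_error(pred, target, visible=None):
    """Mean L2 distance in pixels between (N, T, 2) trajectories, over visible points."""
    err = np.linalg.norm(pred - target, axis=-1)  # (N, T) per-point distances
    if visible is None:
        return float(err.mean())
    if not visible.any():
        raise ValueError("no visible points to evaluate")
    return float(err[visible].mean())
```

Lower is better: an EPE of 1.1 means generated points land, on average, about one pixel from their targets.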

Conclusions

Wan-Move is a simple, scalable framework for precise motion control in video generation that requires no architectural changes to the base model. Extensive experiments show that it generates high-quality videos with motion controllability comparable to commercial tools. The project is released under the Apache 2.0 license, which permits both commercial and non-commercial use of the model.