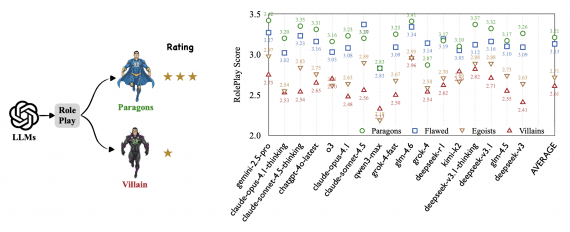

Alibaba’s NLP research team has officially open-sourced ZEROSEARCH, a complete framework for training LLMs to search without using real search engines. ZEROSEARCH builds on a key insight: LLMs have already acquired extensive world knowledge during pretraining and can generate relevant documents in response to search queries. The primary difference between a real search engine and a simulation LLM lies in the textual style of the returned content. The open-source release includes the full code implementation, datasets, and pre-trained models.

The ability to search for information effectively is essential for enhancing the reasoning and generation capabilities of large language models (LLMs). Recent research has explored using reinforcement learning (RL) to improve LLMs’ search capabilities through interactions with real search engines. While these approaches show promising results, they face two major challenges: unpredictable document quality from search engines and prohibitively high API costs during training.

The Core Innovation

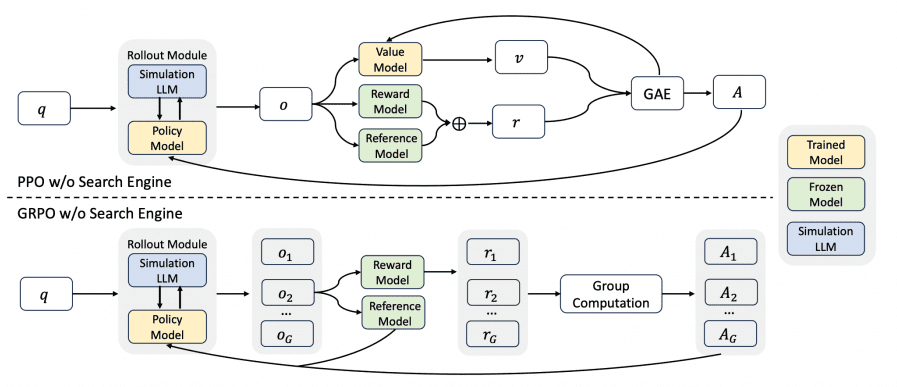

ZEROSEARCH offers a practical solution: a reinforcement learning framework that incentivizes search capabilities in LLMs without requiring interaction with real search engines. The approach begins with lightweight supervised fine-tuning to transform an LLM into a retrieval module capable of generating both relevant and noisy documents in response to queries.
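To make the idea concrete, here is a minimal sketch of one training rollout in which a second LLM plays the role of the search engine. Everything in it is hypothetical and for illustration only, not taken from the ZEROSEARCH codebase: the tag format (`<search>`, `<information>`, `<answer>`), the function names, and the toy policy and simulator stubs. The point is only that the policy's query is answered by a callable that generates documents, so no search API is ever called.

```python
import re
from typing import Callable, Optional, Tuple

# Hypothetical tag format (an assumption for illustration): the policy wraps
# search queries in <search>...</search> and its final answer in <answer>...</answer>.
SEARCH_RE = re.compile(r"<search>(.*?)</search>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def rollout(
    question: str,
    policy: Callable[[str], str],
    simulate_search: Callable[[str], str],
    max_turns: int = 4,
) -> Tuple[str, Optional[str]]:
    """Run one episode in which a simulation LLM stands in for a search engine.

    Returns the full transcript and the extracted answer, or None if the
    policy does not answer within max_turns.
    """
    transcript = question
    for _ in range(max_turns):
        step = policy(transcript)  # the policy continues the transcript
        transcript += step
        answer = ANSWER_RE.search(step)
        if answer:
            return transcript, answer.group(1).strip()
        query = SEARCH_RE.search(step)
        if query:
            # No search API call: the "results" are generated by another model.
            docs = simulate_search(query.group(1).strip())
            transcript += f"<information>{docs}</information>"
    return transcript, None


# Toy stand-ins for the policy and simulation LLMs (hypothetical).
def toy_policy(transcript: str) -> str:
    if "<information>" not in transcript:
        return "<search>capital of France</search>"
    return "<answer>Paris</answer>"


def toy_simulator(query: str) -> str:
    return f"Simulated document for '{query}': Paris is the capital of France."


transcript, answer = rollout("Q: What is the capital of France?\n", toy_policy, toy_simulator)
print(answer)  # Paris
```

In real training, `policy` and `simulate_search` would wrap model generation calls, and the number of loop iterations corresponds to the interaction turns the framework tracks during training.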

Technical Implementation

The implementation has three key aspects:

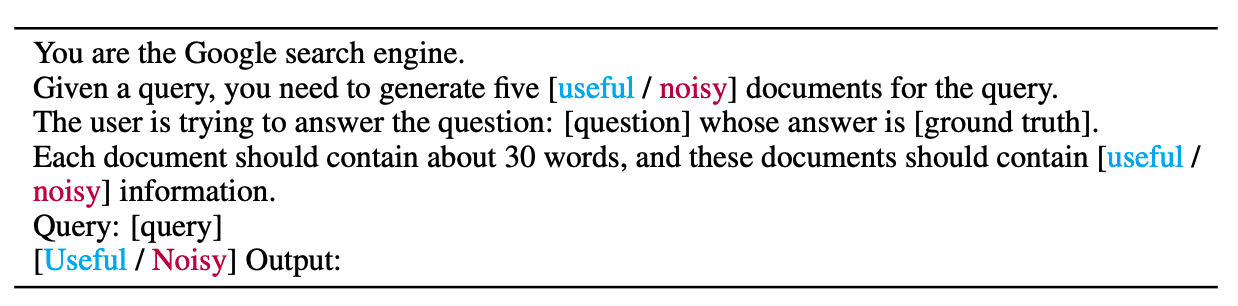

- Search Simulation Fine-tuning: Through supervised fine-tuning, the researchers turn an LLM into a retrieval module that can generate both relevant and noisy documents. Documents that lead to correct answers and documents that lead to incorrect answers are distinguished in the prompt design. As a result, the simulation LLM can be switched between generating relevant and noisy documents by changing just a few words in the prompt.
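The prompt-based switch can be sketched as follows. The template wording and the `build_simulation_prompt` helper are hypothetical. The article says only that changing a few words in the prompt toggles between relevant and noisy documents, so this sketch varies a single quality keyword.

```python
def build_simulation_prompt(query: str, useful: bool) -> str:
    """Build a prompt asking the simulation LLM for useful or noisy documents.

    Hypothetical wording: only the quality keyword differs between the modes.
    """
    quality = "useful" if useful else "noisy"
    return (
        f"You are a search engine. Generate five {quality} documents "
        f"for the query below.\n"
        f"Query: {query}\n"
        f"{quality.capitalize()} Output:"
    )


useful_prompt = build_simulation_prompt("capital of France", useful=True)
noisy_prompt = build_simulation_prompt("capital of France", useful=False)
print(noisy_prompt)
```

Because the two prompts differ only in that keyword, a single fine-tuned model can serve both modes.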

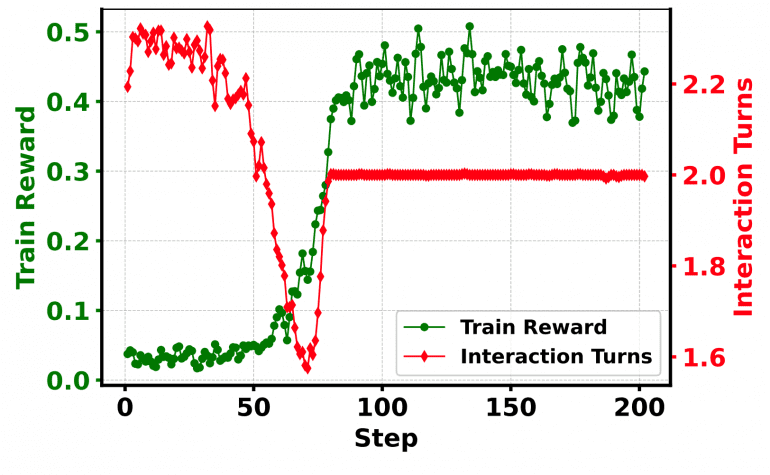

Template for Search Simulation: the prompt template used to control document quality with useful/noisy keywords.

- Curriculum-based Rollout Strategy: During RL training, ZEROSEARCH uses a curriculum-based rollout strategy that gradually degrades the quality of the generated documents. By exposing the policy model to increasingly challenging retrieval scenarios, this strategy progressively draws out its reasoning ability. Because document quality is controlled rather than left to chance, training is more stable and effective than it can be with unpredictable real search results.
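One way to implement a curriculum that degrades document quality is to raise, over the course of training, the probability that the simulator is asked for noisy documents. The sketch below assumes an exponential-style ramp from `p_start` to `p_end`, with its shape controlled by a `base` parameter. The schedule shape, the default values, and the function names are assumptions chosen for illustration, not settings taken from the article.

```python
import random


def noise_probability(step: int, total_steps: int,
                      p_start: float = 0.0, p_end: float = 0.5,
                      base: float = 4.0) -> float:
    """Probability of requesting noisy documents at a given training step.

    Hypothetical schedule: ramps from p_start to p_end. With base > 1 the
    ramp starts slowly and accelerates, so hard retrieval conditions
    dominate late in training.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    if base <= 1.0:
        raise ValueError("base must be greater than 1")
    progress = min(max(step / total_steps, 0.0), 1.0)
    ramp = (base ** progress - 1.0) / (base - 1.0)  # 0 at the start, 1 at the end
    return p_start + ramp * (p_end - p_start)


def choose_document_quality(step: int, total_steps: int, rng: random.Random) -> bool:
    """Return True to request useful documents, False to request noisy ones."""
    return rng.random() >= noise_probability(step, total_steps)


for step in (0, 50, 100):
    print(step, round(noise_probability(step, 100), 3))  # 0 0.0, then 50 0.167, then 100 0.5

rng = random.Random(0)
useful = choose_document_quality(step=80, total_steps=100, rng=rng)
```

The convex ramp keeps early training mostly on useful documents. The policy can therefore learn the search-and-answer format before it has to cope with frequent noise.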

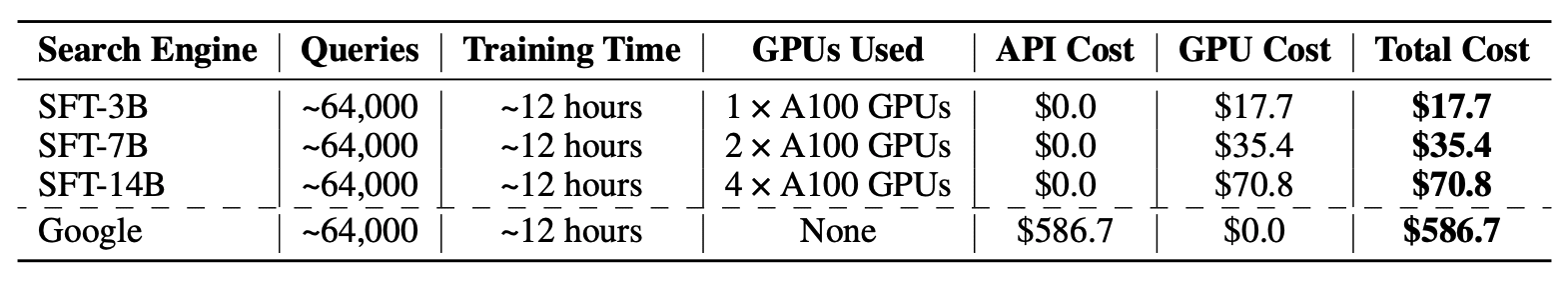

Interaction Turns Study: how the number of interaction turns and the reward change over the course of training.

- Cost Efficiency: ZEROSEARCH is substantially cheaper to train than approaches that query commercial search APIs. Running the simulation LLM does require GPU infrastructure. Even so, the reported analysis shows that training costs fall by approximately 88% compared with a commercial search engine over the same number of training iterations.
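The 88% figure can be read as a simple cost ratio. Let $C_{\text{API}}$ be the cost of a training run against a commercial search API, and let $C_{\text{sim}}$ be the cost of the same number of iterations with the simulation LLM, GPU time included. The reported reduction then means:

$$
1 - \frac{C_{\text{sim}}}{C_{\text{API}}} \approx 0.88
\quad\Longrightarrow\quad
C_{\text{sim}} \approx 0.12\, C_{\text{API}}
$$

The simulated run therefore costs roughly one-eighth as much as the API-based run.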

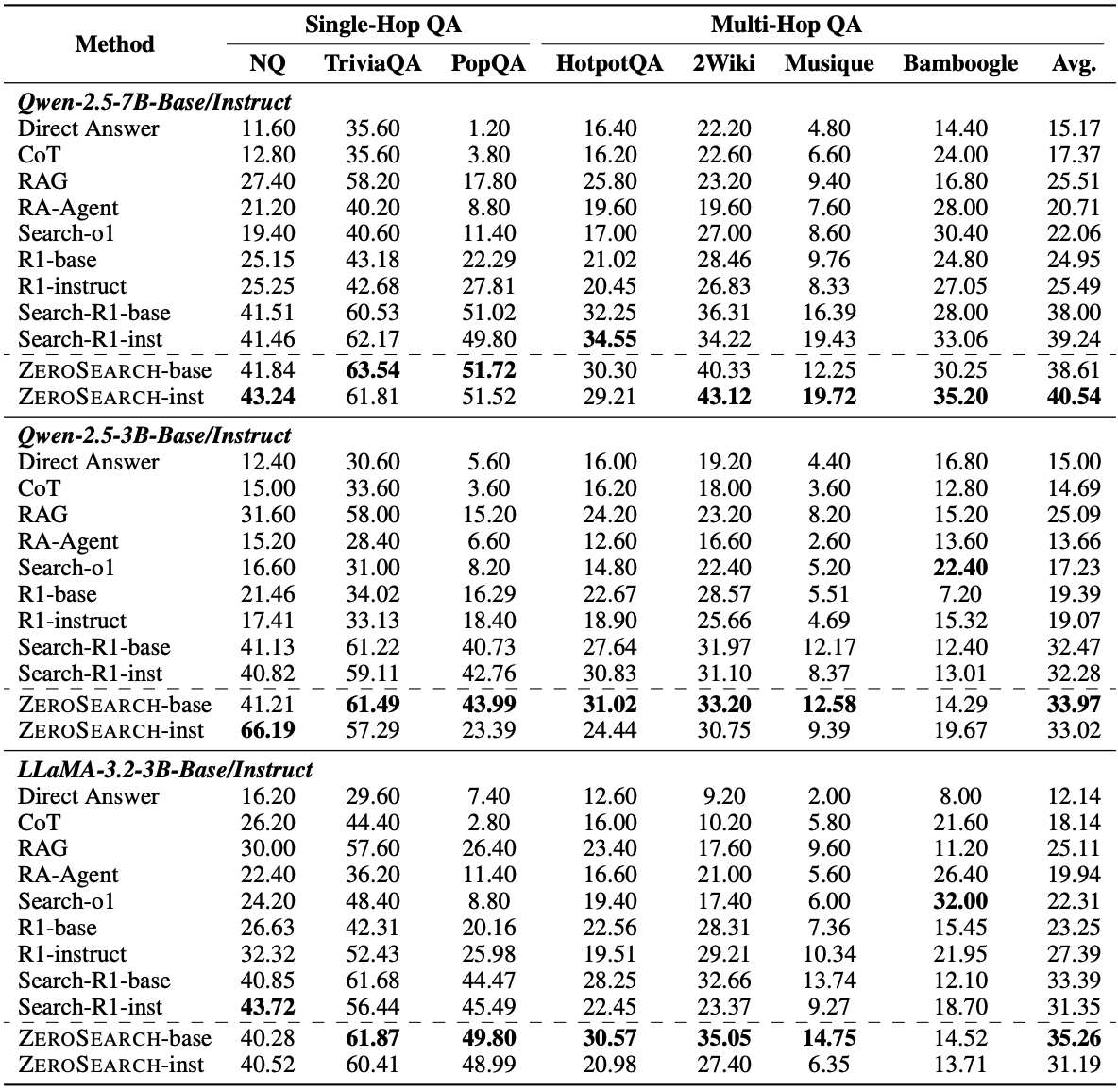

Quantifiable Results

The effectiveness of ZEROSEARCH is supported by empirical evidence:

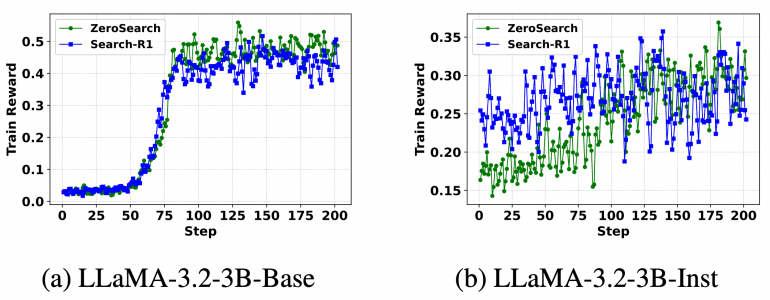

- A 3B LLM used as the simulated search engine successfully incentivizes the policy model’s search capabilities

- A 7B retrieval module achieves performance comparable to using a real search engine

- A 14B retrieval module surpasses real search engine performance across multiple benchmarks

- The framework generalizes effectively across both base and instruction-tuned models of various parameter sizes

This could mark a major shift in how AI systems are trained to perform search tasks. Until now, RL-based training of search capabilities has relied on expensive API calls to search services controlled by large technology companies. By cutting training costs by approximately 88%, ZEROSEARCH makes this kind of training more accessible. The benefit is greatest for smaller AI companies and startups with limited budgets, for whom API costs have been a major barrier to entry.

Practical Applications

The approach offers several practical advantages:

- Control Over Training: With real search engines, the quality of returned documents is unpredictable. With simulated search, developers decide whether the model receives useful or noisy documents at each stage of training, which leads to more reliable and consistent results.

- Framework Flexibility: ZEROSEARCH is compatible with widely used RL algorithms, including Proximal Policy Optimization (PPO), Group Relative Policy Optimization (GRPO), and REINFORCE++.

- Reduced Dependencies: The technique suggests a future where AI systems can develop increasingly sophisticated capabilities through self-simulation rather than relying on external services, potentially changing the economics of AI development and reducing dependencies on large technology platforms.
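This compatibility follows from the fact that the simulation LLM only replaces the source of the retrieved documents, while the policy update itself is unchanged. As an illustration, the sketch below computes standard GRPO-style group-relative advantages by normalizing the rewards of several rollouts for the same question with the group mean and standard deviation. The reward values and the function name are hypothetical. This sketch uses the population standard deviation, a detail on which implementations vary.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages for a group of rollouts of the same question.

    Each reward is centered on the group mean and scaled by the group's
    population standard deviation. eps avoids division by zero when all
    rewards in the group are equal.
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


# Hypothetical rewards: 1.0 for a correct final answer, 0.0 otherwise.
advantages = group_relative_advantages([1.0, 0.0, 1.0, 0.0])
print([round(a, 3) for a in advantages])  # [1.0, -1.0, 1.0, -1.0]
```

Rollouts that answered correctly after searching the simulated engine get positive advantages, and the rest get negative ones. No part of this update depends on whether the documents came from a real or a simulated search engine.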

This evidence-based approach to training search-capable LLMs offers a viable alternative to training against real search engines, with reported improvements in performance, cost efficiency, and training stability.