Baichuan-M3: An Open Medical Model That Conducts Consultations Like a Real Doctor and Outperforms GPT-5.2 on Benchmarks

10 February 2026

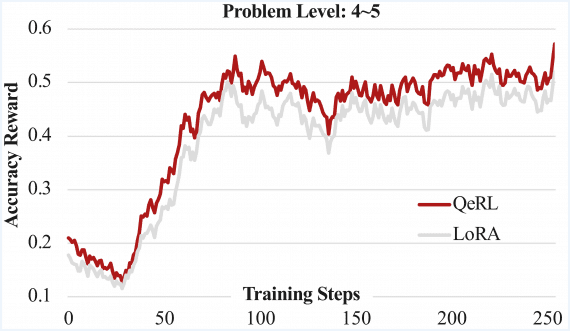

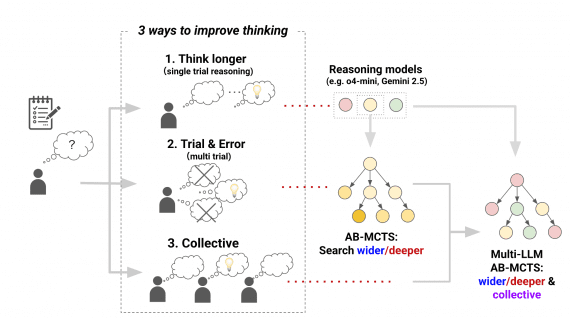

A research team from the Chinese company Baichuan has introduced Baichuan-M3, an open medical language model that, rather than working in the traditional question-and-answer mode, conducts a full clinical dialogue, actively…