Deep Learning Prerequisites: Logistic Regression in Python, from The Lazy Programmer, is a course offered on Udemy. It is one of the first courses in a long series focused on teaching deep learning using Python. This course is a natural progression from the Linear Regression course, which is offered in the same series of Deep Learning prerequisites.

Skills you’ll develop

This course will teach you how to tackle classification problems. With linear regression, you were only able to predict continuous values, which leaves out at least half of the problems that you will be tackling in real life. Classification problems can be binary, multiclass or ordinal (ordinal problems are not covered in this course). You will learn how to train and evaluate a logistic regression model and use it to make predictions on classification problems.

Requirements

The requirements for this course are similar to those of the Linear Regression course. However, the cost function and the resulting equations of logistic regression require a little more mathematical knowledge. You need to understand basic linear algebra, as well as basic calculus. Basic knowledge of Python and NumPy is also recommended.

It is also recommended to have either good knowledge of linear regression or to have completed the Linear Regression course in the Deep Learning prerequisites series. This course and the Linear Regression course are very important foundations for learning Neural Networks, so it is important to have these prerequisites in order to get the most out of the later courses.

Contents of the course

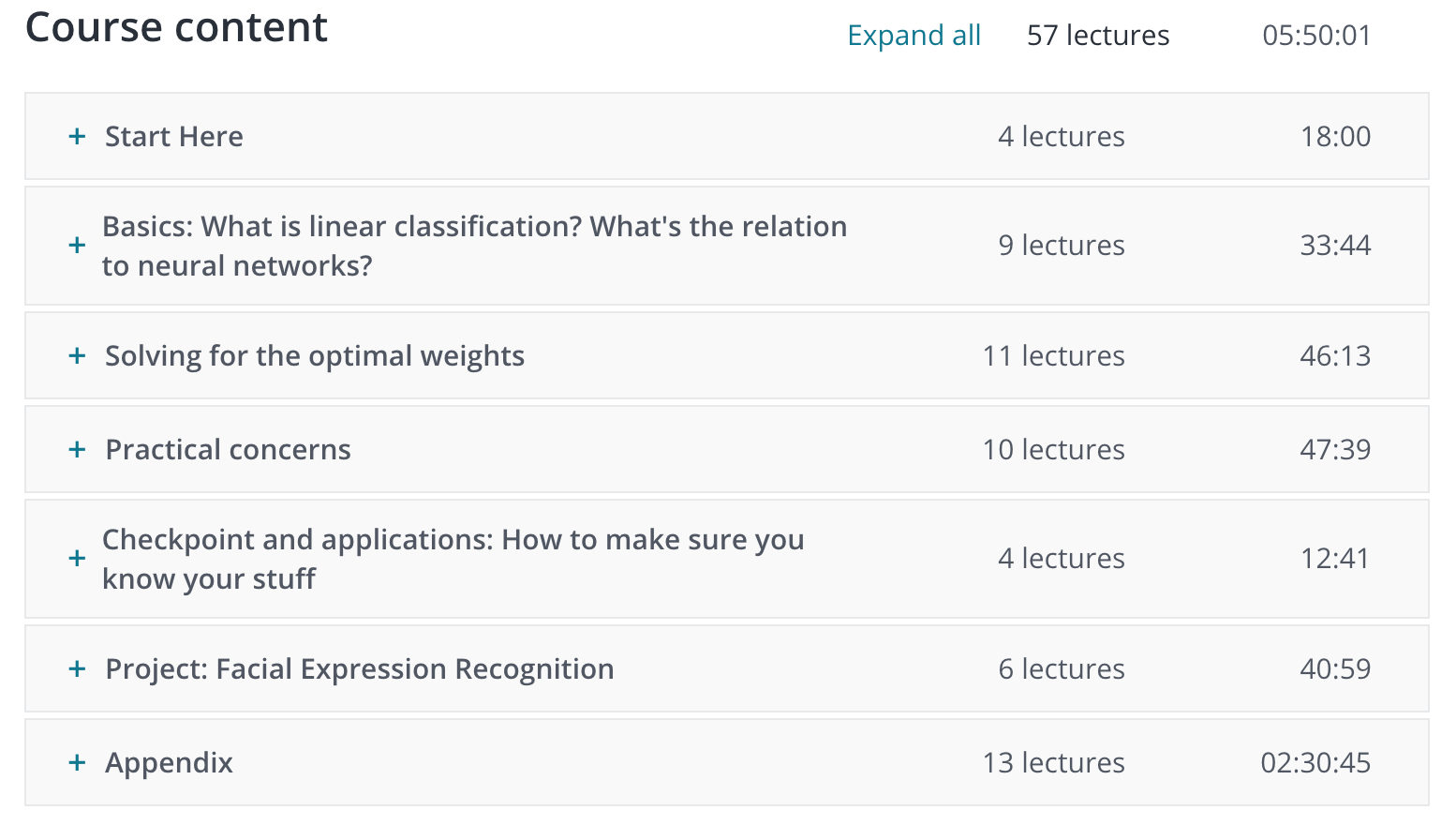

The Deep Learning Prerequisites: Logistic Regression in Python course starts off by showing how to approach classification problems using linear regression and why we need to develop new methods to solve such problems. You will learn how to minimize the cross-entropy error function, as well as how to maximize the likelihood function to find the optimal weights. Unlike linear regression, logistic regression has no closed-form solution for the optimal weights, so they have to be found iteratively. The course also shows that there are multiple equivalent ways to define the cost function.
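To make the equivalence between the two cost functions concrete, here is a minimal NumPy sketch. It is not taken from the course; the toy data, the weights `w` and `b`, and the function names are all made up for illustration. It shows that the mean cross-entropy is just the negative log-likelihood divided by the number of samples, so minimizing one is the same as maximizing the other.

```python
import numpy as np

def sigmoid(z):
    """Map real-valued scores to probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def cross_entropy(y, p, eps=1e-12):
    """Mean binary cross-entropy between labels y and predicted probabilities p."""
    p = np.clip(p, eps, 1.0 - eps)  # avoid log(0)
    return -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

def log_likelihood(y, p, eps=1e-12):
    """Bernoulli log-likelihood of labels y under predicted probabilities p."""
    p = np.clip(p, eps, 1.0 - eps)  # avoid log(0)
    return np.sum(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))

# Hypothetical toy data: 4 samples, 2 features, with made-up weights.
X = np.array([[0.5, 1.0], [1.5, -0.5], [-1.0, 0.3], [-0.2, -1.2]])
y = np.array([1.0, 1.0, 0.0, 0.0])
w = np.array([1.0, 0.5])
b = 0.0

p = sigmoid(X @ w + b)       # predicted probability of class 1
ce = cross_entropy(y, p)     # quantity to minimize
ll = log_likelihood(y, p)    # quantity to maximize

print("cross-entropy:", ce)
print("negative log-likelihood / N:", -ll / len(y))
```

Both printed values agree, which is why the two formulations lead to the same weights.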

Regularization

After covering gradient descent, an algorithm used to find the optimal weights, the course moves on to some vital practical concepts needed to apply logistic regression to real-life problems. It first covers L1 and L2 regularization, topics which are similar to regularization for linear regression but applied to a different cost function. Adding a regularization term to the cost function is very important when working with high-dimensional and/or messy data in order to avoid overfitting.
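As a rough illustration of how gradient descent and L2 regularization fit together, the sketch below trains a logistic regression model on synthetic data. It is a simplified example written for this review, not the course's code; the function name `fit_logistic_gd`, its parameters, and the generated data are all assumptions. The L2 penalty is taken to be `(l2 / 2) * ||w||^2`, which adds `l2 * w` to the gradient.

```python
import numpy as np

def sigmoid(z):
    """Map real-valued scores to probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def fit_logistic_gd(X, y, learning_rate=0.1, l2=0.0, n_steps=1000):
    """Batch gradient descent on the mean cross-entropy plus (l2 / 2) * ||w||^2."""
    n_samples, n_features = X.shape
    w = np.zeros(n_features)
    b = 0.0
    for _ in range(n_steps):
        p = sigmoid(X @ w + b)
        error = p - y                               # gradient w.r.t. the scores
        grad_w = X.T @ error / n_samples + l2 * w   # the bias is not penalized
        grad_b = error.mean()
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

# Synthetic, linearly separable data (assumed for illustration only).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)

w_plain, b_plain = fit_logistic_gd(X, y, l2=0.0)
w_reg, b_reg = fit_logistic_gd(X, y, l2=1.0)

accuracy = np.mean((sigmoid(X @ w_plain + b_plain) > 0.5) == (y == 1.0))
print("training accuracy (no penalty):", accuracy)
print("weight norm without penalty:", np.linalg.norm(w_plain))
print("weight norm with L2 penalty:", np.linalg.norm(w_reg))
```

The penalized model ends up with noticeably smaller weights, which is the mechanism that helps against overfitting. An L1 penalty would instead add `l1 * np.sign(w)` to the gradient and tends to push some weights to exactly zero.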

Generalization

After working through a few quick examples, you will be able to work by yourself on a facial expression recognition problem. This project is one of the most valuable learning experiences in this course. Besides working with sklearn for the first time in the series, you will learn how to generalize the binary classification model to a multi-class model. You will also learn how to work through problems with unbalanced classes. This is useful since many binary classification problems have this characteristic, such as rare disease prediction and fraud detection.
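The two ideas in this section can be sketched in a few lines of NumPy. This is an illustrative example, not the course's approach: it assumes the multi-class generalization is done with a softmax function, and it assumes class imbalance is handled with per-class weights computed as `n_samples / (n_classes * class_count)`, the same heuristic scikit-learn uses for `class_weight="balanced"`. The function names and toy labels are made up.

```python
import numpy as np

def sigmoid(z):
    """Binary case: probability of class 1 given a score z."""
    return 1.0 / (1.0 + np.exp(-z))

def softmax(scores):
    """Multi-class case: turn each row of scores into probabilities."""
    shifted = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    exp_scores = np.exp(shifted)
    return exp_scores / exp_scores.sum(axis=1, keepdims=True)

def balanced_class_weights(y, n_classes):
    """Weight each class inversely to its frequency in the integer labels y."""
    counts = np.bincount(y, minlength=n_classes)
    return len(y) / (n_classes * counts)

# With two classes and scores [0, z], softmax reduces to the sigmoid.
z = 1.5
two_class = softmax(np.array([[0.0, z]]))
print(two_class[0, 1], sigmoid(z))

# Three-class example with made-up scores.
probs = softmax(np.array([[2.0, 1.0, 0.1], [0.5, 0.5, 0.5]]))
print(probs.sum(axis=1))

# Hypothetical unbalanced labels: 95 negatives and 5 positives.
y = np.array([0] * 95 + [1] * 5)
weights = balanced_class_weights(y, n_classes=2)
print(weights)
```

Multiplying each sample's contribution to the cross-entropy by the weight of its class makes mistakes on the rare class cost more, which is one common way to deal with unbalanced data.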

Price and certificate

This course does not offer any certificate. The advertised price is around $200; however, there is almost always a discount available, and you can expect to pay around $10-20.

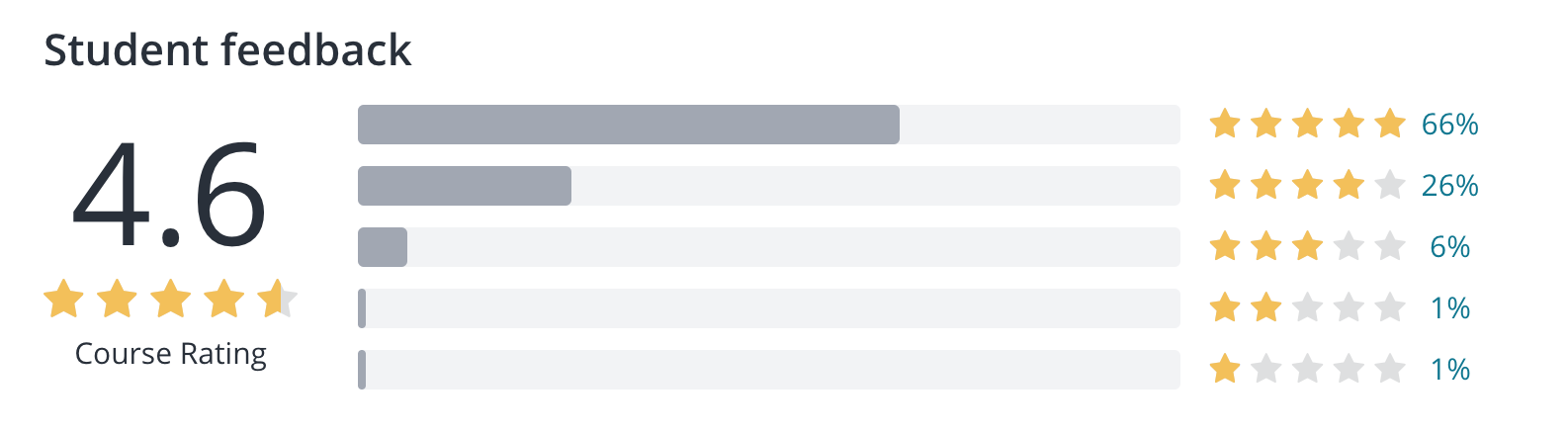

Overall, this is a good course for anyone serious about starting a career in data science or machine learning. It is a good introduction to logistic regression, especially for the theory necessary for Neural Networks. There is also a good practical example, which wasn’t the case with previous courses in the series.