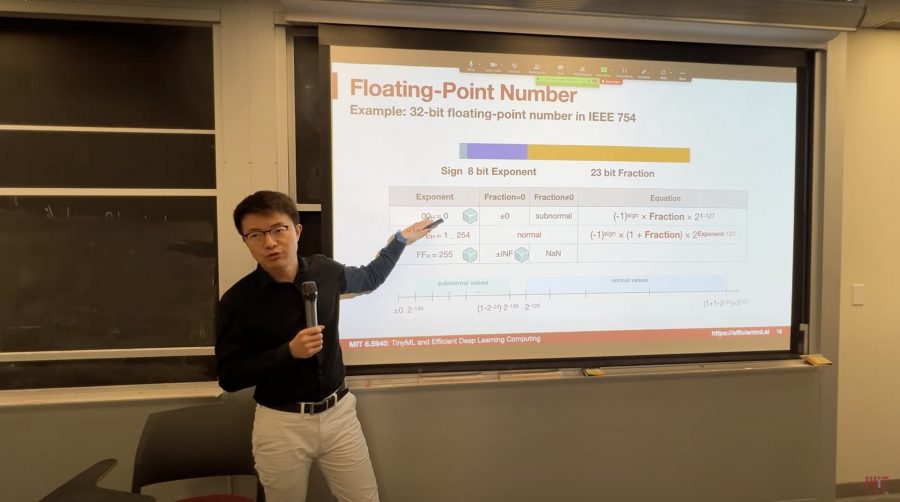

In recent years, large language models and diffusion models have shown impressive results. However, their heavy compute and memory requirements pose significant challenges for researchers and developers. The TinyML & Efficient DL Computing course, now available on YouTube, is a valuable guide for professionals and students who want to make deep learning more resource-efficient.

This course, taught at MIT by Song Han together with Han Cai and Ji Lin, focuses on methods and techniques for deploying deep neural networks on resource-constrained devices. You can choose between recordings of the in-person lectures and Zoom videos. New lectures are released twice a week, on Tuesdays and Thursdays.

Currently, there are 7 lectures available, covering the following topics:

- Introduction – Tasks addressed by deep learning;

- Fundamentals of neural networks;

- Pruning;

- Sparsity;

- Quantization (Part 1);

- Quantization (Part 2);

- Neural Architecture Search.

The core subjects explored in this course include:

- Model Compression: Techniques that significantly reduce the size of deep models while preserving their accuracy, which is crucial for running them on mobile devices.

- Pruning: Removing redundant network parameters, which reduces memory requirements and can speed up inference.

- Quantization: Reducing the numerical precision of model weights (for example, from 32-bit floating point to 8-bit integers), which saves memory with minimal loss of accuracy.

- Neural Architecture Search: Automated search for optimal network architectures tailored to specific tasks.

- Distributed Training: Efficient utilization of computing clusters to expedite the training process.

- Data/Model Parallelism: Splitting the training data (data parallelism) or the model itself (model parallelism) across multiple devices to speed up training or fit larger models.

- Gradient Compression: Compressing the gradients exchanged between devices to reduce communication volume during distributed training.

- On-Device Optimization: Adapting models to the constraints of specific hardware so they run more efficiently.

The course also covers applications of these methods in areas such as natural language processing, video recognition, and point cloud processing, which makes it relevant to professionals across many domains.
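To make two of the topics listed above more concrete, here is a minimal pure-Python sketch of magnitude-based pruning and symmetric per-tensor 8-bit linear quantization applied to a flat list of weights. These are generic textbook variants chosen for illustration, not the course's own lab code; the function names `magnitude_prune`, `quantize_int8`, and `dequantize` are hypothetical.

```python
from typing import List, Tuple


def magnitude_prune(weights: List[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be in [0, 1]")
    num_to_prune = int(round(sparsity * len(weights)))
    if num_to_prune == 0:
        return list(weights)
    # Indices sorted by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    pruned = set(order[:num_to_prune])
    return [0.0 if i in pruned else w for i, w in enumerate(weights)]


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor linear quantization to signed 8-bit integer codes."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest magnitude to 127; guard against an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the integer codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    w = [0.8, -0.05, 0.3, -0.9, 0.01, 0.5]
    sparse = magnitude_prune(w, sparsity=0.5)
    print(sparse)  # [0.8, 0.0, 0.0, -0.9, 0.0, 0.5]
    codes, scale = quantize_int8(sparse)
    print(codes)   # [113, 0, 0, -127, 0, 71]
    print(dequantize(codes, scale))
```

Pruning keeps only the largest-magnitude weights, and quantization then stores each remaining weight as an 8-bit code plus one shared float `scale`. Because rounding is the only source of error here, each dequantized value differs from the original by at most `scale / 2`.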

Students in this course have the opportunity to gain practical experience in deploying and running large generative models on resource-constrained devices. This is particularly relevant today, as efficient use of computing resources becomes increasingly critical in deep learning.