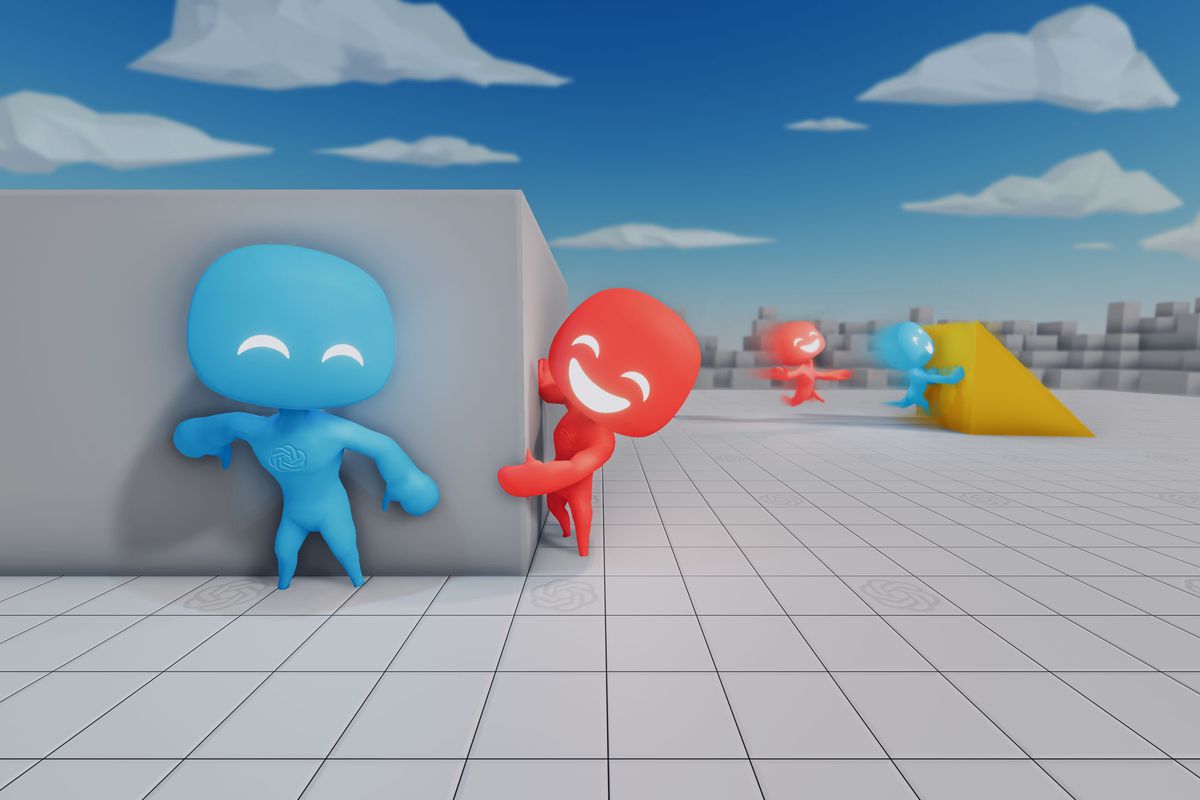

New research from OpenAI shows that AI agents can learn to use clever tricks and manipulate their environment to reach a predefined goal. In the paper “Emergent Tool Use from Multi-Agent Autocurricula”, the researchers study multi-agent interaction in a simple game of hide-and-seek.

They describe how the AI agents learned increasingly sophisticated ways to hide from and seek each other after playing 500 million games. According to the researchers, as training progressed the agents became steadily better at exploiting the environment and finding smart ways to use the available tools. After 25 million games, agents had learned to use boxes to block exits and form rooms. After 75 million games, they had learned to work with each other, pass boxes, and use ramps to get over obstacles.

To train the hide-and-seek agents, the OpenAI researchers built a new simulation environment, adding to the many RL simulators developed over the past few years. The hide-and-seek simulator is built on a high-fidelity physics engine, so that agents can physically manipulate objects.

The agents were trained using self-play and Proximal Policy Optimization (PPO). During optimization, the agents' value function was allowed to use privileged information about obscured objects and other agents.
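To make the training setup more concrete, here is a minimal Python sketch of two of the ideas mentioned above: the clipped surrogate loss that PPO optimizes, and the split between what a policy sees and what a value function with privileged information sees. This is an illustration only, not the researchers' implementation. The function names, the clipping value of 0.2, and the choice to zero out hidden entities are all assumptions made for the example.

```python
import numpy as np


def ppo_clip_loss(new_logp, old_logp, advantages, clip_eps=0.2):
    """Clipped surrogate loss from PPO, written as a quantity to minimize.

    clip_eps=0.2 is an assumed, commonly used value, not taken from the article.
    """
    # Probability ratio between the updated policy and the policy that
    # collected the data.
    ratio = np.exp(new_logp - old_logp)
    unclipped = ratio * advantages
    clipped = np.clip(ratio, 1.0 - clip_eps, 1.0 + clip_eps) * advantages
    # Take the pessimistic (smaller) objective per sample, then negate
    # so that minimizing the loss maximizes the objective.
    return -np.mean(np.minimum(unclipped, clipped))


def policy_input(state, visible_mask):
    """Hypothetical helper: the policy only sees currently visible entities.

    state has shape (num_entities, num_features); hidden rows are zeroed
    here as a simplification.
    """
    return state * visible_mask[:, None]


def value_input(state):
    """Hypothetical helper: the value function gets the full state,
    including obscured objects and agents (privileged information)."""
    return state


# Toy example: three entities, the second one is hidden from the agent.
state = np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]])
visible_mask = np.array([1.0, 0.0, 1.0])
actor_obs = policy_input(state, visible_mask)
critic_obs = value_input(state)
```

The point of the split is that the privileged information is only needed while training: the value function helps estimate advantages during optimization, while the policy that actually plays the game relies on its own observations.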

More about the method and the hide-and-seek simulation environment can be found in the official blog post, where the researchers discuss the potential and significance of this work.