Researchers from Google have proposed a new type of deep neural network, Weight-agnostic Neural Networks, which are able to perform various tasks even with random weights.

In their paper, Adam Gaier and David Ha explore whether a neural network architecture can be designed so that it encodes solutions without learning any weight parameters. They introduce an architecture search method that finds minimal neural network architectures able to perform a number of reinforcement learning tasks.

Inspired by biological neural networks, the researchers tried to answer the question of whether neural networks can actually encode solutions for a set of (most probably similar) problems. To do so, they evaluate architectures by their performance when weights are drawn from a random distribution, rather than by their performance with optimal weights.
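The evaluation idea can be sketched in a few lines. This is a minimal illustration rather than the authors' code: the connectivity masks, the `tanh` activation, the toy regression target and the names `forward`, `toy_score` and `weight_agnostic_score` are all assumptions made for the example. Every active connection in a fixed topology receives the same weight value, and the architecture's score is the average over several such values.

```python
import numpy as np

# Hypothetical fixed topology as 0/1 connectivity masks
# (2 inputs, 3 hidden units, 1 output); not taken from the paper.
MASK_IN_HID = np.array([[1, 0, 1],
                        [0, 1, 1]], dtype=float)
MASK_HID_OUT = np.array([[1], [1], [0]], dtype=float)


def forward(x, shared_weight):
    """Run the network with every active connection set to the same weight."""
    hidden = np.tanh(x @ (MASK_IN_HID * shared_weight))
    return np.tanh(hidden @ (MASK_HID_OUT * shared_weight))


def toy_score(shared_weight, x, target):
    """Toy stand-in for task performance: negative mean squared error."""
    return -float(np.mean((forward(x, shared_weight) - target) ** 2))


def weight_agnostic_score(weight_values, x, target):
    """Average the score over several shared weight values (no training)."""
    return float(np.mean([toy_score(w, x, target) for w in weight_values]))


# Usage: score the fixed topology on made-up data with randomly drawn weights.
rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=(32, 2))
target = np.tanh(x.sum(axis=1, keepdims=True))
weight_values = rng.uniform(-2.0, 2.0, size=6)
score = weight_agnostic_score(weight_values, x, target)
```

Because the score depends only on the topology and the sampled weight values, two architectures can be compared without training either of them.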

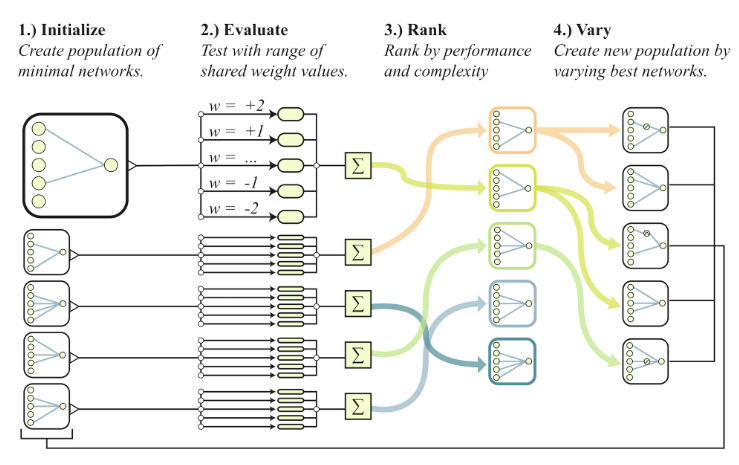

The neural architecture search method for weight-agnostic networks uses a population of networks that share weights. The population initially consists of a large number of minimal networks. Each network is then evaluated using the same range of shared weight values, and its score is the cumulative reward averaged over all rollouts with the different weight values. Finally, the networks are ranked by performance and complexity (to avoid overly complex architectures), and a new population is generated by modifying the best networks.
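The search loop described above can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: a network is represented as a set of connection ids, `mutate` only adds a connection, `toy_rollout` is a made-up reward function, and ranking is a plain lexicographic sort (performance first, then connection count), which may differ from the ranking scheme used in the paper.

```python
import random


def evaluate(network, weight_values, rollout):
    """Mean cumulative reward over one rollout per shared weight value."""
    return sum(rollout(network, w) for w in weight_values) / len(weight_values)


def mutate(network, rng):
    """Hypothetical mutation: return a copy with one more connection id."""
    return network | {rng.randrange(100)}


def search(rollout, weight_values, pop_size=16, generations=10, seed=0):
    rng = random.Random(seed)
    # Start from minimal networks: each has a single connection.
    population = [frozenset({rng.randrange(100)}) for _ in range(pop_size)]
    for _ in range(generations):
        scored = [(evaluate(n, weight_values, rollout), len(n), n)
                  for n in population]
        # Rank by performance (higher is better), then by complexity
        # (fewer connections is better).
        scored.sort(key=lambda t: (-t[0], t[1]))
        best = [n for _, _, n in scored[:max(1, pop_size // 4)]]
        # New population: keep the best, fill the rest with mutated copies.
        population = best + [mutate(rng.choice(best), rng)
                             for _ in range(pop_size - len(best))]
    return max(population, key=lambda n: evaluate(n, weight_values, rollout))


def toy_rollout(network, w):
    """Made-up reward: favours networks that contain low connection ids."""
    return sum(1.0 for c in network if c < 10) * abs(w)


# Usage: every network is evaluated with the same shared weight values.
shared_weights = [-2.0, -1.0, 1.0, 2.0]
best_network = search(toy_rollout, shared_weights)
```

In a real setting `rollout` would run an episode of the reinforcement learning task with all of the network's connections set to `w` and return the cumulative reward.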

The researchers evaluated the proposed method on several reinforcement learning tasks as well as supervised learning tasks. They showed that a weight-agnostic network can perform well on several tasks without weight training. The method also found network architectures that achieve higher-than-chance accuracy on MNIST, again without training.