NVIDIA has introduced DatasetGAN, a synthetic image generator with annotations. The system requires up to 40 manually annotated images as input and is superior to existing state-of-the-art models.

The use of synthetic data for training neural networks is becoming increasingly popular, because in this case, the labor costs associated with creating large datasets are reduced. Although generative-adversarial neural networks can produce an infinite number of unique high-quality images, computer vision training algorithms require datasets with a large number of annotations. The system works like this: first, the images are manually annotated, and then the interpreter is trained on this data to create object annotations based on the space of hidden variables. To create realistic images, DatasetGAN uses NVIDIA StyleGAN technology. DatasetGAN can be trained on a minimum of 16 manually annotated images and has an efficiency comparable to fully managed systems that require 100 times more annotated images.

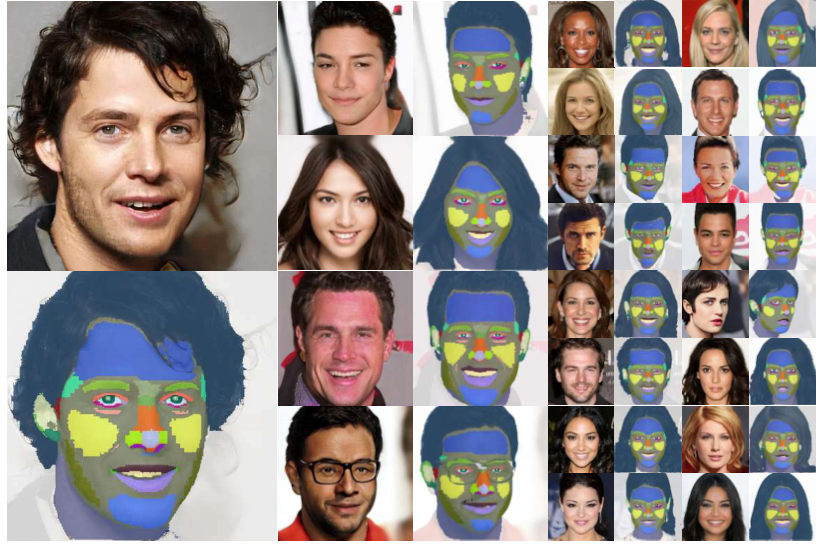

DatasetGAN is a generative-adversarial neural network consisting of a generator that learns to create realistic images, and a discriminator that learns to distinguish them from real images. After training, only the generator is used to create new images. The NVIDIA approach is that the hidden variable space used as input to the generator contains semantic information about the generated image and therefore allows for the creation of annotations. NVIDIA created a training dataset for its system by generating multiple images and storing the associated hidden variables. The synthetic images were manually annotated, and then the hidden variables were paired with the annotations for training. After that, the dataset was used to train an ensemble of classifiers based on multi-layer perceptrons used as a style interpreter. The input data of the classifier consists of feature vectors created by the neural network to generate each pixel, and the output data is a label for each pixel. For example, when a neural network generates an image of a human face, the interpreter generates annotations that point to a part of the face, such as” nose “or”ear”.

To determine the capabilities of DatasetGAN, the researchers trained the interpreter on synthetic, manually annotated images of rooms, cars, people’s faces, birds, and cats. In each case, 16 to 40 sample images were used. The neural network performance evaluation performed using the Celeb-A and Stanford Cars benchmarks showed that DatasetGAN is superior to state-of-the-art models.