Researchers from Facebook AI Research (FAIR) have conducted an interesting experiment analyzing how well object recognition systems work for people from different countries and with different income levels.

To perform the study, the researchers used the Dollar Street image dataset, which contains images of common household items from homes all over the world. The dataset was collected by photographers to highlight the differences in ‘everyday life’ among people with different income levels.

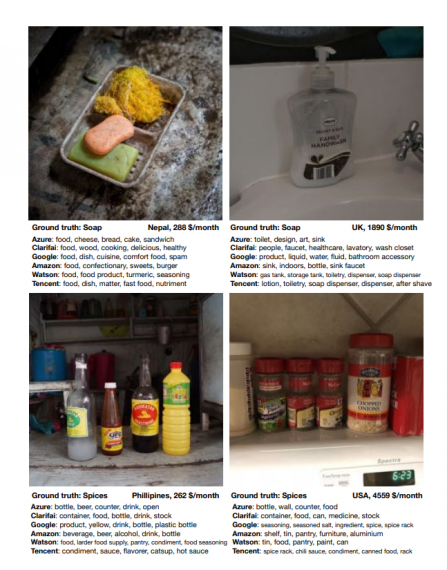

Five publicly available commercial object recognition systems were included in the study: Microsoft Azure, Clarifai, Google Cloud Vision, Amazon Rekognition and IBM Watson.

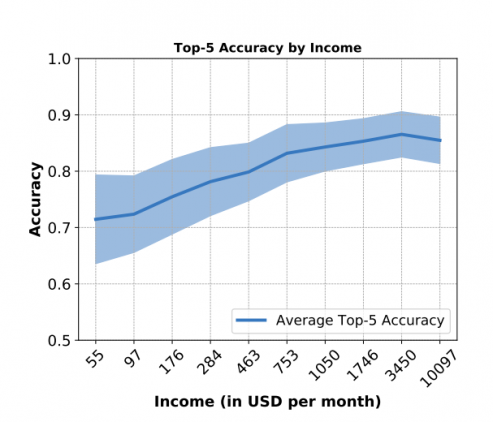

In the study, the researchers measured how object recognition accuracy correlates with household income, and they compared accuracy across different regions of the world.

They found that all of the systems perform poorly on household items from low-income households and countries. In fact, for every system, accuracy increases as monthly consumption income increases.

To explain this, the researchers analyzed the results of all the models and concluded that the failures have two main causes: differences in object appearance and differences in context. In other words, the same common objects look different across income levels and regions, and they also appear in different surroundings.

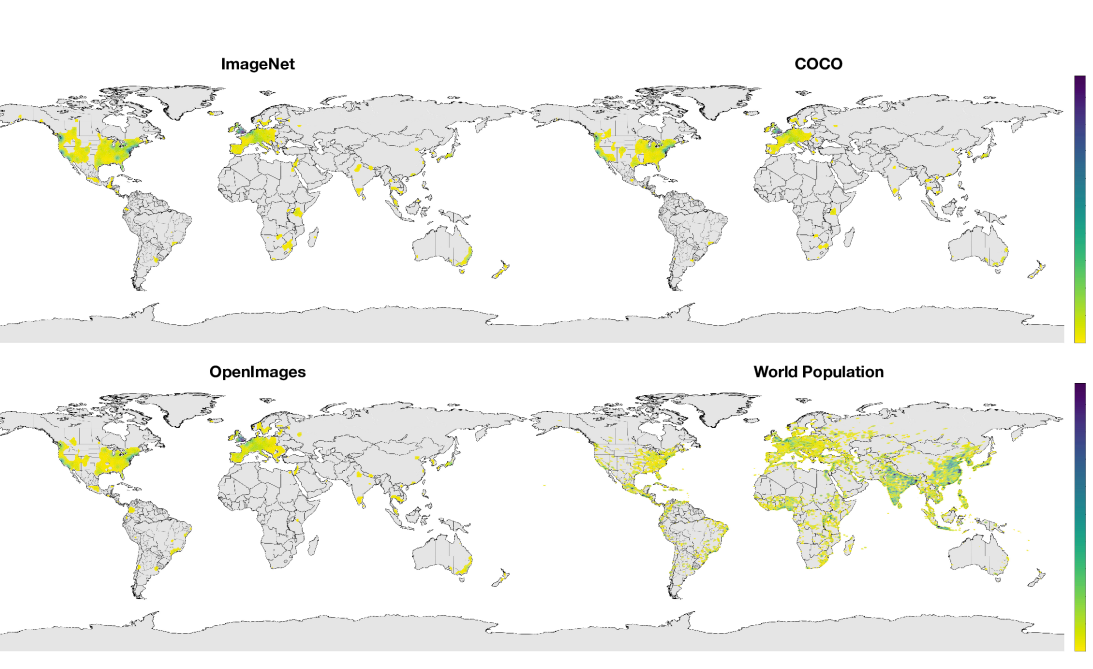

Underlying both causes, according to the researchers, is the training data: the datasets these systems were trained on are biased and contain images mostly from Western countries. To demonstrate this, the researchers plotted the geographic distribution of images in popular object recognition datasets.

This study is part of a larger project on fairness in machine learning, and it suggests that further work is still needed to make object recognition work for everyone.